Everyone’s Talking About MCP. I Built a Server to See If It’s Ready

Model Context Protocol (MCP) has received a lot of attention lately. Anthropic keeps promoting it as a unified protocol for connecting LLMs to tools and data. Cursor now supports it as a client, and even big players like OpenAI, Azure, and Google are getting on board.

But do we really need yet another abstraction layer? Isn’t the good old REST API (in the form of OpenAI’s function calling) enough?

What MCP is and is not

Well, I was a little skeptical about MCP at first. OpenAI has had function calling for a while now, and on the surface, MCP seemed to be little more than a marketing move from Anthropic. What caught my attention was a passage in the documentation saying that the protocol was inspired by the Language Server Protocol used by VSCode. At GoodData, we built our own language server for our analytics as code features, and I’m a big fan of the protocol. It is more than just a set of rules and schemas for communicating with your IDE; it changes the way a language developer thinks about integration. So I got curious whether MCP could do the same for LLM apps.

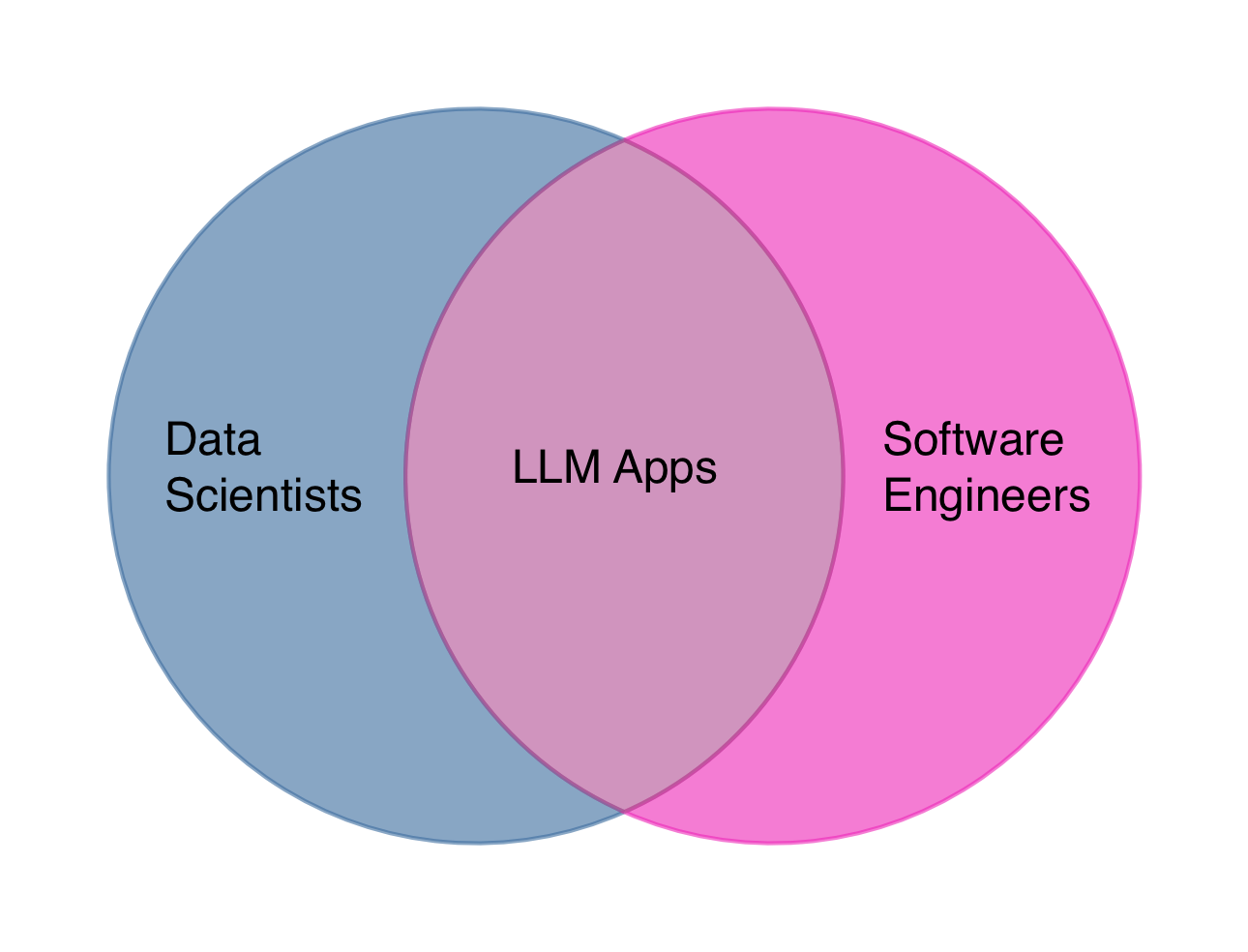

Building an AI agent, or even a simple reactive chatbot, is hard. You need to both understand how LLMs work and be a decent software engineer. A colleague of mine (hey, Jakub!) has described this well with a Venn diagram.

Model Context Protocol is a way to make LLM app development accessible to a wide range of software engineers, even those with no data science background, like me. I no longer need a deep understanding of all the LLM oddities to create an agent, just as I don’t need to know how VSCode works under the hood to add support for my language through a language server.

GoodData MCP Server PoC

There is no better way to learn about a new technology than to use it. So, I went and built a prototype MCP Server with just enough GoodData features to cover a simple usage scenario.

The whole flow: a user looks for a data analytics visualization, checks it out in the chat interface, and finally schedules a recurring email report.

As a starting point, I chose the TypeScript SDK. It’s one of the official SDKs supported by Anthropic, and there are plenty of relevant examples using it on GitHub.

As a client, I’m using the Claude Desktop app. Although some feature support is spotty, it is the official client from Anthropic.

If you want to see my implementation for yourself, check out this repository.

First MCP DevEx Impressions

MCP is still under heavy development, and it shows. The documentation is inconsistent at times, the official desktop client does not support all MCP features, and the server SDKs are inconsistent with one another. This is understandable given how fast MCP is evolving, and it is largely offset by the plethora of examples on GitHub.

MCP defines several types of integrations: Tools, Resources, and Prompts, which your server exposes, plus Sampling and Roots, which the client offers to your server.

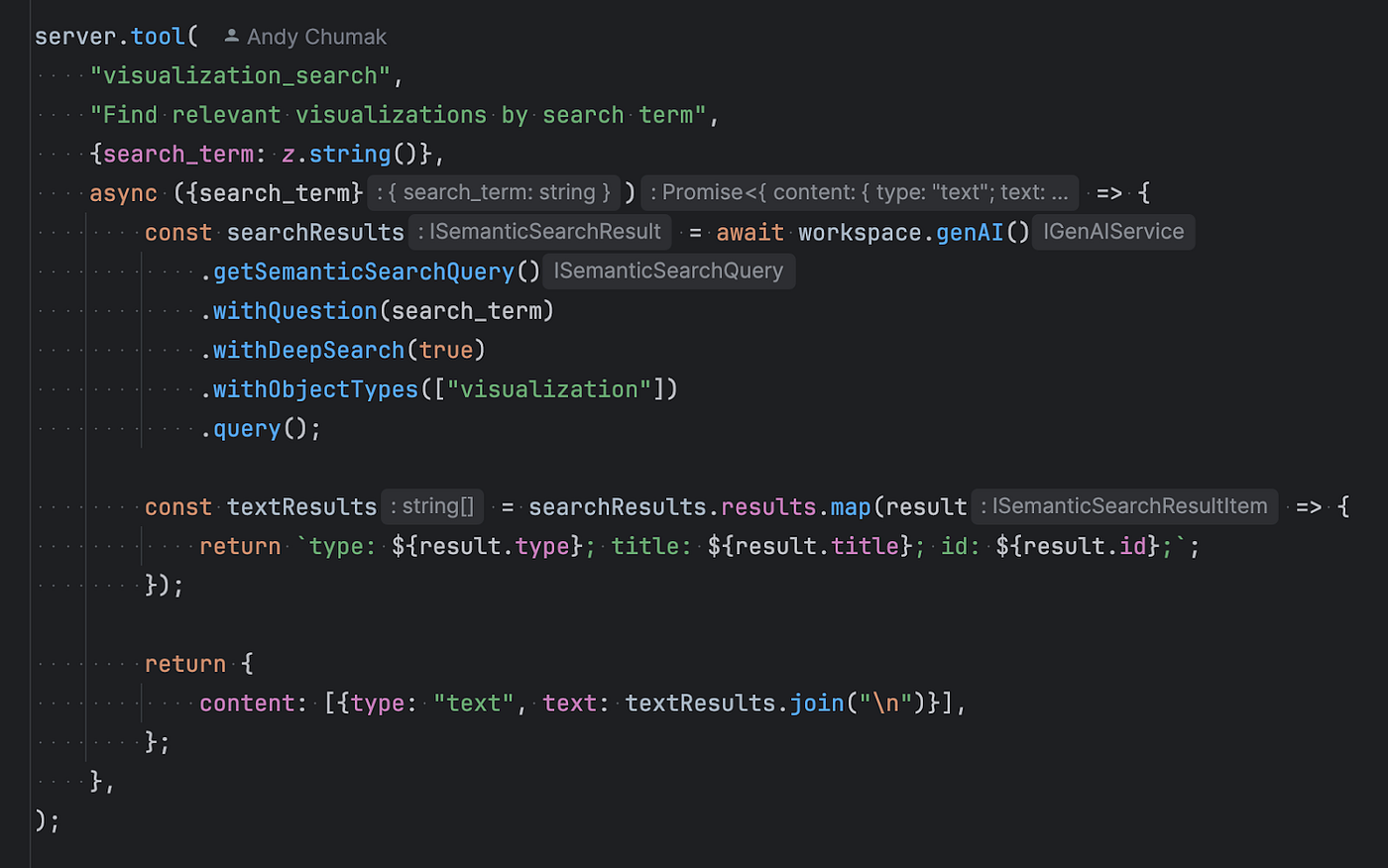

Tools

Tools are by far the best-supported and most robust type of integration. The concept has been around for a long while (remember OpenAI function calling?), and Claude Desktop supports Tools well.

The idea is that you define a function name and a JSON Schema of the expected input. Based on the user’s prompt, the LLM then requests a call to that function with matching arguments, and the client forwards the call to your server.

The main purpose of Tools is to provide a way for the AI Assistant to execute actions on the user’s behalf.
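To make the Tools flow concrete, here is a minimal, SDK-free TypeScript sketch of what a server handles: a tool descriptor as it might appear in a `tools/list` result, and a handler for the `params` of a `tools/call` request. The field names (`name`, `description`, `inputSchema`, `arguments`, `content`, `isError`) follow the MCP specification; the `search_visualizations` tool, its in-memory catalog, and the one-field input check are hypothetical stand-ins, not code from the PoC.

```typescript
// Tool descriptor as it might appear in a `tools/list` result.
interface ToolDescriptor {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
}

// `params` of a `tools/call` request sent by the client.
interface ToolCallParams {
  name: string;
  arguments: Record<string, unknown>;
}

// Result the server returns for a `tools/call` request.
interface ToolCallResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

const searchVisualizationsTool: ToolDescriptor = {
  name: "search_visualizations", // hypothetical tool name
  description: "Find saved visualizations whose title contains a keyword.",
  inputSchema: {
    type: "object",
    properties: { keyword: { type: "string", description: "Text to search for" } },
    required: ["keyword"],
  },
};

// Hypothetical in-memory catalog standing in for a real analytics backend.
const visualizationCatalog = ["Revenue by Region", "Revenue Trend", "Churn by Plan"];

function handleToolCall(params: ToolCallParams): ToolCallResult {
  if (params.name !== searchVisualizationsTool.name) {
    return { content: [{ type: "text", text: `Unknown tool: ${params.name}` }], isError: true };
  }
  // Only checks the single required field; a real server would validate
  // the whole input against `inputSchema` with a JSON Schema validator.
  const keyword = params.arguments["keyword"];
  if (typeof keyword !== "string") {
    return { content: [{ type: "text", text: "Missing string argument: keyword" }], isError: true };
  }
  const needle = keyword.toLowerCase();
  const matches = visualizationCatalog.filter((title) => title.toLowerCase().includes(needle));
  return { content: [{ type: "text", text: JSON.stringify(matches) }] };
}

// What the client might send after the LLM decides to call the tool.
const result = handleToolCall({ name: "search_visualizations", arguments: { keyword: "revenue" } });
```

Failures are returned as a normal result with `isError: true` rather than as a protocol-level error, so the LLM can see what went wrong and retry with different arguments.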

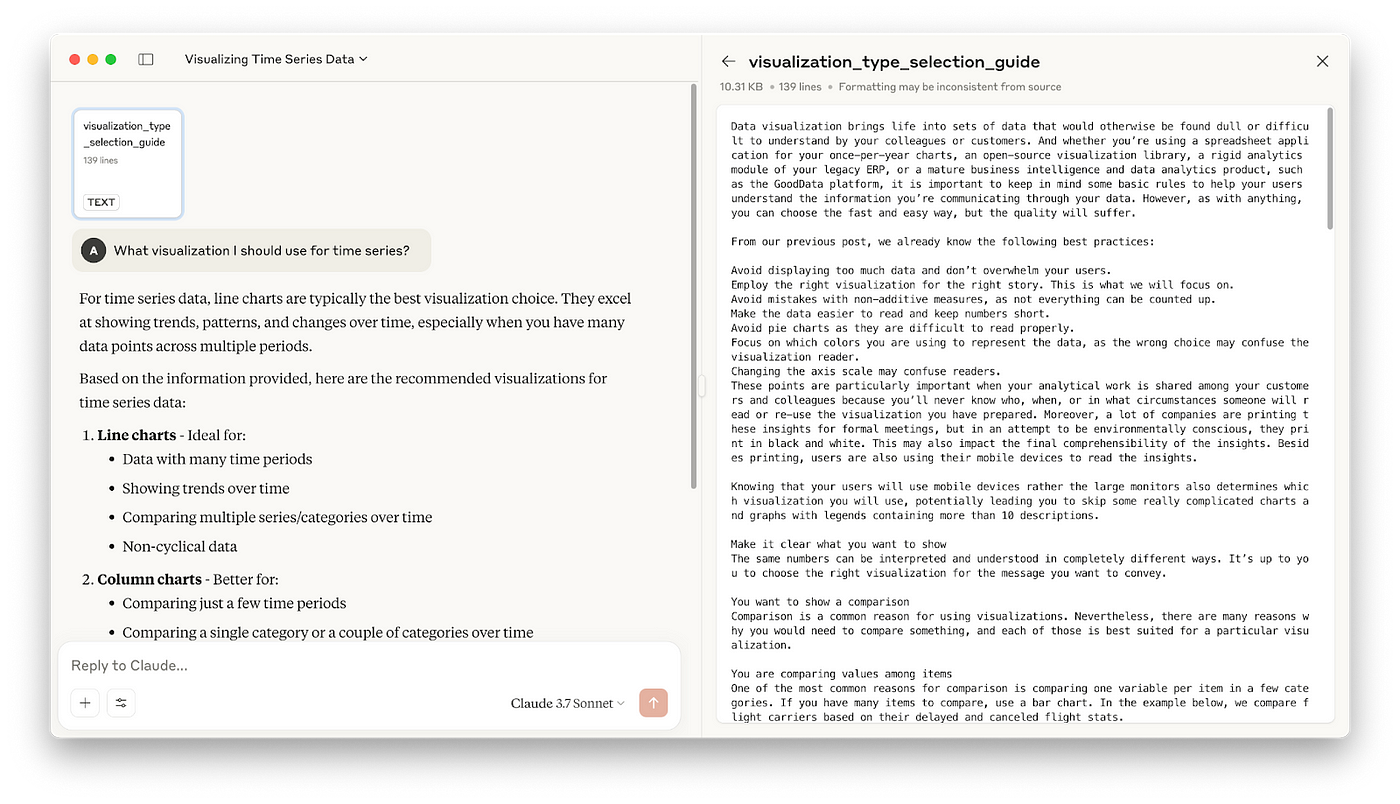

Resources

With Resources and Resource Templates, you can provide the LLM with the context it needs to answer the user’s question. It can be anything from documentation in PDF files to JSON entities from your REST API.

Personally, I can see this becoming one of the most powerful integration types in MCP. Right now, however, the LLM in Claude Desktop can’t choose which resources to use on its own: the user has to explicitly include the resource in each question. This limits the usefulness of Resources, and I hope it becomes seamless in the future.
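A Resource Template exposes a whole family of resources behind one parameterized URI, and the server fills in the content when the client sends `resources/read`. The sketch below assumes a hypothetical `gooddata://visualizations/{id}` scheme and an in-memory store of definitions. It only understands simple `{var}` placeholders rather than full RFC 6570 URI templates, and it returns `null` where a real server would answer with a JSON-RPC error.

```typescript
// Resource template as it might appear in a `resources/templates/list` result.
interface ResourceTemplate {
  uriTemplate: string;
  name: string;
  mimeType?: string;
}

// One entry of the `contents` array in a `resources/read` result.
interface ResourceContents {
  uri: string;
  mimeType?: string;
  text: string;
}

const visualizationTemplate: ResourceTemplate = {
  uriTemplate: "gooddata://visualizations/{id}", // hypothetical URI scheme
  name: "Visualization definition",
  mimeType: "application/json",
};

// Matches a URI against a template that uses only simple `{var}` placeholders.
function matchTemplate(template: string, uri: string): Record<string, string> | null {
  const names: string[] = [];
  let pattern = "";
  for (const part of template.split(/(\{[^}]+\})/)) {
    if (part.startsWith("{") && part.endsWith("}")) {
      names.push(part.slice(1, -1));
      pattern += "([^/]+)"; // a variable matches one path segment
    } else {
      pattern += part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"); // escape literal text
    }
  }
  const m = new RegExp(`^${pattern}$`).exec(uri);
  if (!m) return null;
  const vars: Record<string, string> = {};
  for (let i = 0; i < names.length; i++) {
    vars[names[i]] = decodeURIComponent(m[i + 1]);
  }
  return vars;
}

// Hypothetical stored definitions keyed by visualization id.
const visualizationDefinitions: Record<string, object> = {
  "rev-by-region": { type: "bar", metric: "revenue", viewBy: "region" },
};

// Builds a `resources/read` result, or null if the URI is not known.
function readResource(uri: string): { contents: ResourceContents[] } | null {
  const vars = matchTemplate(visualizationTemplate.uriTemplate, uri);
  if (!vars) return null;
  const definition = visualizationDefinitions[vars["id"]];
  if (!definition) return null;
  return {
    contents: [{ uri, mimeType: visualizationTemplate.mimeType, text: JSON.stringify(definition) }],
  };
}
```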

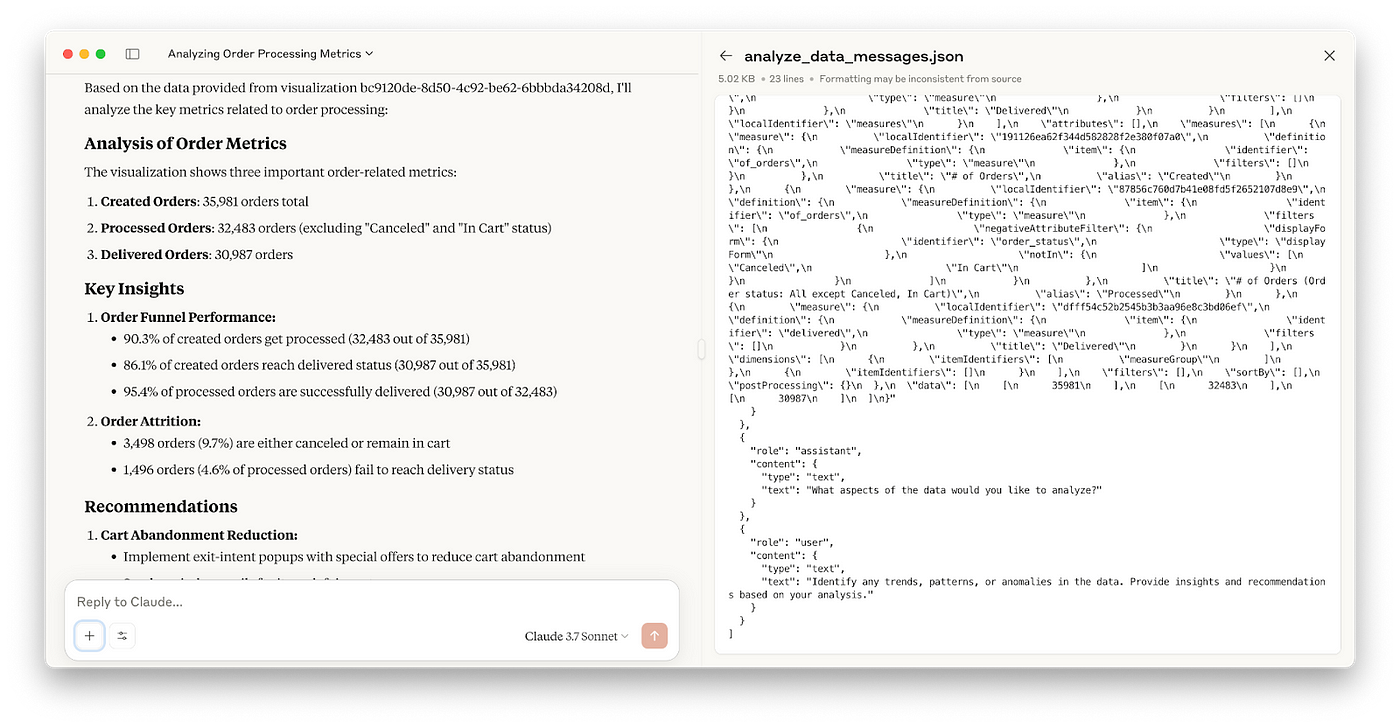

Prompts

Prompts are another integration type with great potential. On the surface, Prompts are just prompt templates predefined by your MCP server, giving the user a standardized way to ask the LLM for certain tasks. Their real power, however, lies in parameterization and workflows. Think of them as Tools on steroids: your MCP server can process the input arguments and output a set of instructions telling the LLM how to process the results, how to respond to the user, and which tools to use next in a predefined workflow.
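Here is a minimal sketch of the server side of `prompts/get` for the scenario in this article: the server validates the arguments it declared in `prompts/list`, post-processes them, and returns messages that tell the LLM which tools to call next. The prompt name, its arguments, and the `search_visualizations` and `schedule_email_report` tool names are hypothetical; only the overall shape (arguments with `required` flags, a `messages` array of role and content) follows the MCP specification.

```typescript
// Argument declaration as it might appear in a `prompts/list` result.
interface PromptArgument {
  name: string;
  description?: string;
  required?: boolean;
}

interface PromptDescriptor {
  name: string;
  description?: string;
  arguments?: PromptArgument[];
}

// Result of `prompts/get`: messages the client hands to the LLM.
interface GetPromptResult {
  description?: string;
  messages: { role: "user" | "assistant"; content: { type: "text"; text: string } }[];
}

const scheduleReportPrompt: PromptDescriptor = {
  name: "schedule_report", // hypothetical prompt name
  description: "Find a visualization and schedule it as a recurring email report.",
  arguments: [
    { name: "topic", description: "What the report should be about", required: true },
    { name: "frequency", description: "daily, weekly or monthly", required: false },
  ],
};

function getPrompt(name: string, args: Record<string, string | undefined>): GetPromptResult {
  if (name !== scheduleReportPrompt.name) throw new Error(`Unknown prompt: ${name}`);
  for (const arg of scheduleReportPrompt.arguments ?? []) {
    if (arg.required && !args[arg.name]) throw new Error(`Missing argument: ${arg.name}`);
  }
  // The server can post-process arguments before building instructions,
  // here by normalizing the frequency and falling back to a default.
  const frequency = (args["frequency"] ?? "weekly").toLowerCase();
  // Hypothetical tool names describing the next steps of the workflow.
  const text = [
    `Find a visualization about "${args["topic"]}" using the search_visualizations tool.`,
    "Show the best match to the user and ask them to confirm it.",
    `After confirmation, call the schedule_email_report tool with frequency "${frequency}".`,
  ].join("\n");
  return {
    description: scheduleReportPrompt.description,
    messages: [{ role: "user", content: { type: "text", text } }],
  };
}
```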

Claude Desktop’s support for Prompts is less seamless than its support for Tools: the user has to select the prompt explicitly and fill in its input parameters manually.

Sampling

Sampling is the latest addition to MCP. It allows the server to request an LLM completion through the client, while keeping a human in the loop. This will be especially useful for orchestrating complex multi-step tasks, like creating a data analytics visualization. Here is how it would work:

- A user asks the assistant to create a visualization.

- Let’s say the user did not specify the exact visualization type they wanted. The MCP server can apply some heuristics and narrow down the options based on the metrics and dimensionality, but in the end, it still has to decide between, say, a bar chart and a line chart. That is something a quick call to the LLM can easily solve.

- The server sends a Sampling request to the client with the user’s original prompt, a narrowed-down list of supported visualizations, and a guideline on how to select the visualization type.

- Optionally, this is then displayed to the user, who can edit, approve, or even reject the LLM call. Users can also change the selected model.

- The client forwards it to the LLM and then routes the response back to the server.

This workflow ensures that all LLM communication goes through the client, and that the user has the final say in what is sent to the LLM.
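The chart-type step described above could look roughly like this on the server: a heuristic narrows the candidates, the server builds the params of a `sampling/createMessage` request for the client, and it accepts the answer only if it is one of the offered options. The heuristic and chart type names are hypothetical; the request and result fields follow the MCP specification, which also defines optional fields such as `modelPreferences` and `includeContext` that are omitted here.

```typescript
// Params of a `sampling/createMessage` request, sent by the server to the client.
interface CreateMessageParams {
  messages: { role: "user" | "assistant"; content: { type: "text"; text: string } }[];
  systemPrompt?: string;
  maxTokens: number;
}

// Result the client returns once the (possibly user-edited) request was answered.
interface CreateMessageResult {
  role: "assistant";
  content: { type: "text"; text: string };
  model: string;
  stopReason?: string;
}

type ChartType = "bar" | "line" | "column" | "pie" | "headline"; // hypothetical names

// Hypothetical heuristic: narrow chart types by metric and dimension counts.
function candidateChartTypes(metrics: number, dimensions: number, dimensionIsTime: boolean): ChartType[] {
  if (dimensions === 0) return ["headline"];
  if (dimensionIsTime) return ["line", "column"];
  if (metrics === 1 && dimensions === 1) return ["bar", "column", "pie"];
  return ["bar", "column"];
}

// Builds the request the server hands to the client instead of calling an LLM itself.
function buildChartTypeRequest(userPrompt: string, candidates: ChartType[]): CreateMessageParams {
  return {
    systemPrompt: "Pick the single most suitable chart type from the list. Reply with the chart type only.",
    messages: [
      {
        role: "user",
        content: {
          type: "text",
          text: `User request: ${userPrompt}\nAllowed chart types: ${candidates.join(", ")}`,
        },
      },
    ],
    maxTokens: 10,
  };
}

// Accepts the LLM's answer only if it is one of the candidates the server offered.
function parseChartType(result: CreateMessageResult, candidates: ChartType[]): ChartType | null {
  const answer = result.content.text.trim().toLowerCase();
  return candidates.find((c) => c === answer) ?? null;
}
```

The server never talks to the LLM directly: it hands the request to the client, and the user may edit or reject it before anything is sent.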

At the time of writing, Claude Desktop does not yet support Sampling.

Roots

Roots are a way for an MCP client to tell an MCP server which locations it should operate within. A typical example is the directory a filesystem MCP server should work in, but the same idea applies to API base URLs or any other URI-identified resources.
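As a sketch of how a server might honor Roots: the client answers a `roots/list` request with a list of root URIs, and a filesystem-style server can then refuse to touch anything outside them. The root path below is hypothetical, and this sketch only handles `file://` URIs. Resolving `..` segments before the prefix check keeps a path like `project/../secrets` from escaping a root.

```typescript
// One entry of the `roots/list` result the client returns to the server.
interface Root {
  uri: string;
  name?: string;
}

// Hypothetical roots a client might expose for an analytics-as-code project.
const clientRoots: Root[] = [
  { uri: "file:///home/user/analytics-project", name: "Analytics project" },
];

// Resolves "." and ".." segments so a path cannot climb out of a root.
function normalizePath(path: string): string {
  const out: string[] = [];
  for (const seg of path.split("/")) {
    if (seg === "" || seg === ".") continue;
    if (seg === "..") out.pop();
    else out.push(seg);
  }
  return "/" + out.join("/");
}

// Returns true if a file:// URI lies inside (or equals) one of the roots.
function isWithinRoots(uri: string, roots: Root[]): boolean {
  const prefix = "file://";
  if (!uri.startsWith(prefix)) return false;
  const target = normalizePath(decodeURIComponent(uri.slice(prefix.length)));
  return roots.some((root) => {
    if (!root.uri.startsWith(prefix)) return false;
    const base = normalizePath(decodeURIComponent(root.uri.slice(prefix.length)));
    // Compare whole segments so "/project-old" is not treated as inside "/project".
    return base === "/" || target === base || target.startsWith(base + "/");
  });
}
```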

What’s next for MCP?

MCP has come a long way since it was first introduced, and I’m looking forward to seeing it mature further. Here are a few improvements I’d really like to see:

- More seamless integration for Resources, Prompts, and Sampling on the Claude Desktop side. I don’t want my users to manually pick the Resource or fill in the variables for a Prompt. Why not let the LLM choose to access these in the same way it does with Tools?

- I want to be able to add my own content types to the MCP client and server, and to the client UI. One good use case is GoodData’s data visualization. I can easily write a server that returns a GoodData-specific data visualization JSON, but there is no way to render it in Claude Desktop’s UI other than exporting it to a plain, non-interactive image. Imagine if an MCP server could also provide a link to a web component (or a React or Angular component) capable of rendering the new content type.

What’s in it for GoodData?

At GoodData, we are keeping a very close eye on MCP. You can expect to see more PoCs and eventually production integrations from our side. Here is a sneak peek into what’s brewing:

- Now that Anthropic has finished the specification for remote MCP servers, we’re trying it out and will eventually integrate it into our servers.

- Some time ago, we built the GoodData VSCode extension to support our Analytics as Code format. Using this extension in a VSCode-based, AI-enabled IDE (like Cursor) and adding an MCP server to it should be quite a powerful combination.

- Last but not least, we are about to release …. Making it an MCP client will unlock a lot of new use cases. Imagine an analytics-focused assistant that our customers can connect to their own MCP servers that provide additional context and tooling.

Conclusion

MCP is a very promising technology. One can see all the effort the team at Anthropic put into the protocol design. It needs to mature, though. I would be hesitant to write a generic MCP server with anything other than Tools, unless I’m targeting a very specific MCP client implementation or, better yet, I’m writing my own.