Data Pipeline as Code: Journey of our Blueprint

In this article, I will take you on a journey of the past 18 months of the development of our demo data pipeline.

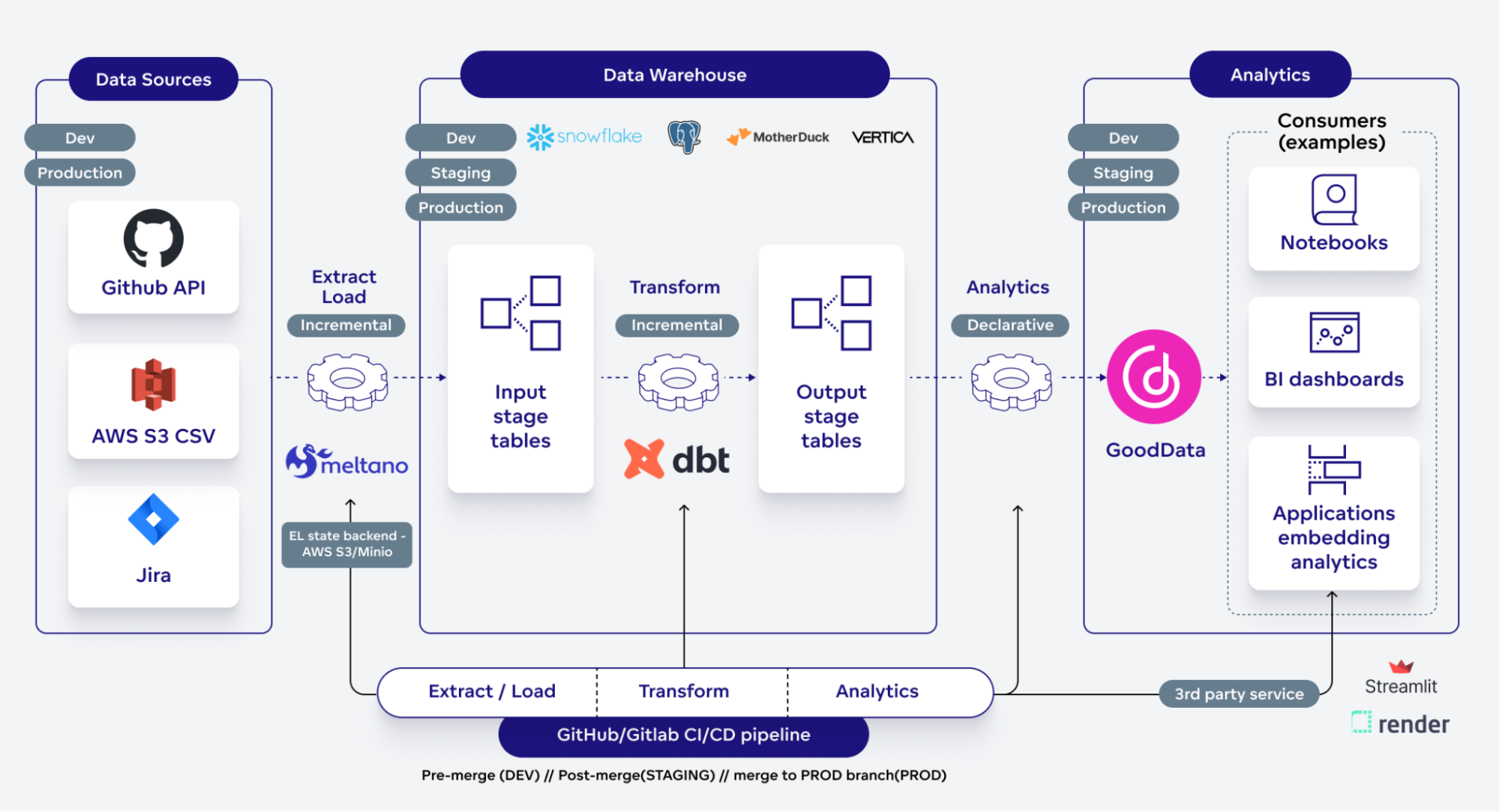

Everything started when my colleague Patrik Braborec published the article How to Automate Data Analytics Using CI/CD. Since then, I followed up with two articles: Extending CI/CD data pipeline with Meltano and more recently with Ready, set, integrate: GoodData-dbt integration is production-ready! The data pipeline has matured since the beginning of this journey, so let me show you the current state of it. Let’s call it CI/CD Data Pipeline Blueprint v1.0.

The pipeline follows these important concepts:

- Simplicity -Utilizes docker-compose up or Makefile targets for straightforward onboarding. Even a simple pull request can introduce a new data use case.

- Openness -Flexibility of swapping source connectors or loaders (DWH).

- Consistency -A single pull request can update each step: extract, load, transform, analytics, and custom data apps.

- Safety -Ensures gradual rollout in DEV, STAGING, and PROD environments, with extensive automatic tests.

In the article, we will:

- Deep dive into the above concepts.

- Demonstrate end-to-end change management on a particular pull request.

- Document the limitations and potential follow-ups.

Context

The blueprint source code is open-sourced with the Apache license in this repository.

Note: some of you may notice that the repository moved from GitLab to GitHub. I decided to provide a GitHub Actions alternative to GitLab CI. You can look forward to a follow-up article making a comprehensive comparison of these two leading vendors.

Simplicity

I'm a huge fan of simple onboarding - it's the heart of user/developer experience! That’s why we have two onboarding options:

- Docker-compose

- Makefile targets

Opt for the simplest route by running “docker-compose up -d”. This command fires up all essential long-term services, followed by executing the extract, load, transform, and analytics processes within the local setup.

Alternatively, executing make <target> commands, like make extract_load, does the same trick. This works across various environments - local or cloud-based.

To enhance the onboarding further, we provide a set of example .env files for each environment (local, dev, staging, prod). To switch environments, simply execute source .env.xxx.

Now, you might wonder where’s the pipeline. Well, I've got you covered with two exemplary pipeline setups:

Feel free to fork the repository and reuse the definition of the pipeline. As it’s a set of straightforward YAML definitions, I believe everyone can understand it and tweak it based on his/her needs. I've personally put them to the test with our team for internal analytics purposes, and the results were impressive! ;-)

Openness

Meltano, seamlessly integrating Singer.io in the middle of the extract/load process, revolutionizes switching both extractors(taps) and loaders(targets). Fancy a different Salesforce extractor? Just tweak four lines in meltano.yaml file, and voilá!

Want to replace Snowflake with MotherDuck for your warehouse tech? A similar, hassle-free process! And as a bonus, you can run both options side-by-side, allowing for a detailed comparison 😉

Some extractor/loader that doesn't quite meet your needs? No sweat. Fork it, fix it, direct meltano.yaml to your version, and await its merge upstream.

What if your data source is unique, like for one of our new clients with their APIs as the only source? Well, here you can get creative! You could start from scratch, or use the Meltano SDK to craft a new extractor in a fraction of the usual time. Now that’s what I’d call a productivity boost! 😉

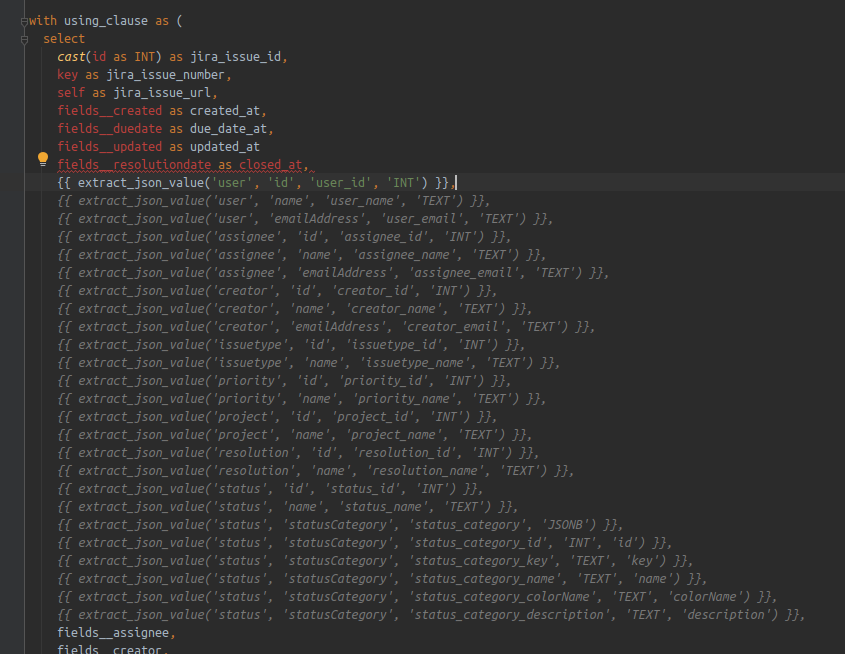

But wait, there's more – what about data transformations? Databases have their own SQL dialects, so when dealing with hundreds of SQL transformations, how can you switch dialects effortlessly?

Enter dbt with their Jinja macros. Replace specific commands, like SYSDATE, with macros (e.g., {{ current_timestamp() }}). Stay consistent with these macros, and changing database technologies becomes a breeze. And if you need a macro that doesn’t exist yet? Just write your own!

Here is a quick example of extracting values from JSON.

This flexibility offers another immense benefit - drastically reducing vendor lock-in. Unhappy with your cloud data warehouse provider's costs? A few lines of YAML are all it takes to transition to a new provider.

Now onto GoodData

GoodData effortlessly connects with most databases and uses database-agnostic analytics language. So no need to overhaul your metrics/reports with a ton of SQL rewrites; MAQL remains consistent regardless of the database.

And what if you’re eyeing a switch from GoodData to another BI platform? While we’d love to keep you with us, we are quite open in this case, no hard feelings.😉

Firstly, we provide full access through our APIs and SDKs. Want to transfer your data to a different BI platform using, say, Python? No problem!

Secondly, GoodData offers Headless BI. This means, that you can link other BI tools to the GoodData semantic model and perform reporting through it. This is incredibly useful when the new BI tool lacks an advanced semantic model and you want to maintain complex metrics without duplicities. It’s also very useful for lengthy migrations or consolidating multiple BI tools within your company. In short… Headless BI is your friend!

On a personal note, I'm actively involved in both the Meltano and dbt communities, contributing wherever I can, like speeding up Salesforce API discovery and adding Vertica support to Snowflake-labs/dbt constraints

Consistency

Consistency is the backbone of data pipeline management.

Ever made a single change in an SQL transformation, only for it to unexpectedly wreak havoc across multiple dashboards? Yeah, well, I did. It’s a clear reminder of how interconnected every element of an end-to-end pipeline truly is. Ensuring consistent delivery of changes throughout the entire pipeline is not a nice to have; it’s critical.

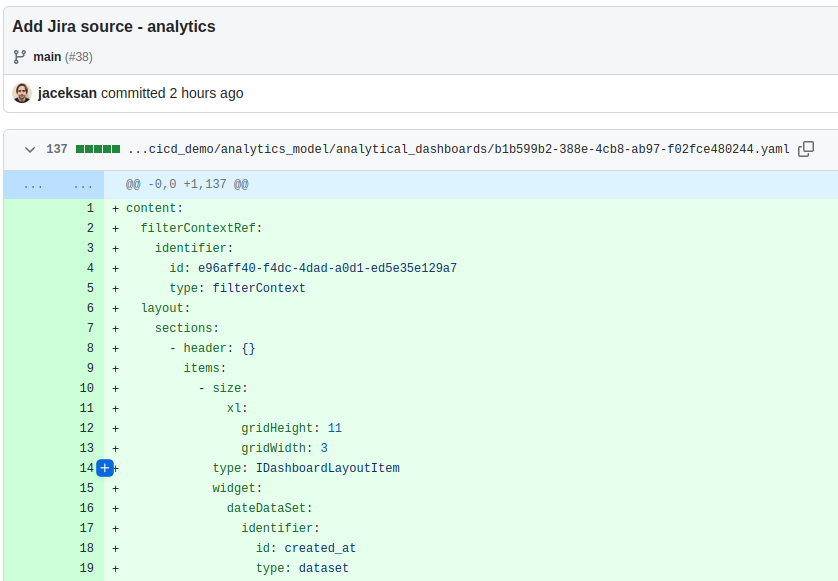

The go-to solution? Embedd all artifacts within a version control system like git. It’s not only about delivering the changes consistently, it opens up a world of possibilities, like:

- Upholding the ‘four eyes’ principle with code reviews

- Tracking and auditing changes - know who did what

- The ability to smoothly roll back to any preview version if needed

- Conduct thorough scans of the source code for potential security vulnerabilities.

However, all tools must support the ‘as-code’ approach. The ability to implement changes through code, leveraging appropriate APIs, is key. Tools that solely rely on a UI experience for business users don’t quite make the cut here.

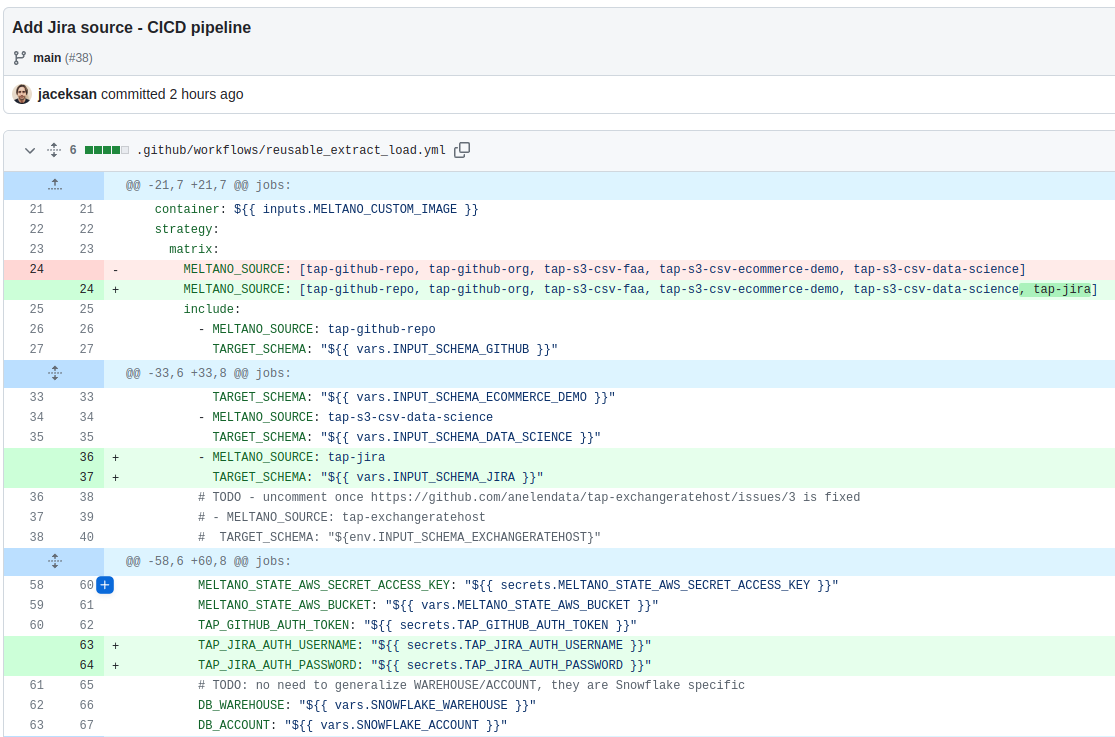

To give you a taste of how this works in practice, let's take a look at a recent Pull Request where we introduced a new source - Jira.

I'll dive into the specifics of this process in the demo chapter below.

Safety

Productivity thrives in a safe environment. Imagine being afraid to deliver a change to your data pipeline because you don’t know what you can break - a nightmare scenario.

Firstly, as mentioned in the consistency chapter, there’s significant value in being able to roll out a change end-to-end within a single pull request.

That's a big win, indeed! But that alone isn't enough. How can you be certain your changes won't disrupt the production environment, potentially even catching the CEO's eye during their dashboard review?

Let’s look at my approach based on a few key principles:

- Develop and test everything locally. Be it against a DEV cloud environment or a completely self-contained setup on your laptop.

- Pull Requests trigger CI/CD that deploys to the DEV environment. Here, tests run automatically in a real DEV setting. Code reviews are done by a different set of eyes. The reviewer may manually test the end-user experience in the DEV environment.

- Post-merge proceeds to the STAGING environment for further automated testing. Business end-users get a chance to test everything thoroughly here.

- Only when all stakeholders are satisfied, the pull request advances to a special PROD branch. After merging, the changes are live in the PROD environment.

- At any point in this process, if issues arise, a new pull request can be opened to address them.

But how can we ensure each environment runs in isolation? There are numerous strategies:

- For extract/load (Meltano) and transform (dbt), ensure they're executed against live environments.

- Implement dbt tests - they’re invaluable.

- Use the gooddata-dbt plugin CLI to run all defined reports.

As you can see, this system of isolated environments, adhering to the four-eyes principle, the capability to test in live settings, and more all contribute to significantly reducing risks. In my opinion, this framework is a safe working environment.

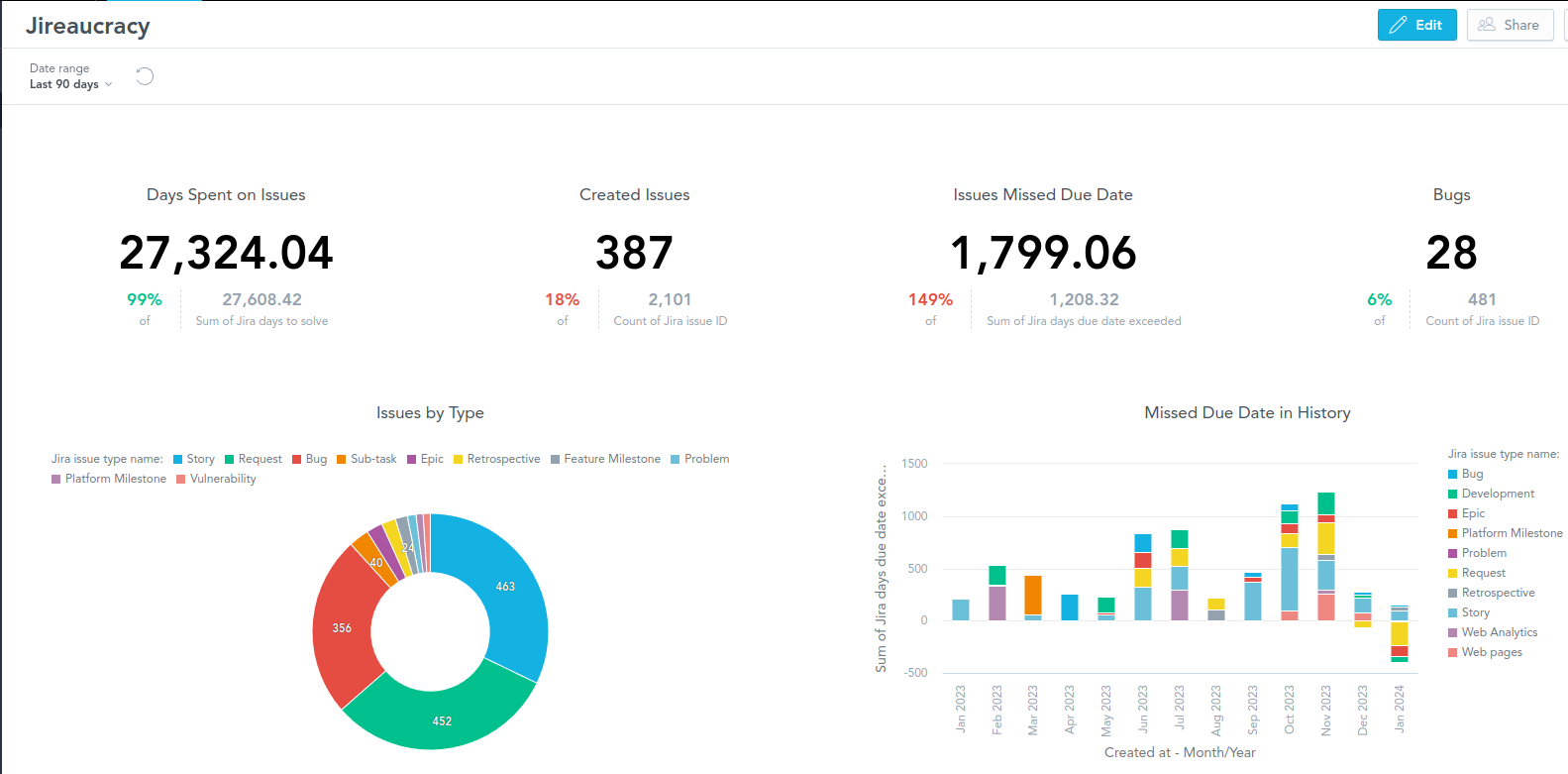

Demo - Jireaucracy

Recently, in our company, we've been battling with Jira tickets, leading to a new term - 'Jireaucracy'. It's a blend of seriousness and humor, and we developers, well, we enjoy a good troll.

So, I went on a mission to crawl Jira data and put together the first PoC for a data product. But here’s the thing - integrating such a complex source as Jira might seem out of the scope of this article, right? Jira’s data (domain) model is notoriously complex and adaptable, and its API? Not exactly user-friendly. So, you might wonder, is it even possible to integrate it (in a relatively short time) using my blueprint?

Absolutely! 😉

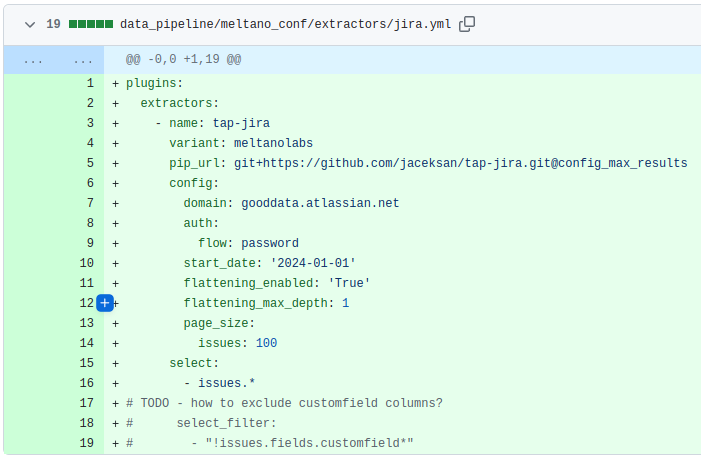

Enter the Meltano plugin tap-jira. I crafted a database-agnostic macro for extracting paths from JSON columns. We've got all the tools needed to build this!

Check out the pull request. It’s laid out step by step(commit by commit) for your review. Allow me to guide you through it.

Adding a new source cannot be easier!

I even implemented a missing feature to the tap-jira extractor - to allow configuring page_size for issues stream. Now I redirected pip_url to my fork, but I created a pull request to the upstream repository and notified the Meltano community, so soon I will redirect it back.

Update after two days: My contribution has been successfully merged into the main project! 🙂

The next step, adding the related transformation(s), gets complicated. But no fear - SQL is your friend here. It’s possible to do it incrementally. I copy-pasted a lot from an existing solution for GitHub. And guess what? Working increasingly with GitHub Copilot in this repository, I barely had to write any boilerplate code. Copilot was my trusty sidekick, suggesting most of it for me! 😀

The GoodData part? Piece of cake! The Logical Data Model is auto-generated by the gooddata-dbt plugin. I whipped up a new dashboard with six insights (that I believe are meaningful) in our dashboarding UI in just a few minutes. Then, I synced it as code (using gooddata-dbt store_model) to the repository.

And finally, let’s talk about extending the CICD pipeline. It was a walk in the park, really. Here’s the pipeline run, which was triggered even before the merge, allowing the code reviewer to click through the result (dashboard).

From start to finish, building the entire solution took me roughly 4 hours. This included chatting on Meltano Slack about potential improvements to the developer experience.

Just think about the possibilities – what a team of engineers could create in several days or weeks!

P.S. Oh, and yes, I didn’t forget to update the documentation (and corrected a few bugs from last time 😉).

Downsides & Follow-ups

Let's face it: there's no one-size-fits-all blueprint in the data world. This particular blueprint shines in certain scenarios:

- Handling smaller data volumes, think of millions of rows in your largest tables.

- Projects where advanced orchestration, monitoring, and alerting aren't critical.

But what if you need to scale up? Need to fully load billions of rows? Or manage thousands of extracts/loads, maybe per tenant, with varied scheduling? If that's the case, this blueprint, unfortunately, might not be for you.

Also, it's worth noting that end-to-end lineage doesn't get first-class treatment here. A feature I am missing is more nuanced cost control, like utilizing serverless architecture.

That’s why I plan to introduce an alternative blueprint, one I plan to develop and share with you all. Some thoughts brewing in my mind:

- Enhancing Meltano's performance. While Singer.io, its middle layer, offers the flexibility to switch extractors/loaders, it does come at the price of performance. Is there a way to rework Singer.io to overcome this? I'm all in for contributing to such a venture!

- Exploring a more robust alternative to Meltano. I'm currently in talks with folks from Fivetran, but the quest for an open-source equivalent is still ongoing.

- Integrating an advanced orchestration tool, ideally, one that champions end-to-end lineage along with monitoring and alerting capabilities. Currently, I have Dagster on my radar.

Final Words

And there you have it - the first blueprint is now in your hands. I truly hope you find value in it and give it a try in your projects. If you have any questions, please don’t hesitate to contact me or my colleagues. I also recommend joining our Slack community!

Stay tuned here for more and upcoming articles. I encourage you to get involved in the Meltano/dbt/Dagster/… open source communities. Your contributions can make a significant difference. The impact of open source is far-reaching – just consider how open-source Large Language Models, which are at the heart of today’s generative AIs, are making waves! Let's be part of this transformative journey together.

Try Blueprint or GoodData Yourself!

The open-source blueprint repository is here - I recommend you to try it with our free trial here.

If you are interested in trying GoodData experimental features, please register for our Labs environment here.