Ready, set, integrate: GoodData-dbt integration is production-ready!

We proudly announce that GoodData and dbt (Cloud) integration is now production-ready!

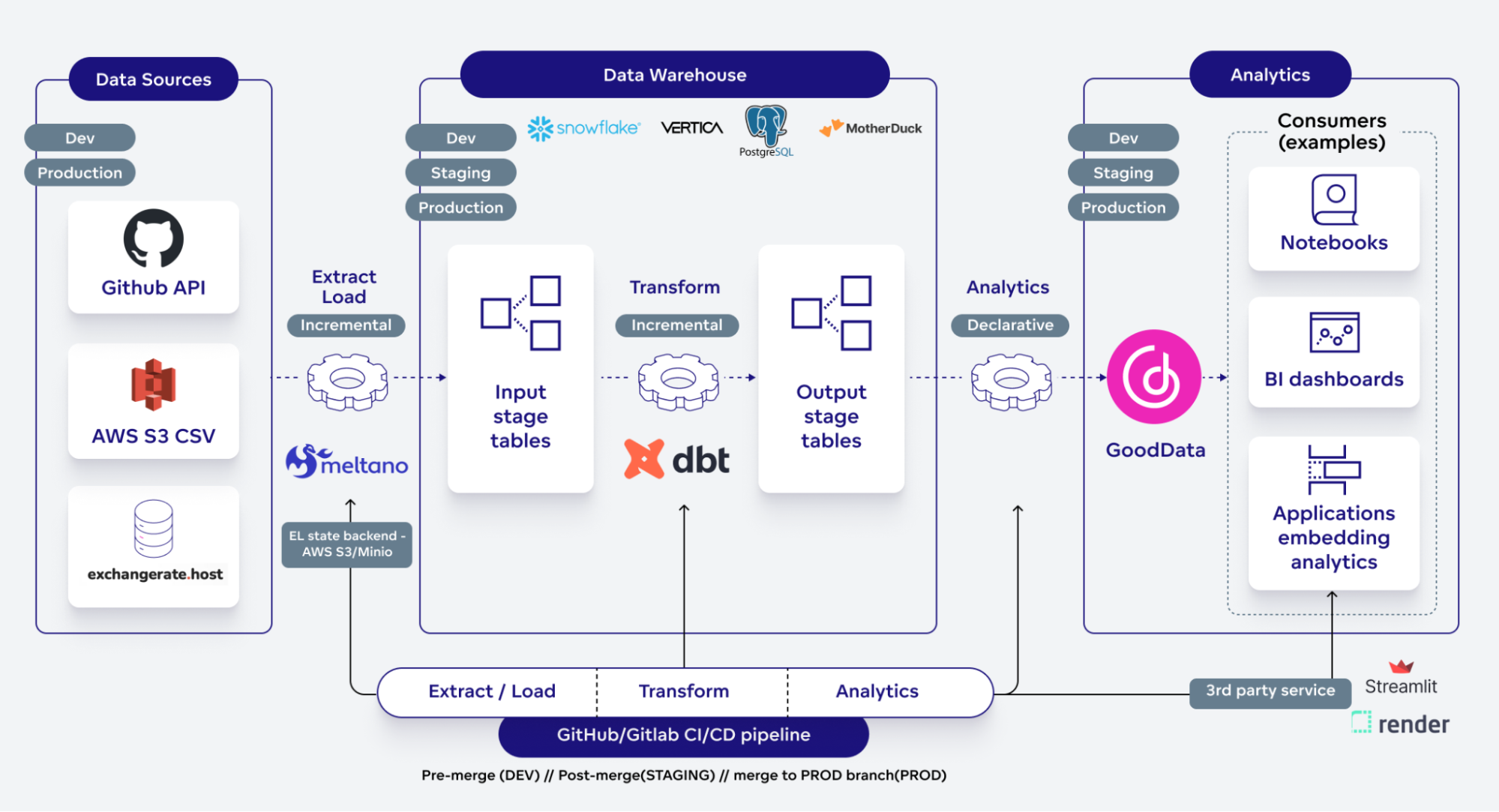

This article demonstrates all the related capabilities. The integration is part of a data pipeline blueprint, already described in several articles:

We back it up with an open-source repository containing this data stack:

End-to-end data pipeline. Starting with extracting data from various data sources and ending up with a standard dashboarding tool, custom data app, or (ML) notebook. Everything is consistent because it is stored, versioned, and orchestrated by GitHub or GitLab.

Note: All links to the open-source repo lead to GitHub. Older articles point to the GitLab repository. They are alternatives - the data pipeline source code is the same, but the CI pipeline definition is different.

dbt is …

Let’s be brief, as many of you are likely familiar with dbt.

dbt (never use upper-case!) became a de facto standard for the T (Transform) in ELT because:

- It’s open-source, making it accessible and adaptable for a wide range of users

- Dbt Labs built an incredible community

- It is easy to onboard and use - perfect documentation and examples

- Jinja macros - decoupling from SQL dialects, generally do anything non-SQL

- First-class support for advanced T use cases, e.g., incremental models

Once dbt became a “standard”, they expanded their capabilities by introducing metrics. First, they built them in-house, but they weren’t successful. So they deprecated them, acquired Transform, and embedded MetricFlow metrics into dbt models.

Users can now execute metrics through APIs and store results in a database. Many companies compete in this area, including GoodData — we’ll see if dbt succeeds here.

dbt is now trying to build a SaaS product called dbt Cloud — with some of the features, like metrics, available only in the Cloud version of their product.

Dbt also holds the dbt Coalesce conference every year. We attended the last one, and I had a presentation there, briefly describing what I will talk about in this article.

The GoodData team at Coalesce

Me presenting the end-to-end data pipeline.

Dilemma - level of integration of BI and dbt

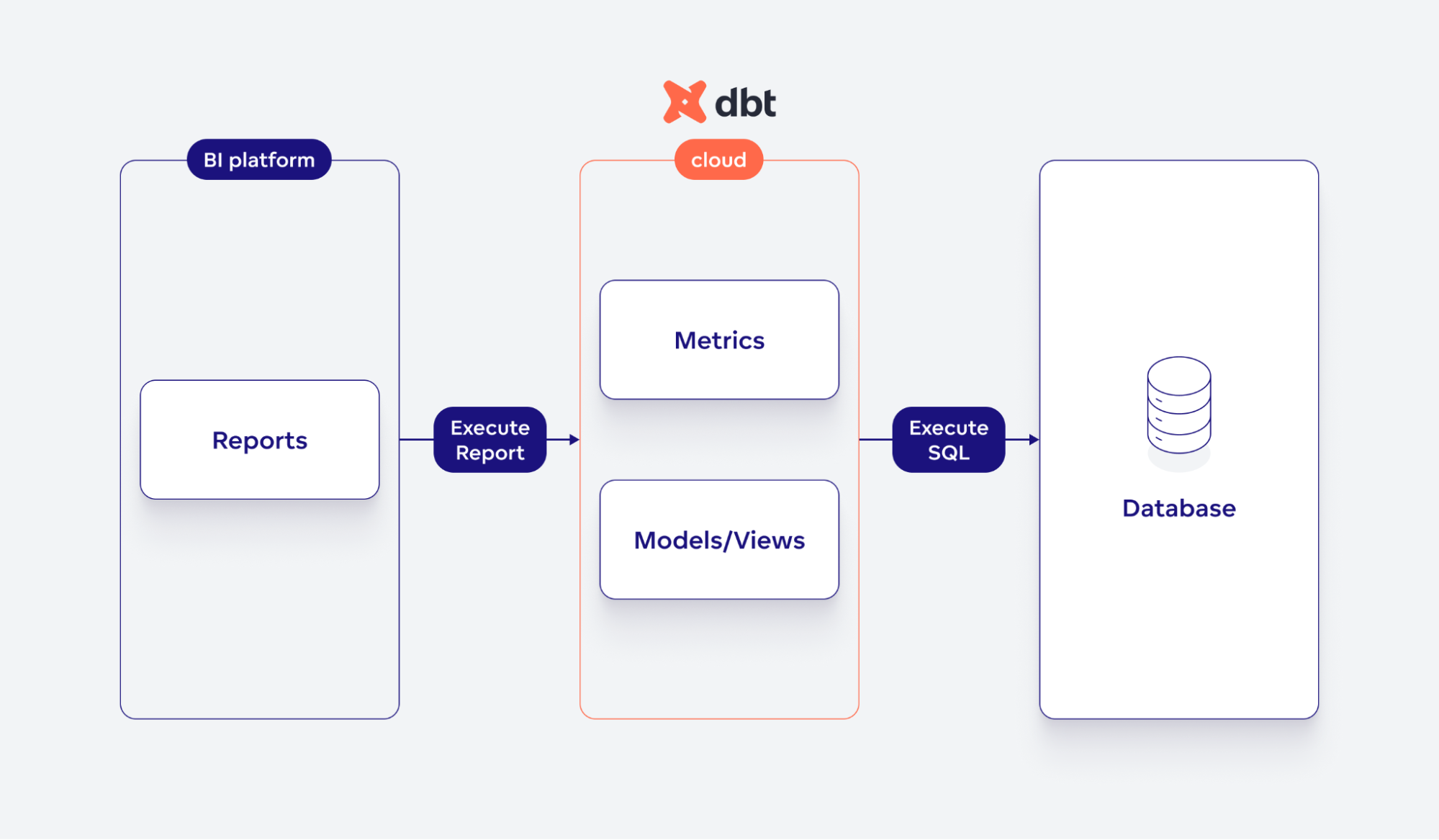

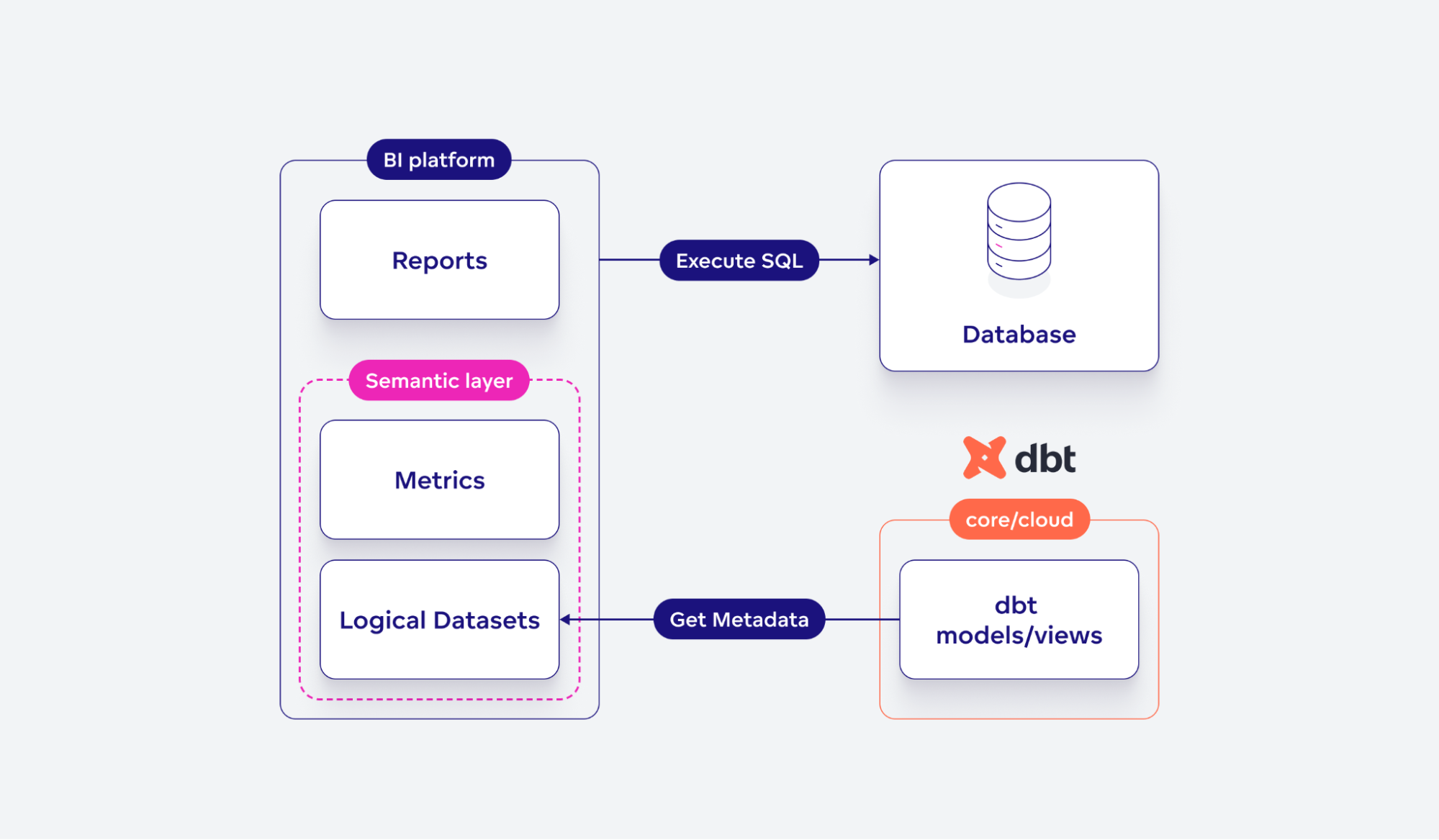

Generally, there are two options for how BI platforms can integrate with dbt:

- Complete integration with dbt Cloud, including semantic layer APIs

- Partial, generating BI semantic layer from dbt models

The option depends on whether a BI platform contains its semantic layer, which provides significant added value compared to the dbt semantic layer. We believe this is the case with GoodData - we have been developing our semantic layer for over ten years, while the dbt semantic layer went GA a few months ago.

We have also developed a sophisticated caching layer utilizing technologies such as Apache Arrow. I encourage you to read How To Build Analytics With Apache Arrow.

However, the dbt semantic layer can mature over time, and adoption can grow. We already met one prospect who insisted on managing metrics in dbt (not because they are better but because of other external dependencies). If more and more prospects approach us this way, we may reconsider this decision. There are two possible next steps:

- Automatically convert dbt metrics to GoodData metrics

- Integrate with dbt Cloud APIs, let dbt generate SQL and manage all executions/caching

You can review the architecture for each option:

BI platform does not contain any semantic layer and does not generate SQL from metrics. It fully integrates with dbt Cloud APIs, which can generate SQL from metrics (in context), execute SQL, and cache results.

BI platform contains a semantic layer. It can generate SQL from metrics and a logical data model(datasets). It can execute SQL and cache results.

Generating BI semantic layer from dbt models

The semantic layer, as we perceive it, consists of two parts:

- Logical Data Model (LDM - datasets) on top of the physical data model(tables)

- Metrics aka Measures

Why waste time on LDM? We think extending tables with semantic information is crucial to allow end users to be self-service.

Specifically:

- Distinguish attributes, facts, and date(time) dimensions so they are used in the right context

- Decouple analytics objects (metrics, reports, etc…) from tables

- Moving a column to a different table. With LDM, you don’t have to update analytical objects, just the mapping between LDM and tables

In this case, we want to generate our LDM(datasets) from dbt models(tables). Then users can create our MAQL metrics conveniently - thanks to LDM, we can provide a more powerful IntelliSense, e.g., we suggest attributes/facts in relevant positions in metrics or suggest filter values.

Moreover, we generate definitions of data sources from the dbt profile file(from targets/outputs), so users do not have to redundantly specify data source properties(host, port, username, …).

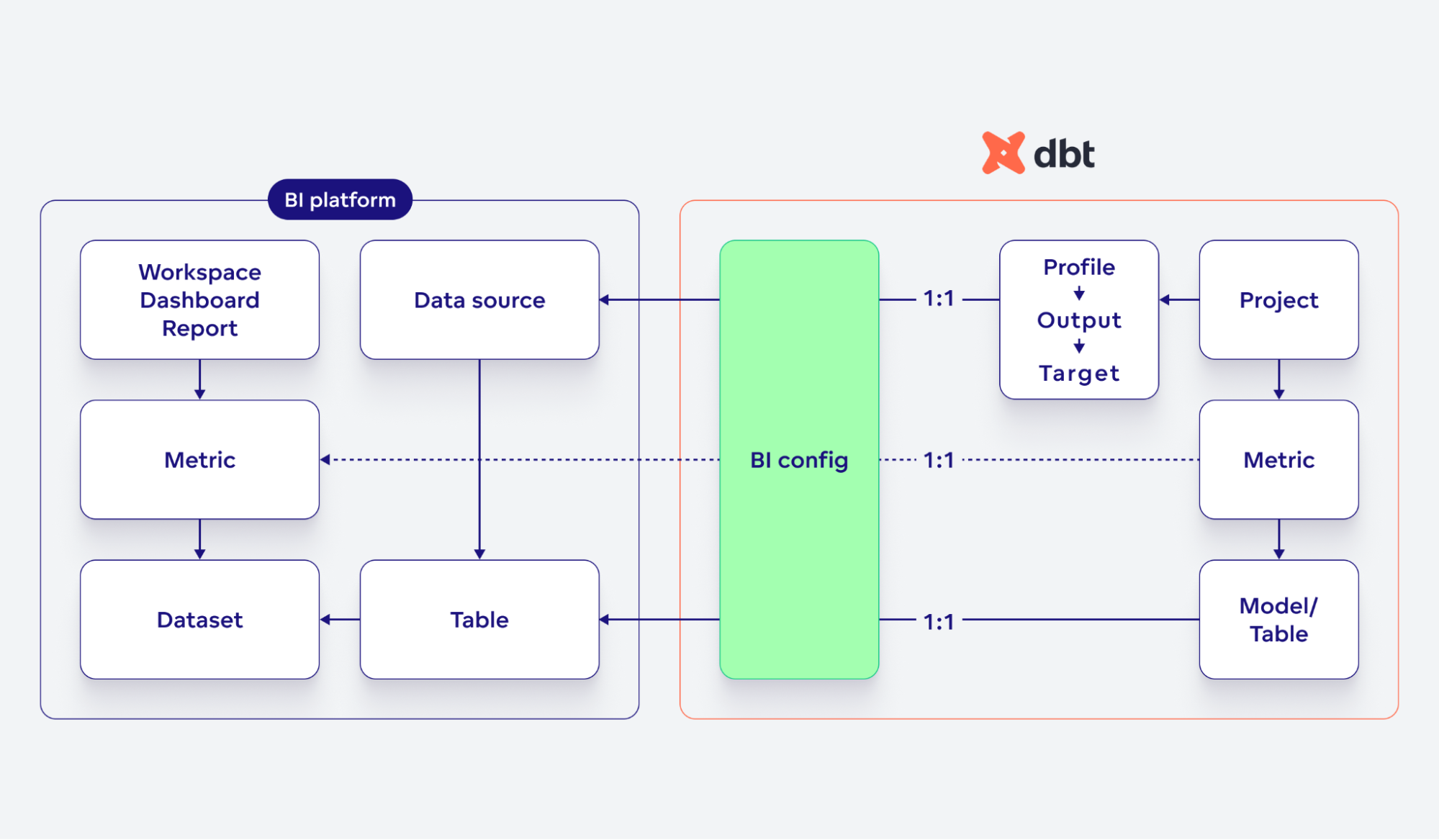

Here is a big picture describing relationships between dbt and BI entities:

Relationships between BI and dbt objects. They are mapped to each other in a BI config file.

Developer experience

So, what does the integration look like?

We always focus on developers first. We extended our (open-source) Python SDK because Python language is the first-class citizen in the dbt world.

We released a new library, gooddata-dbt, based on the gooddata-sdk core library.

How can developers embed the new library into their data pipelines?

We extended the data pipeline blueprint accordingly to demonstrate it. The library now provides gooddata-dbt CLI, which you can execute in a pipeline. The CLI has all the necessary operations developers need. The CLI accepts various (documented) arguments and a configuration (YAML) file.

Configuration

You can find the example configuration file here. Let’s break it down.

The blueprint recommends delivering into multiple environments:

- DEV - before the merge, supports code reviews

- STAGING - after the merge, supports business testers

- PROD - merge to a dedicated PROD branch, supports business end users

Corresponding example configuration:

environment_setups:

- id: default

environments:

- id: development

name: Development

elt_environment: dev

- id: staging

name: Staging

elt_environment: staging

- id: production

name: Production

elt_environment: prod

It is possible to define multiple sets of environments and link them to various data products.

Then you configure so-called data products. They represent an atomic analytical solution you want to expose to end users. The CLI creates a workspace (isolated space for end users) for each combination of data product and environment, e.g., Marketing(DEV), Marketing(STAGING), …, Sales(DEV), …

Corresponding example configuration:

data_products:

- id: sales

name: "Sales"

environment_setup_id: default

model_ids:

- salesforce

localization:

from_language: en

to:

- locale: fr-FR

language: fr

- locale: zh-Hans

language: "chinese (simplified)"

- id: marketing

name: "Marketing"

environment_setup_id: default

model_ids:

- hubspot

# If execution of an insight fails, and you need some time to fix it

skip_tests:

- "<insight_id>"

You link each data product with the corresponding environment setup. Model IDs link to corresponding metadata in dbt models. The localization section allows you to translate workspace metadata to various languages automatically. With the skip_tests option, you can temporarily skip testing broken insights(reports), which gives you (or even us if there is a bug in GoodData) time to fix it.

Finally, you can define which GoodData organizations (DNS endpoints) you want to deliver the related data products. It is optional - it is still possible to define GOODDATA_HOST and GOODDATA_TOKEN env variables and deliver the content to only one organization.

organizations:

- gooddata_profile: local

data_product_ids:

- sales

- gooddata_profile: production

data_product_ids:

- sales

- marketing

You can deliver data products to multiple organizations. Each organization can contain a different (sub)set of products. The gooddata_profile property points to a profile defined in gooddata_profiles.yaml file. You can find more in our official documentation.

Provisioning workspaces

Workspaces are isolated spaces for BI business end users. The CLI provisions a workspace for each data product and environment, e.g., Marketing(STAGING):

gooddata-dbt provision_workspaces

Registering data sources

We register a data source entity in GoodData for each output in the dbt profiles.yml file. It contains all properties necessary to connect to the corresponding database.

gooddata-dbt register_data_sources $GOODDATA_UPPER_CASE --profile $ELT_ENVIRONMENT --target $DBT_TARGET

If you fill GOODDATA_UPPER_CASE with “–gooddata-upper-case,” the plugin expects that the DB object names (tables, columns, …) are exposed upper-cased from the database. Currently, this is the case only for Snowflake.

--profile and –target arguments point to the profile and the target inside the profile in the dbt_profiles.yml file, from which the GoodData data source entity is generated.

Generating Logical Data Model(LDM)

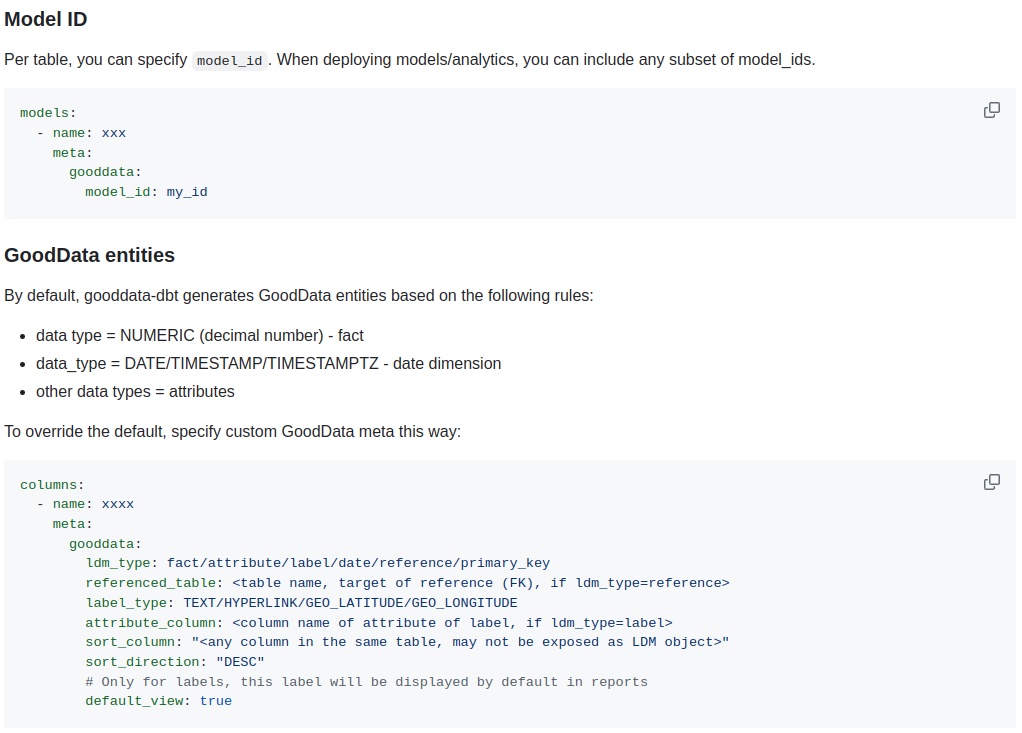

The CLI generates a logical dataset for each dbt model(table). The configuration (see above) defines which tables are included (labeled by a model_id in the meta section). You can improve the accuracy of the generation by setting GoodData-specific metadata in corresponding dbt model files(DOC in the repo).

Documentation for how dbt models can be extended by GoodData-specific metadata.

How to execute it:

gooddata-dbt deploy_ldm $GOODDATA_UPPER_CASE --profile $ELT_ENVIRONMENT --target $DBT_TARGET

The meaning of arguments is the same as in the previous chapter.

Invalidating GoodData caches

When dbt executions update the tables included in the LDM, GoodData caches are no longer valid. So once you execute dbt CLI and update the data, you must invalidate corresponding caches in GoodData.

How to invalidate:

gooddata-dbt upload_notification --profile $ELT_ENVIRONMENT --target $DBT_TARGET

The meaning of the arguments is the same as in the previous chapter

End-to-end example

We need to:

- Build a custom dbt docker image, including the needed plugins

- Execute dbt models with dbt-core or dbt Cloud(API)

- Provision the related GoodData artifacts (Workspaces, LDM, DataSource definitions)

Here is the source code of the related GitHub workflow:

on:

workflow_call:

inputs:

# ...

jobs:

reusable_transform:

name: transform

runs-on: ubuntu-latest

environment: $

container: $

env:

GOODDATA_PROFILES_FILE: "$"

# ...

steps:

# ... various preparation jobs

- name: Run Transform

timeout-minutes: 15

if: $false

run: |

cd $

dbt run --profiles-dir $ --profile $ --target $ $

dbt test --profiles-dir $ --profile $ --target $

- name: Run Transform (dbt Cloud)

timeout-minutes: 15

if: $false

env:

DBT_ACCOUNT_ID: "$"

# ...

run: |

cd $

gooddata-dbt dbt_cloud_run $ --profile $ --target $

- name: Generate and Deploy GoodData models from dbt models

run: |

cd $

gooddata-dbt provision_workspaces

gooddata-dbt register_data_sources $ --profile $ --target $

gooddata-dbt deploy_ldm $ --profile $ --target $

# Invalidates GoodData caches

gooddata-dbt upload_notification --profile $ --target $

The full source code can be found here. It’s a reusable workflow triggered by other workflows for each “context” - environment(dev, staging, prod) and dbt-core/dbtCloud.

Thanks to the reusability of workflows, I can define scheduled executions simply as:

name: Extract, Load, Transform, and Analytics (Staging Schedule)

on:

schedule:

- cron: "00 4 * * *"

jobs:

elta:

name: ELTA (staging)

uses: ./.github/workflows/reusable_elta.yml

with:

ENVIRONMENT: "staging"

BRANCH_NAME: "main"

DEPLOY_ANALYTICS: "false"

secrets: inherit

Reminding you again: dbt models do not provide all the semantic properties that mature BI platforms need. For example:

- Both business title and description for Table/column names (only description is supported)

- Distinguish between attributes and facts (and so-called labels in the case of GoodData)

- Primary/foreign keys - dbt provides it only via external libs, and it’s not fully transparent

We decided to utilize meta sections in dbt models to allow developers to enter all the semantic properties. The full specification of what is possible can be found here. We are continuously extending it with new features - if you are missing anything, please do not hesitate to contact us in our Slack community.

How does it work under the hood?

The gooddata-dbt library (and the CLI) is based on our Python SDK, which can communicate with all our APIs and provide higher-level functionalities.

Regarding the dbt integration - we decided to parse profiles.yml and manifest.json files. Unfortunately, there is no higher-level “API” exposed by dbt - nothing like our Python SDK. dbt Cloud provides some related APIs, but they do not expose all the metadata we need (which is stored in the manifest.json file).

First, we read the profiles and manifest them to Python data classes. Then, we generate GoodData DataSource/Dataset data classes, which can be posted to a GoodData backend through Python SDK methods (e.g., create_or_update_data_source).

By the way - the end-to-end data pipeline also delivers analytical objects (metrics, insights/reports, dashboards) in a separate job. The gooddata-dbt CLI provides a distinct method (deploy_analytics) for it. Moreover, it provides a test_insights method that executes all insights and therefore validates that all insights are valid from a metadata consistency point of view and are executable. It would be easy to create results fixtures and test that even results are valid.

Integration with dbt Cloud

We integrate with dbt Cloud APIs as well. Gooddata-dbt plugin can trigger a job in dbt Cloud as an alternative to running dbt-core locally. It collects all necessary metadata (manifest.json, equivalent to profiles.yml), so the GoodData SDK can utilize it like it does when running dbt-core locally.

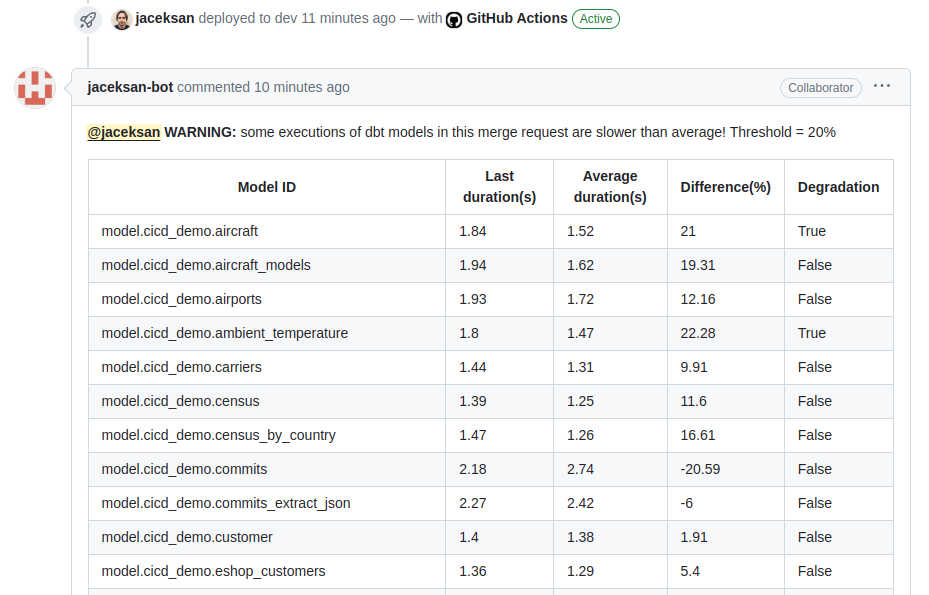

Moreover, We implemented integration with additional dbt Cloud APIs, providing information about the history of executions. We integrated it with a function that can add comments to GitLab or GitHub. The use case here is to notify developers about the performance regressions.

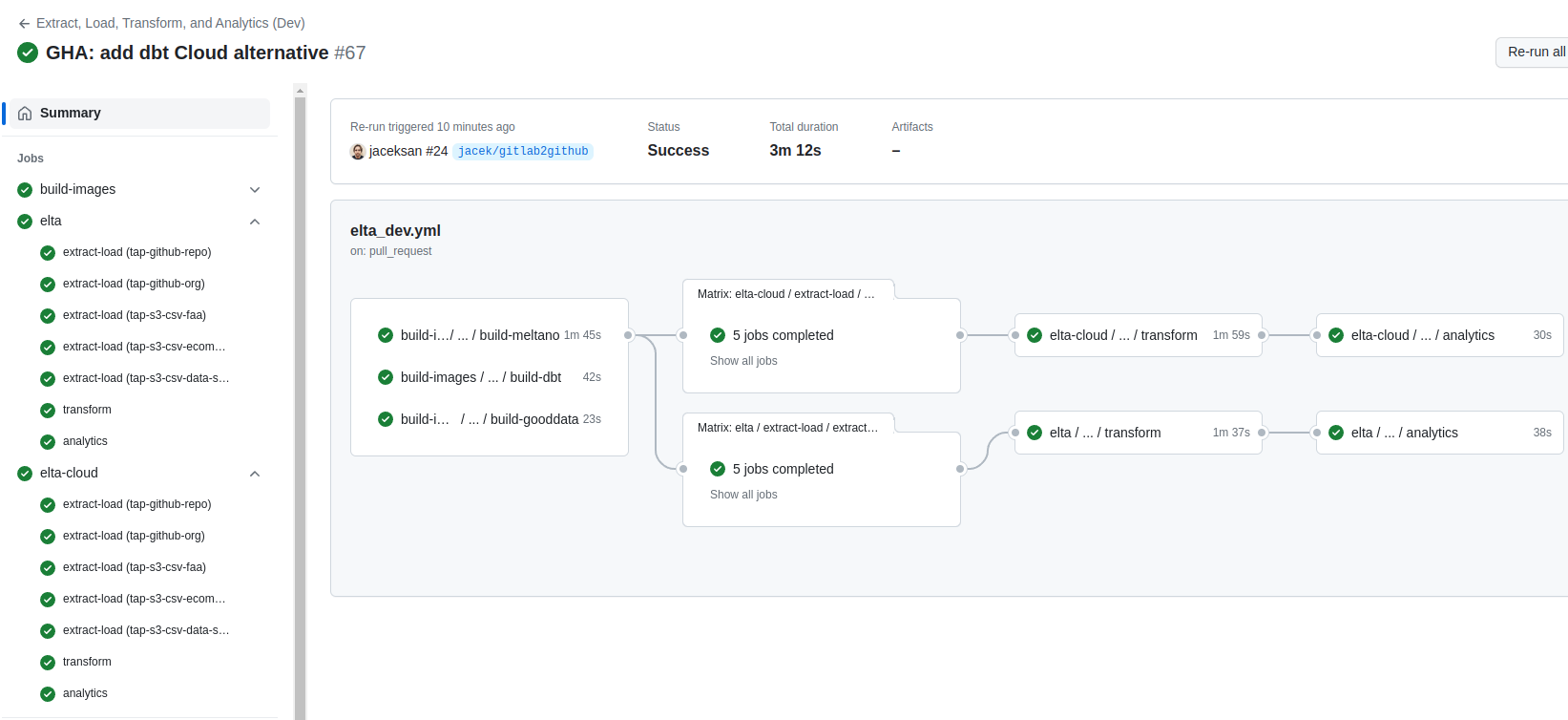

We extended the already mentioned demo already mentioned accordingly. As a side effect, there is now an alternative to the GitLab CI pipeline - the GitHub Actions pipeline. Now all steps (extract, load, transform, analytics) are running twice against different databases in a Snowflake warehouse - one with dbt-core and one with dbt Cloud:

Jobs running in GitHub actions UI. In this case, they were triggered before merge. Example pipeline for pull request Add dbt Cloud variant to staging/prod pipelines.

The notification about performance degradations is populated as a GitHub comment by my alter-ego bot:

A notification about performance degradations is populated as a GitHub comment by my alter-ego bot for each dbt model. It is just an example. We could even block the merge, integrate with Slack as well, etc.

Benefits from dbt Cloud:

- You don’t need to run dbt-core in workers of git vendors

- You don’t need to build a custom dbt docker image with a specific set of plugins

- Metadata, e.g., about executions, can be used for valuable use cases (e.g., already mentioned notifications about performance regressions)

- dbt Cloud advanced features. I like the recently released dbt Explore feature most - it provides real added value for enterprise solutions consisting of hundreds of models.

Pain points when integrating dbt Cloud:

- You have to define variables twice. For example, DB_NAME is needed not only by dbt but also by extract/load tool (Meltano, in my case) and BI tools. You are not alone in the data stack!

- No Python SDK. You have to implement wrappers on top of their APIs. They should inspire themselves by our Python SDK - provide OpenAPI spec, generate Python clients, and provide an SDK on top of that 😉

What’s next?

As already mentioned:

- Integrate all dbt Cloud APIs, and let dbt generate and execute all analytical queries (and cache results)

We are closely watching the maturity and adoption of the dbt semantic layer - Adopt additional relevant GoodData analytical features in the gooddata-dbt plugin

Also, my colleagues are intensively working on an alternative approach to the GoodData Python SDK - GoodData for VS Code. They provide not only an extension but also a CLI. The goal is the same: an as-code approach.

But the developer experience can go one level higher - imagine you can maintain dbt and GoodData code in the same IDE with complete IntelliSense and cross-platform dependencies. Imagine you delete a column from a dbt model, and all related metric/report/dashboard definitions become invalid in your IDE! Technically, we are not that far from this dream.

Try It Yourself!

In general, try our free trial.

If you are interested in trying GoodData's experimental features, please register for our Labs environment here.