Security and AI in Financial Services: Balancing Innovation with Risk Management

Summary

This article breaks down AI security for banks, fintechs, and payment providers. It covers common threats, compliance requirements, and the guardrails that prevent data leaks and untraceable decisions across AI agent workflows. You’ll also learn how to apply these controls to high-impact use cases like fraud detection, capital markets, and financial reporting. For more on AI implementation, check out our AI Transformation Playbook for Financial Services and Fintech.

Understanding AI Security Risks in Banking and Financial Services

AI security in banking involves striking a balance between the need for rapid AI adoption and the requirement to maintain strict control over customer data, financial transactions, and regulatory decisions. The biggest problems are not usually “mystery hacks.” They are basics like failing to meet security requirements, unclear data access rules, weak monitoring, and no single owner when an AI feature fails to meet expectations.

That reality is why so many generative AI efforts falter. An MIT report has put the share of pilots that fail to show measurable impact at 95%. And in financial services, even a stalled pilot can create real exposure if it touches sensitive datasets, expands permissions, introduces a new vendor integration, or encourages people to use unapproved tools.

The takeaway is simple: treat AI risk management in finance like part of the product, especially if you plan to build an AI agent that queries data, drafts decisions, or triggers workflows. Create the guardrails early, including access control, logging, and approvals, so you can scale the use cases that matter without betting the bank on “we’ll fix it later.”

The Unique Security Landscape of Financial AI Systems

Financial AI systems are higher risk because a small error can turn into a real money and regulatory problem. In digital banking, being “wrong” can mean a legitimate payment gets declined, fraud gets approved, a loan is priced badly, or a compliance flag is missed and later questioned by auditors.

AI also changes how failures spread. If a control is weak, automation can repeat the same mistake at high volume before a human spots the pattern. A recent study found that U.S. bank holding companies with higher AI investment experienced greater losses tied to fraud and system failures. That is why cybersecurity in digital banking is not only about stopping attackers, but about preventing fast, silent failures inside trusted systems.

Common AI Security Threats in Financial Institutions

The most common AI security threats in financial institutions include data poisoning, adversarial prompts, model drift, weaknesses in third-party vendors, and insider misuse of AI-enabled access.

The good news is that most of these threats are predictable, which means you can plan for them and engineer them out. The key is to treat your models, data pipelines, and access paths like security-critical infrastructure, with the same discipline you apply to payment rails or customer identity systems.

Here’s a closer look at the AI security threats that show up most often in banks, fintechs, and payment providers:

- Data poisoning and model manipulation: Attackers skew training data, feedback loops, or labels to nudge outcomes in their favor.

- Adversarial attacks: Inputs are crafted to fool a model into approving, denying, or misclassifying activity.

- Model drift and degradation: Performance drops as fraud tactics, products, and customer behavior change.

- Third-party AI vendor vulnerabilities: Insecure integrations, unclear data retention, and weak access controls expand your attack surface.

- Insider threats amplified by AI access: Legitimate users can suddenly query, summarize, or extract sensitive data at speed.

- Sensitive data exposure: Private or regulated user data is sent to external AI models (e.g., LLMs) instead of remaining within controlled infrastructure, increasing the risk of leakage, retention, or misuse.

AI-powered threat detection helps, but it is not enough on its own. In regulated workflows, you also need controls that can explain decisions and prove what data was used; otherwise, a fast answer becomes a compliance risk you cannot defend.

The video below demonstrates how to safely build a fraud detection agent in a data intelligence platform:

The Challenge of Fragmented and Guarded Data

Fragmented data makes secure data sharing in financial AI systems difficult because access rules differ across teams, systems, and regions. Banks and payment providers often keep fraud, credit, operations, and support data in separate environments, including legacy platforms that were not built for model training.

Privacy and residency rules tighten the limits. Free-text fields like transaction notes and customer messages can include sensitive information and should not end up in prompts, logs, or unapproved tools.
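One way to keep free-text fields out of prompts and logs is to redact them before they leave the governed environment. The sketch below is a minimal illustration, not a complete detector: the three regular expressions and the `redact` helper are assumptions made for this example, and a production setup would rely on a vetted PII and card-data detection service.

```python
import re

# Hypothetical patterns for illustration only. They catch common shapes of
# IBANs, card numbers, and email addresses, and will miss many real cases.
PATTERNS = {
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(text: str) -> str:
    """Replace every match with a placeholder such as [CARD]."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


note = "Refund to card 4111 1111 1111 1111, contact jane.doe@example.com"
print(redact(note))  # Refund to card [CARD], contact [EMAIL]
```

Running the redaction step before prompt construction, and again before anything is written to a log, means a single missed call site is less likely to expose raw values.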

Federated learning can reduce this friction by training on distributed data without centralizing raw records, which supports stronger financial data governance. For more information, refer to our AI Maturity Model, which illustrates how financial organizations can transition from siloed reporting to governed AI at scale.

Data Privacy and Protection in Financial AI Systems

Data privacy in financial AI systems means AI can help without exposing sensitive data. Financial data protection with AI comes down to three key principles: limiting access, controlling where data moves, and keeping sensitive fields out of prompts, logs, and outputs unless absolutely necessary.

Privacy-Preserving AI Techniques for Finance

Privacy-preserving AI techniques for finance minimize the risk of models disclosing customer data while still generating reliable insights. These are the most common building blocks for secure AI models and responsible AI practices in regulated environments:

- Differential privacy in financial analytics: Adds carefully calibrated noise to outputs so insights remain useful, but individual records cannot be reverse-engineered.

- Homomorphic encryption for sensitive data: Allows for limited computations on encrypted data, thereby protecting high-risk fields during specific analytical operations.

- Federated learning for distributed insights: Trains models across separate environments so raw data stays local while only model updates move between systems.

- Synthetic data generation for AI training: Creates statistically similar datasets that support testing and prototyping without exposing real customer records.

- Tokenization and pseudonymization strategies: Replace direct identifiers with tokens, reducing exposure in training pipelines, prompts, logs, and downstream tools.
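To make the first item in the list above more concrete, here is a minimal sketch of differential privacy applied to a simple count, using the Laplace mechanism. The function names, the sample amounts, and the `epsilon` value are assumptions chosen for illustration; real deployments also need privacy budget accounting across queries, which this sketch leaves out.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the values that satisfy the predicate.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical transaction amounts; how many exceed 10,000?
amounts = [120.0, 15_000.0, 9_800.0, 22_500.0, 40.0, 11_200.0]
rng = random.Random(42)
noisy = private_count(amounts, lambda a: a > 10_000, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller `epsilon` adds more noise and gives stronger privacy, so the value is a policy decision rather than a purely technical one.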

A simple way to sanity check your approach is to ask this: if an internal user copied the model output into a ticket, an email, or a chat, would it still be safe? If the answer is not a clear ‘yes’, tighten the privacy layer before scaling.

Zero Trust Architecture in AI Deployment

Zero-trust architecture in AI means you never assume an AI system, agent, or integration is safe just because it is inside your network. Every request should be authenticated, authorized, and monitored.

For secure AI deployment:

- Apply zero-trust principles by using strong identity and access management for AI agents.

- Continuously verify behavior with logging and anomaly alerts, and micro-segment AI workloads to prevent a single compromise from spreading.

- Enforce least privilege access so models only see the data and actions needed for their task.
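The principles above can be sketched as a per-request check: every call an agent makes is authorized against explicitly granted scopes and logged, whether it succeeds or not. The `AgentIdentity` class, the scope format, and the resource names are hypothetical and exist only for this example.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("agent_access")


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    scopes: frozenset  # explicitly granted "action:resource" pairs


def authorize(agent: AgentIdentity, action: str, resource: str) -> bool:
    """Allow a request only if the exact scope was granted; log every decision."""
    required = f"{action}:{resource}"
    allowed = required in agent.scopes
    log.info("agent=%s request=%s allowed=%s", agent.agent_id, required, allowed)
    return allowed


fraud_agent = AgentIdentity("fraud-triage-01", frozenset({"read:fraud_scores"}))
print(authorize(fraud_agent, "read", "fraud_scores"))      # True
print(authorize(fraud_agent, "read", "customer_profiles"))  # False
print(authorize(fraud_agent, "write", "fraud_scores"))      # False
```

Nothing is inferred from network location or from earlier successful calls, which is the core of the zero-trust idea.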

AI Governance and Compliance in Financial Services

AI governance in financial services is the set of policies, controls, and evidence that proves your AI systems are safe, fair, secure, and compliant. This is rarely about one law. It is about aligning multiple frameworks so the same security controls and documentation satisfy regulators, auditors, and customers in every market you operate in.

A useful way to think about regulatory AI compliance is this: privacy rules govern what data you can use and how you can use it. AI laws govern how risky systems must be built, tested, and overseen. Resilience rules govern how you prevent outages, contain incidents, and manage third parties.

The Four Pillars of AI Governance in Finance

The four pillars of AI governance in finance are observability, explainability, auditability, and accountability. These pillars make AI model behavior measurable, decisions defensible, and ownership clear.

- Observability means real-time AI model monitoring and alerting that surfaces performance drops, drift, and abnormal behavior as they happen. It should quickly show what changed, where it changed, and which products, processes, or customers were affected.

- Explainability means AI-driven insights can be understood and defended by humans. Model transparency and interpretability help teams justify outcomes to regulators, auditors, and internal reviewers, especially for high-impact decisions.

- Auditability means you can reconstruct any decision end-to-end after the fact. This requires complete data lineage, model and feature versioning, and decision trails that show which data sources, rules, and models influenced the outcome.

- Accountability means clear ownership and responsibility chains. Each model and use case needs a named owner who approves changes, sets controls, and coordinates incident response when issues appear.

Navigating Overlapping Compliance Frameworks

Navigating overlapping compliance frameworks means running one AI control program that satisfies privacy, AI-specific regulation, operational resilience, and financial reporting controls at the same time.

In practice, the fastest path is “build once, prove many times,” using a shared set of policies, logs, and evidence that each framework can reuse.

Here is how the main compliance frameworks typically show up in financial AI programs:

| Framework | What it focuses on | What it usually means for AI |
|---|---|---|
| GDPR | Personal data processing and security | Lawful basis, data minimization, access controls, retention rules, breach readiness |
| EU AI Act | Risk-based AI obligations | Classify AI by risk, add controls for high-risk systems, document and monitor models |
| DORA | ICT operational resilience | Incident response, resilience testing, third-party oversight for AI suppliers |
| GLBA (US) | Customer information safeguards | Privacy notices and security safeguards for customer data used in AI workflows |
| SOX (US) | Internal controls over reporting | Controls, evidence, and audit trails for AI affecting material reporting processes |

For a payments-specific view of what the EU AI Act could mean in practice, see this analysis of its impact on digital payments.

Establishing an AI Governance Framework

An AI governance framework in financial services defines who owns each AI system, how models are approved and monitored, and what evidence you keep for regulators and auditors.

Good governance makes AI usable in production because it turns “trust us” into documented controls, clear decisions, and repeatable oversight.

A practical governance setup usually includes:

- Board-level oversight: AI risk sits with the same leadership that owns operational risk, compliance, and reputation.

- Model risk management: Validation before release, plus ongoing monitoring for drift, performance drops, and anomalies.

- Responsible AI practices: A review group to set standards for fairness, explainability, and acceptable use.

- Third-party governance: Vendor due diligence, retention rules, access controls, and audit rights for AI suppliers.

- Evidence by default: Data sources, approvals, model versions, change logs, and decision trails captured as part of delivery, not after.

For a deeper blueprint that connects these controls to enterprise data practices, check out our whitepaper on AI governance.

Implementing Secure AI Analytics for Banks and Financial Institutions

Secure AI-powered analytics for banks means AI delivers answers from governed data that are accurate, traceable, and permissioned. In regulated workflows, the biggest risks are often quiet ones, like made-up metrics, accidental data exposure, or outputs you cannot prove later.

Machine learning in financial security can identify patterns that humans miss, but it still requires a solid foundation to stand on. The safest setups combine AI with deterministic query results, strict access controls, and audit-ready evidence you can replay when risk, compliance, or regulators ask “how did you get this number?”

The Advantage of a Deterministic Query Engine

A deterministic query engine is a system that answers questions by running a defined query on governed data, so the same question returns the same result every time. This matters in banking because leaders need numbers they can trust, repeat, and defend.

Instead of letting an AI model “make up” a metric, the engine pulls answers from approved sources using approved definitions and the user’s permissions. This makes results traceable, which is what audit teams care about when they ask, “Where did this number come from?”

It is also what makes agentic analytics safer. With an analytics catalog that lists approved metrics, business definitions, and usage guidance, an embedded AI agent can help users explore data and automate routine analysis without inventing logic. The agent can look up the correct definition, run the appropriate governed query, and stop when access or context is missing, rather than guessing.
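As a rough sketch of that behavior, the example below lets an agent answer only from a catalog of approved metric definitions, and return a refusal instead of a guess when the metric is unknown or the user lacks access. The catalog contents, the `payments` table, and the `answer` function are assumptions for illustration and do not describe any specific product's API.

```python
import sqlite3

# Hypothetical catalog: approved metric name -> approved SQL definition.
CATALOG = {
    "declined_payment_count":
        "SELECT COUNT(*) FROM payments WHERE status = 'declined'",
    "total_settled_amount":
        "SELECT COALESCE(SUM(amount), 0) FROM payments WHERE status = 'settled'",
}


def answer(conn, metric: str, user_metrics: set) -> dict:
    """Run the approved query for a metric, or refuse without guessing."""
    if metric not in CATALOG:
        return {"status": "unknown_metric", "metric": metric}
    if metric not in user_metrics:
        return {"status": "access_denied", "metric": metric}
    value = conn.execute(CATALOG[metric]).fetchone()[0]
    # Returning the query alongside the value keeps the number traceable.
    return {"status": "ok", "metric": metric, "value": value,
            "query": CATALOG[metric]}


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (id INTEGER, amount REAL, status TEXT)")
conn.executemany(
    "INSERT INTO payments VALUES (?, ?, ?)",
    [(1, 120.0, "settled"), (2, 80.0, "declined"), (3, 45.5, "settled")],
)

granted = {"total_settled_amount"}
print(answer(conn, "total_settled_amount", granted)["value"])     # 165.5
print(answer(conn, "declined_payment_count", granted)["status"])  # access_denied
print(answer(conn, "average_basket_size", granted)["status"])     # unknown_metric
```

Because the language model never writes the SQL, the same question against the same data always produces the same number.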

Deterministic query engines avoid hallucination

The Role of Semantic Layers in Secure AI

A semantic layer is a governed “translation layer” that turns raw data into trusted business metrics, so every tool and every AI system speaks the same language.

The semantic layer sits between your databases and your users, helping to secure AI programs by:

- Keeping metrics consistent: The same KPI means the same thing across teams, dashboards, and AI outputs.

- Controlling who can see what: Access rules apply at the metric and dimension level, not just at the database level.

- Blocking sensitive data by default: Restricted fields and slices stay hidden unless explicitly allowed.

- Supporting safe self-service: More people can explore data without creating governance and compliance chaos.
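A small sketch can show what metric-level and dimension-level access rules look like when they default to deny. The `Role` class, the dimension names, and the `check_request` helper are hypothetical, and a real semantic layer would enforce this inside the query path rather than in application code.

```python
from dataclasses import dataclass, field

# Hypothetical dimensions that count as sensitive in this example.
RESTRICTED_DIMENSIONS = {"customer_name", "account_number"}


@dataclass
class Role:
    name: str
    metrics: set = field(default_factory=set)
    dimensions: set = field(default_factory=set)


def check_request(role: Role, metric: str, dimensions: list) -> list:
    """Return the reasons a request is denied; an empty list means allowed."""
    problems = []
    if metric not in role.metrics:
        problems.append(f"metric '{metric}' not granted")
    for dim in dimensions:
        # Default deny: a dimension is visible only if explicitly granted.
        if dim not in role.dimensions:
            kind = "restricted" if dim in RESTRICTED_DIMENSIONS else "ungranted"
            problems.append(f"{kind} dimension '{dim}'")
    return problems


analyst = Role("fraud_analyst", metrics={"chargeback_rate"},
               dimensions={"merchant_category", "region"})

print(check_request(analyst, "chargeback_rate", ["region"]))
# []
print(check_request(analyst, "chargeback_rate", ["region", "account_number"]))
# ["restricted dimension 'account_number'"]
```

The same check applies whether the request comes from a dashboard, a notebook, or an AI agent, which is what keeps the rules consistent across tools.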

Building Cloud Security for AI Analytics

Cloud security for AI analytics protects the data and reporting workloads that run on managed cloud services. It keeps the entire setup safe, ensuring the AI only sees what it is allowed to see and that you can trace what happened if something goes wrong.

In practice, teams usually secure cloud-based AI analytics using layers of controls:

- Deployment design (who can access what, anywhere): Keep identity, policies, and logging consistent across hybrid and multi-cloud so security does not change by environment.

- Data protection (keep data private in storage and transit): Encrypt data at rest and in transit, and apply masking where needed to reduce exposure.

- Sensitive data locality and AI isolation: Keep highly sensitive financial data inside approved environments and prevent it from being sent to public clouds or external LLMs. AI interactions should be mediated through governed query layers (not direct SQL on raw tables).

- Access edges (secure the entry points): Put API gateways in front of AI services to enforce authentication, authorization, rate limits, and request logging.

- Containment and recovery (limit damage, restore fast): Harden containers, segment workloads, and maintain backups and disaster recovery so you can roll back models and configs after incidents.

AI-Driven Use Cases Requiring Enhanced Security

Use cases like AI-driven fraud detection, AI for transaction monitoring, and other high-impact AI applications in financial services require enhanced security because they rely on sensitive data and can drive real financial outcomes. The right security approach depends on the use case: what the AI is allowed to do, what data it can access, and how quickly its outputs translate into action.

Traditional approach: Transaction monitoring dashboards highlighting unusual activity.

AI-enhanced approach: Fraud scenario miners analyze patterns across transactions, merchants, devices, and customer behavior to discover new fraud typologies earlier.

AI security requirements:

- Trusted signals: Integrate vetted threat intelligence feeds to ensure models use current indicators of fraudulent infrastructure.

- Explainable outputs: Provide reason codes or drivers so investigators can defend outcomes in reviews.

- Decision-grade audit trails: Log inputs used, model version, thresholds, and who approved changes for every automated action.

- Controlled tuning: Manage false positives with tested changes and approvals, not ad hoc overrides in production.

McKinsey outlines the core capabilities that strong fraud programs rely on, which can help you decide what controls your AI-driven detection needs.

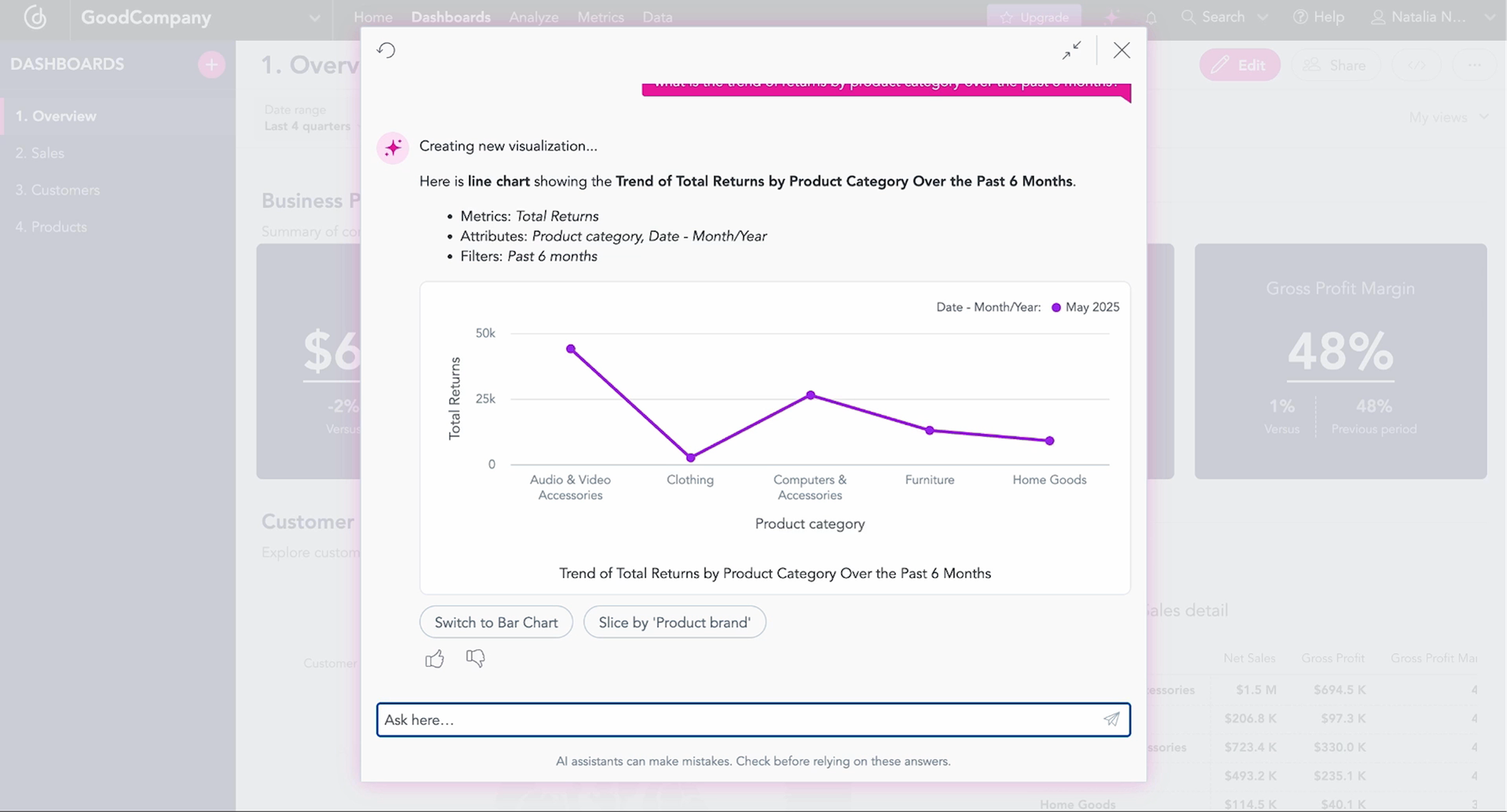

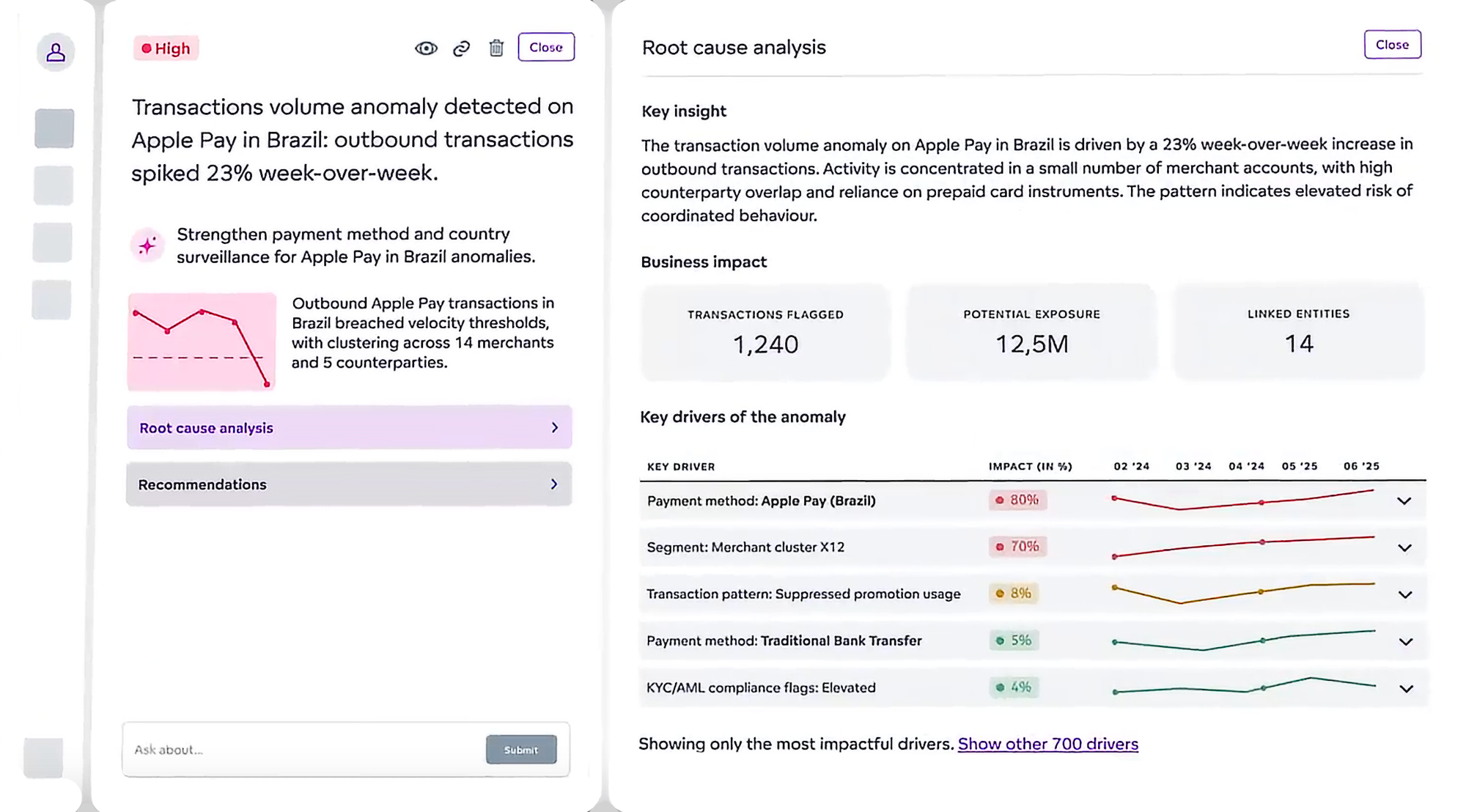

Anomaly Detection and Root Cause Analysis

Anomaly detection and root cause analysis require stronger controls because they connect data across systems and can, if you are not careful, reveal sensitive links. A good investigation tool should help analysts see patterns without accidentally exposing customer identities or confidential relationships.

Traditional approach: Corridor or velocity dashboards that flag unusual transactions.

AI-enhanced approach: Investigation agents that link related signals across accounts, devices, merchants, and locations, then draft case summaries for investigators.

AI security requirements:

- Protect customer data: Limit what the system can output, and exclude sensitive fields from prompts and summaries.

- Control cross-system linking: Use governed identifiers and strict access rules to ensure accurate and authorized entity matching.

- Make audits easy: Keep tamper-resistant investigation logs so every query, enrichment step, and conclusion can be reconstructed during audits or disputes.
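One common way to make an investigation log tamper-evident is to chain entries together with hashes, so that editing any earlier entry invalidates everything after it. The sketch below assumes an in-memory list and made-up event fields; it shows the chaining idea only, and a real system would also need protected storage and access controls around the log itself.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev_hash": prev_hash,
                "hash": _digest(prev_hash, event)})


def verify(log: list) -> bool:
    """Recompute the chain and report whether every link still matches."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


case_log = []
append_entry(case_log, {"step": "query", "detail": "velocity check, account token A17"})
append_entry(case_log, {"step": "enrichment", "detail": "device match"})
append_entry(case_log, {"step": "conclusion", "detail": "escalate to investigator"})
print(verify(case_log))  # True

case_log[1]["event"]["detail"] = "no device match"  # simulate tampering
print(verify(case_log))  # False
```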

Anomaly detection & root cause analytics in an agentic analytics solution

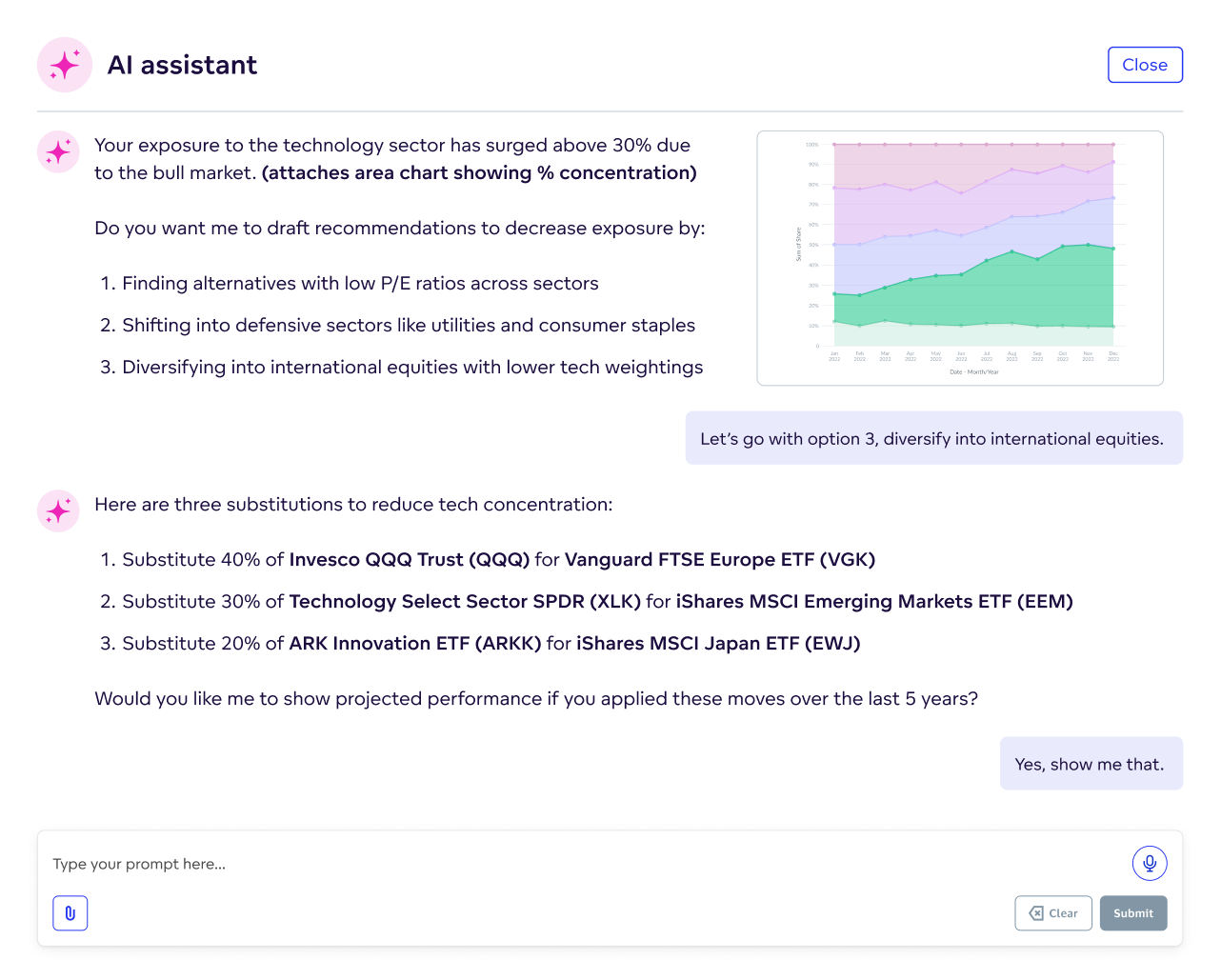

Capital Markets and Portfolio Management

This use case requires additional security because AI recommendations can directly affect trades and risk exposure, and those decisions are made quickly with high stakes.

Traditional approach: Performance dashboards showing market movements.

AI-enhanced approach: Portfolio rebalancing agents that suggest trades while respecting mandates, tax rules, and liquidity constraints.

AI security requirements:

- Secure market data feeds: Authenticate and validate pricing and reference data, and monitor for anomalies.

- Protect proprietary strategies: Ensure prompts, outputs, and logs do not expose sensitive signals, models, or positioning.

- Fiduciary audit trails: Make every recommendation traceable to inputs, constraints, approvals, and model versions.

- Risk limits in real time: Prevent agents from suggesting or executing actions outside defined thresholds.
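The last requirement in the list above can be sketched as a check that runs on every agent suggestion before it reaches a trader or an execution system. The limit values, the `Suggestion` shape, and the position data here are invented for illustration; real mandates involve many more constraints than order size and position weight.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    max_order_notional: float   # largest single order, in portfolio currency
    max_position_weight: float  # largest position as a fraction of the portfolio


@dataclass(frozen=True)
class Suggestion:
    symbol: str
    notional: float  # signed: positive buys, negative sells


def check_suggestion(s: Suggestion, positions: dict,
                     portfolio_value: float, limits: Limits) -> list:
    """Return the limit breaches for a suggestion; an empty list means it passes."""
    reasons = []
    if abs(s.notional) > limits.max_order_notional:
        reasons.append("order notional exceeds limit")
    new_position = positions.get(s.symbol, 0.0) + s.notional
    if abs(new_position) / portfolio_value > limits.max_position_weight:
        reasons.append("resulting position weight exceeds limit")
    return reasons


limits = Limits(max_order_notional=50_000, max_position_weight=0.10)
positions = {"ACME": 90_000.0}
portfolio_value = 1_000_000.0

print(check_suggestion(Suggestion("ACME", 5_000), positions, portfolio_value, limits))
# []
print(check_suggestion(Suggestion("ACME", 20_000), positions, portfolio_value, limits))
# ['resulting position weight exceeds limit']
```

Keeping the check outside the agent means a prompt change or a model update cannot loosen the limits by accident.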

Increase security with an AI assistant

Best Practices for AI Data Protection in Financial Institutions

Best practices for AI data protection in financial institutions minimize data exposure while maintaining reliable, explainable, and compliant systems. The safest approach is to build privacy and controls into development and daily operations, not bolt them on after deployment.

Secure AI Model Development and Deployment

Secure AI deployment is simplest when every model passes the same pre-release checklist before it can touch real customer data. The four essentials are: securing the pipeline, proving it works, releasing safely, and maintaining control:

- Secure pipeline: Approved data only, locked-down credentials, monitored releases.

- Prove it works: Test accuracy and edge cases before launch, not after.

- Release safely: Start small (in shadow mode or canary), then expand if the results hold.

- Stay in control: Version every model and maintain a rollback option in case performance or risk changes.
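The "stay in control" item can be illustrated with a very small registry that records every version and keeps a promotion history, so rolling back is a lookup rather than a rebuild. The `ModelRegistry` class and the artifact paths are hypothetical; most teams would use the registry that ships with their ML platform.

```python
class ModelRegistry:
    """Minimal in-memory registry: versioned artifacts plus promotion history."""

    def __init__(self):
        self._versions = {}  # version label -> artifact reference
        self._history = []   # versions in the order they were promoted

    def register(self, version: str, artifact_uri: str) -> None:
        if version in self._versions:
            raise ValueError(f"version {version} already registered")
        self._versions[version] = artifact_uri

    def promote(self, version: str) -> None:
        if version not in self._versions:
            raise KeyError(f"unknown version {version}")
        self._history.append(version)

    @property
    def live(self):
        """The currently promoted version, or None if nothing is live."""
        return self._history[-1] if self._history else None

    def rollback(self) -> str:
        """Drop the live version and return to the previously promoted one."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]


registry = ModelRegistry()
registry.register("v1", "models/fraud/v1")  # hypothetical artifact paths
registry.register("v2", "models/fraud/v2")
registry.promote("v1")
registry.promote("v2")
print(registry.live)        # v2
print(registry.rollback())  # v1
print(registry.live)        # v1
```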

Continuous Monitoring and Threat Detection

Continuous monitoring and threat detection means treating AI like a living production system, not a one-off model you ship and forget. You track performance and data quality in real time, and you set clear thresholds for when to investigate, pause, or retrain because drift is normal in finance.
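One widely used drift signal is the population stability index (PSI), which compares how a feature or score is distributed today against a baseline. The sketch below is a minimal version under stated assumptions: the bin edges are supplied by the caller, both samples are non-empty, and the 0.10 and 0.25 thresholds are common rules of thumb, not regulatory values.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples over shared bins."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value has reached or passed.
            counts[sum(1 for e in edges if v >= e)] += 1
        # Floor each share at eps so empty bins do not break the logarithm.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def action_for(score, investigate_at=0.10, pause_at=0.25):
    """Map a PSI score to an operational response (assumed thresholds)."""
    if score >= pause_at:
        return "pause"
    if score >= investigate_at:
        return "investigate"
    return "ok"


baseline = [10] * 50 + [60] * 50  # half the transactions below 50, half above
today = [10] * 10 + [60] * 90     # the mix has shifted toward larger amounts

print(action_for(psi(baseline, baseline, edges=[50])))  # ok
print(action_for(psi(baseline, today, edges=[50])))     # pause
```

Writing the thresholds down as code, with named owners for each response, is what turns "we monitor for drift" into something an auditor can verify.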

You also watch how the system is being used. Unusual query spikes, unexpected data access, or sudden bursts of automated actions can be early warning signs.

It is crucial to plan for the bad day. Your incident runbook should spell out how to freeze a model, roll back to a known-good version, preserve evidence, and communicate what happened.

Employee Training and Awareness

Employee training matters because responsible AI practices can break down when people don’t know the rules. Many organizations begin with basic AI security awareness that clarifies what data can be used, which tools are approved, and what should never be pasted into a prompt.

From there, training often becomes role-specific. Analysts typically benefit from guidance on safe querying and sharing, developers from secure AI deployment practices, and risk teams from clear review checklists aligned to AI governance in financial services.

Defenses also tend to work best when they’re exercised in practice. Tests can surface weak spots, access monitoring can reduce insider risk, and a culture of early reporting helps teams address issues while they’re still small.

The Future of AI Cybersecurity in Banking

The future of AI cybersecurity in banking is about controlling how AI systems access data and influence decisions. As banks use AI for fraud, compliance, and customer service, the risk shifts from “will someone breach our systems?” to “can an AI tool reach sensitive data, take actions, or steer outcomes faster than we can supervise?”

That is why future AI cybersecurity trends are less about one blockbuster attack and more about everyday control. Who is allowed to use the AI, what data it can see, what actions it can trigger, and how quickly you can detect and stop unusual behavior?

Regulation will keep evolving, so the most practical response is an adaptive compliance program. Keep one inventory of AI systems, one way to classify risk, and one evidence package you can reuse across audits and markets, including proof of model oversight and third-party controls.

A strong AI data intelligence platform will become a force multiplier, as it helps teams understand what data exists, who can access it, how it is used, and which metrics and definitions are trusted before AI systems are integrated into production workflows.

How GoodData Enables Secure AI Analytics for Financial Services

The GoodData platform enables secure AI analytics for financial services by providing teams with governed, auditable access to trusted metrics, allowing AI to assist in decision-making without exposing sensitive data or producing untraceable outputs.

GoodData supports agents, autopilots, co-pilots, and AI assistants

To find out more about our secure analytics solution, watch this video on agentic AI in the financial industry. Alternatively, get a demo to explore how GoodData's platform supports the security and compliance needs that banks, fintechs, and payment providers face when analytics moves from reporting into automated workflows.

FAQs About AI Security in Financial Services

Generative AI can be safe in banking when it is used with guardrails, not as a free-running decision engine. Keep genAI focused on summarizing, assisting, and drafting, while deterministic systems and controls handle final numbers and actions. Pair it with monitoring, logging, and AI-powered threat detection for misuse.

The most overlooked risk is uncontrolled access and shadow usage. Teams often approve a model but forget the surrounding reality: who can prompt it, what data it can reach, what gets logged, and which tools people use outside approved platforms. Many emerging AI threats in banking start as simple permission sprawl.

Yes, AI tools can leak data through prompts, outputs, logs, or integrations, even without a “breach.” This is why GDPR pushes minimization and access control, and why regulatory AI compliance needs evidence of protection. The safest setups mask sensitive fields and tightly control retention and sharing.

You reduce bias risk by testing for it and making decisions explainable. Track fairness metrics by customer segment, stress-test edge cases, and require sign-off when outcomes shift. Explainable AI in finance and model transparency also mean keeping reason codes, data lineage, and version history for decisions.

AI can increase exposure if it widens access, adds new vendors, or automates actions without oversight. It can also reduce fraud when used with strong controls, good data, and human review for high-impact decisions. Machine learning in financial security works best when paired with monitoring and AI-powered threat detection.

Yes, AI can support compliance by improving monitoring, documentation, and reporting, but it does not replace governance. The EU AI Act raises requirements as risk increases, and DORA focuses on operational resilience and third-party oversight. Treat compliance as a reusable control program with auditable evidence.

The ROI is faster scaling with fewer surprises. Strong controls reduce rework, shorten audit and review cycles, and prevent expensive incidents like data exposure or unexplainable decisions. Secure AI analytics for banks also makes adoption easier because leaders trust the outputs on a financial services analytics platform.

Yes, third parties are a common source of AI vulnerabilities in fintech because integrations expand your attack surface. Require clear retention rules, access controls, audit rights, and security testing for vendors. Many emerging AI threats in banking come from weak connectors, unclear logging, or poor segregation.

Accuracy changes as data and behavior change, so you need monitoring, not hope. Track drift, performance, and data quality, and use controlled retraining with approval gates. Zero trust architecture helps by enforcing least privilege and continuous verification, so changes do not silently degrade safety.

AI improves data security in financial services by detecting anomalies and fraud patterns faster, prioritizing security signals, and scaling monitoring across systems. It also helps prevent leakage when paired with access control, redaction, and logging, especially in AI agent workflows where tools and data access can expand risk quickly.

Leaders should look for proof, not reassurance. You want an inventory of AI systems, clear owners, access controls, monitoring, and incident playbooks, plus evidence you can show in an audit. If you cannot explain outputs and trace them to governed data, model transparency is not strong enough yet.

Examples of secure AI analytics platforms include GoodData (governed semantic layer and “metadata-only” AI querying for safer, controlled analytics), Microsoft Fabric/Power BI with Copilot (documented privacy and security controls for Copilot within Fabric), Databricks (Unity Catalog governance plus Mosaic AI Gateway controls for secure model access and guardrails), and ThoughtSpot (Spotter/agentic features with documented security and semantic-layer foundations).