Analytics Inside Virtual Reality: Too Soon?

Have you ever thought about doing analytics in virtual, augmented, or mixed reality? What benefits could it bring to end users? We explored potential use cases for this technology in the data analytics space and implemented a working demo during the latest GoodData hackathon. In this article, I will briefly cover what we did, as well as link you to the demo application and its source code.

First, Let Me Ask You for Feedback

Recently, I presented a short version of this article at the annual DATA mesh meetup and asked for the following feedback:

The LinkedIn poll will be open for the next two weeks. Please vote!

I was quite surprised by how many people had non-gaming experience with not only virtual but also augmented and mixed reality! Many of them approached me during the after-party to discuss potential use cases.

The Results First

To be honest, we strongly doubted that we would be able to deliver anything functional, but we did! The video demonstrates what we achieved. Try it in your VR headset (or at least in any web browser).

Hint: look around to find the data visualization.

The next chapters describe what use cases we discovered, how we implemented the result, and what pros and cons we found in the area of VR development for data analytics.

The Story Behind This Endeavour

As an analytics provider emphasizing the Headless BI concept, we always seek new data consumption use cases.

So far, we have touched on the following, at least to some degree:

- Dashboarding + ad hoc analytics (drag-and-drop experience for end users).

- Embedding into custom business applications.

- ML and AI, including notebooks like Deepnote/Hex. We published an article about how to maintain the ML-Analytics cycle.

- Communicators like Slack or Teams. We published an article about integration with Slack and ChatGPT.

Like every year, the time came for yet another hackathon at GoodData. This time around, I wanted to explore something completely new, something we hadn’t touched at all yet.

I fell in love with Oculus Quest (both one and two, waiting for three). Besides games, I also played with it to try and implement something meaningful. So this year’s topic for the hackathon was clear. However, I am an expert in the area of databases and the backend, so I recruited Dan Homola, our famous front-end architect, to help me. Lucky me!

Why not try our 30-day free trial?

Fully managed, API-first analytics platform. Get instant access — no installation or credit card required.

Get started

Discovery First!

This was the most fun part of the hackathon. We talked for hours about potential use cases. Neither Dan nor I is an expert UX designer, so we should follow up on that later with correspondingly skilled people! Anyway, we came up with the following ideas.

Leverage 3D Space

The already known types of 3D data visualizations are obvious candidates. However, we feel there is more potential in the VR/MR/AR space, where you can move and interact in more ways than just interacting in a web browser.

Interactivity

There are so many use cases! Various data filters, building 3D visualizations with a drag-and-drop experience.

We were discussing one particular very interesting use case — the interaction between semantic models and visualizations. In browsers, the semantic model is usually represented as a flat (sometimes hierarchical) list of entities (attributes, facts, metrics, …). Imagine what you could achieve in a 3D space! Visualize a physical/logical data model in a 3D space, and drag and drop entities from it, while always being able to see the surrounding context.

Moreover, GoodData provides a unique feature: filtering the list of valid entities based on the list of entities already used in the visualization. Imagine how this filtering could be visualized in 3D, where the still-valid part of the 3D model would be highlighted based on relationships among entities.

Advanced Visualizations

Again, so many options. We explored resources like Plotly 3D charts. An obvious candidate is any kind of spatial analytics, like Google Earth on steroids.

Speech-To-Text

An obvious use case for a VR (or AR) headset.

Regarding analytics, we recently published an article describing an integration of analytics with Slack that leverages natural language query (NLQ). What if headset users could ask for a report with their voice, using natural language? Under the hood, the request would be transformed into an internal report definition using properly pre-trained Large Language Models (LLM). Then the internal report definition would be sent to the backend, and the corresponding visualization would be rendered in virtual reality. There are other use cases, like exploring semantic models.

Augmented/Mixed Reality

Personally, I consider this option the most promising one.

We are close to having AR headsets that are lightweight enough and have batteries that last the whole day. Or at least it looks like we are not that far from it. Once the price of such glasses is similar to the current price of Oculus Quest, I expect massive adoption: AR headsets could replace mobile phones.

Being ready to embed analytics into various AR products will give you a competitive advantage. Personally, we were thinking about implementing something like Tinder++, or at least detecting people in our office and displaying their personal analytics based on data we have in our internal systems. I know, too ambitious, but definitely thought-provoking (and scary, I recommend reading the book Zero by Marc Elsberg).

Prototyping

Anyway, we are ready. With just a few lines of code (hackathon quality, so please be forgiving), you can collect report results with our GoodData UI.SDK and embed them into VR.

Regarding developer velocity with devices like Oculus:

- We lost a lot of time trying to use the CodeSandbox service. Refreshing the (publicly available) application wasn’t deterministic; we often kept seeing the old version.

- We finally connected Oculus to our internal network, and directly to my laptop, where I started the application locally (and regularly git-rebased it to adopt Dan’s changes).

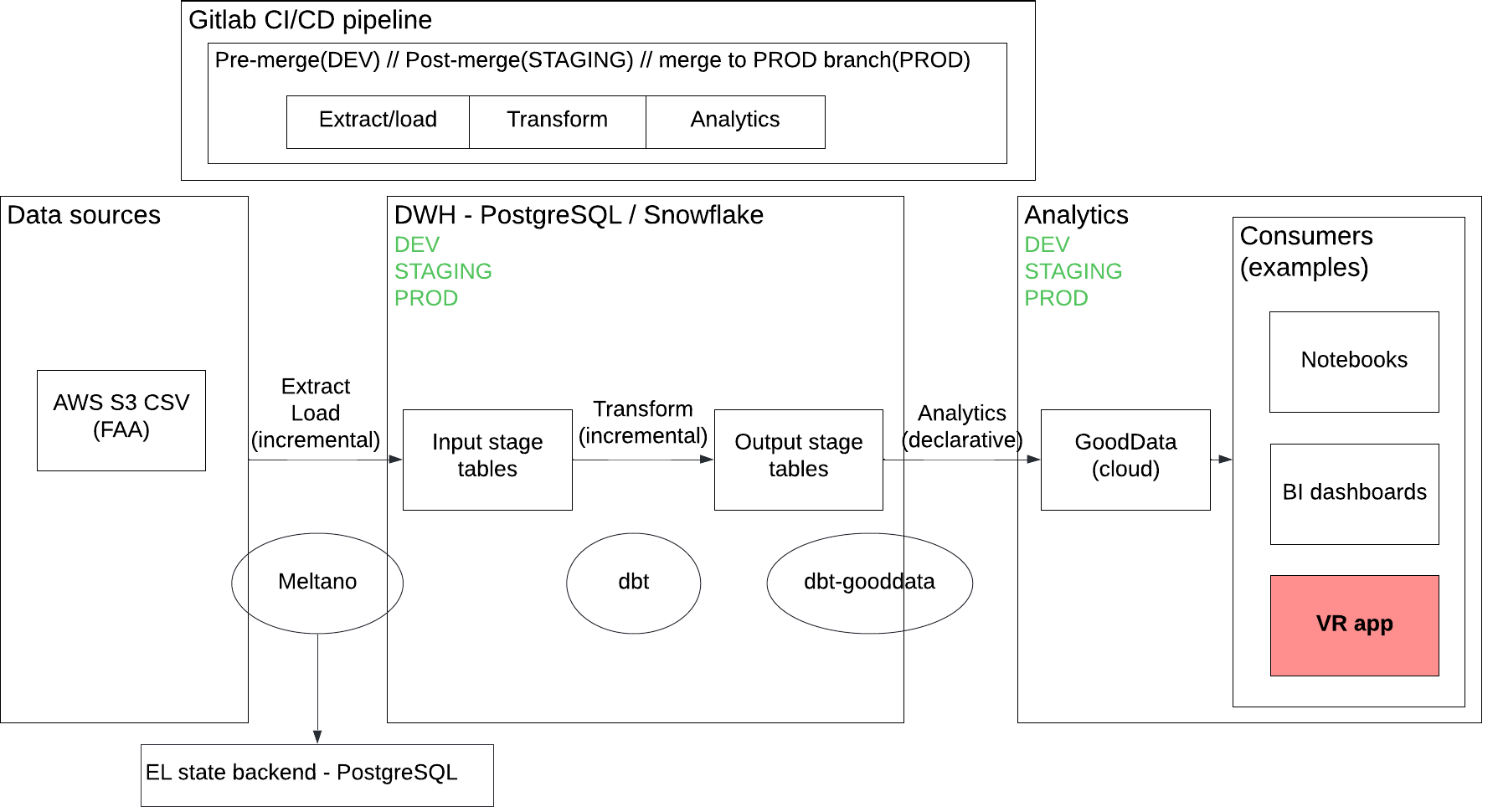

Regarding CI/CD: I decided to incorporate the VR application into an existing CI/CD data pipeline (more on that e.g. in Extending CI/CD data pipeline with Meltano).

Conclusion: with a single merge request you can extend the data pipeline and the (VR) application and you can consistently deliver it to various environments.

Frontend

We found only one relevant (open source) framework, which we could use to deliver at least something in under 24 hours: A-FRAME. A-FRAME provides a WebVR (browser) experience.

They provide quite good documentation, though it is sometimes slightly misleading.

Unfortunately, their SDK aframe-react seems to be no longer under active development, and it did not work properly for us. So we had to do everything with pure HTML and JavaScript.

Basically, developers can generate A-FRAME HTML-like entities representing various types of objects in 3D space. It was fairly easy to generate data points as spheres positioned based on underlying data, with their size and color also being derived from the data. Luckily, I found source code implementing thumbstick movement as an alternative to physical movement (which still makes sense with Oculus Quest all-in-one devices).
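To illustrate the idea of generating sphere entities from data, here is a minimal sketch. It is not the code from our repository: the helper names (`sphereMarkup`, `sceneMarkup`) and the point shape (`x`, `y`, `z`, `radius`, `color`) are assumptions made up for this example. It only relies on the A-FRAME `<a-sphere>` primitive and its `position`, `radius`, and `color` attributes.

```javascript
// Hypothetical helper: build the markup for one A-FRAME sphere.
// The point shape ({x, y, z, radius, color}) is an assumption for illustration.
function sphereMarkup(point) {
  const { x, y, z, radius, color } = point;
  return `<a-sphere position="${x} ${y} ${z}" radius="${radius}" color="${color}"></a-sphere>`;
}

// Hypothetical helper: build the markup for all data points, one sphere per line.
function sceneMarkup(points) {
  return points.map(sphereMarkup).join("\n");
}

// Made-up values, only to show the shape of the input and output.
const examplePoints = [
  { x: 0, y: 1, z: -3, radius: 0.2, color: "#ff0000" },
  { x: 1, y: 2, z: -4, radius: 0.4, color: "#00ff00" },
];
const exampleMarkup = sceneMarkup(examplePoints);
```

In a browser, one option would be to place the resulting markup into a container entity inside the `<a-scene>` element; building the strings separately keeps the data-to-entity mapping easy to test without a headset.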

The application is a part of the gooddata-data-pipeline repository and is consistently delivered to two separate environments with the free-forever Render service (really easy to set up!):

- vr_analytics_stg when a change is merged to the main branch. Running on top of the GoodData staging environment (workspace).

- vr_analytics when a change is merged to the prod branch. Running on top of the GoodData production environment (workspace).

You can find the complete source code here.

Backend

This part was too easy. I reused our public CI/CD data pipeline demo.

I decided to use FAA (Federal Aviation Administration) data about flights in the United States. The data is available in our AWS S3 public bucket in the form of CSV files. Adding AWS S3 CSV as a new source in Meltano only required adding a few lines to the meltano.yml file. Then I wrote a few dbt SQL models to transform the data into a model that was ready for analytics.

The GoodData logical data model is generated from dbt models (dbt-gooddata plugin), so no work was required there. Finally, I had to create a report suitable to be visualized in 3D space. I decided to slice various metrics by two date granularities, quarter/year and day of week, mapped to the X and Y axes. The idea was to analyze both the overall history of flights and the breakdown per day of the week. The Z axis, radius, and color of each data point depend on metric values.
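The mapping described above can be sketched roughly as follows. This is an illustrative assumption, not the hackathon code: the row fields (`quarter`, `dayOfWeek`, `flights`, `avgDelay`, `distance`), the output ranges, and the blue-to-red color ramp are all invented for the example.

```javascript
// Linearly rescale value from [min, max] to [outMin, outMax].
function rescale(value, min, max, outMin, outMax) {
  if (max === min) return (outMin + outMax) / 2; // avoid division by zero
  return outMin + ((value - min) / (max - min)) * (outMax - outMin);
}

// Two-digit hexadecimal representation of an integer in 0..255.
const toHex = (n) => n.toString(16).padStart(2, "0");

// Hypothetical mapping of report rows to 3D data points.
// Assumed row shape: { quarter: "2019-Q1", dayOfWeek: 0..6, flights, avgDelay, distance }
function toPoints(rows) {
  // Sorted distinct quarters give each quarter an integer position on the X axis.
  const quarters = [...new Set(rows.map((r) => r.quarter))].sort();
  const range = (key) => {
    const values = rows.map((r) => r[key]);
    return [Math.min(...values), Math.max(...values)];
  };
  const [zMin, zMax] = range("flights");
  const [rMin, rMax] = range("distance");
  const [cMin, cMax] = range("avgDelay");

  return rows.map((r) => {
    // Color ramp: blue for the lowest metric value, red for the highest.
    const red = Math.round(255 * rescale(r.avgDelay, cMin, cMax, 0, 1));
    return {
      x: quarters.indexOf(r.quarter),
      y: r.dayOfWeek,
      z: rescale(r.flights, zMin, zMax, 0, 5),
      radius: rescale(r.distance, rMin, rMax, 0.1, 0.5),
      color: `#${toHex(red)}00${toHex(255 - red)}`,
    };
  });
}

// Made-up rows, only to show the shape of the input.
const exampleRows = [
  { quarter: "2019-Q2", dayOfWeek: 0, flights: 100, avgDelay: 10, distance: 500 },
  { quarter: "2019-Q1", dayOfWeek: 6, flights: 300, avgDelay: 30, distance: 1500 },
];
const scaledPoints = toPoints(exampleRows);
```

Rescaling each metric into a fixed range keeps the spheres within a comfortable viewing volume regardless of the absolute metric values.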

Conclusion

The Good

It’s amazing what is possible to deliver in under 24 hours!

The Bad

A-FRAME is still not mature enough. However, it is actively developed, so we can expect a better experience in the future. Anyway, for a serious production-ready project, I would search for a more mature framework, maybe something like Unity.

And the Ugly

What really hampered us at the beginning was poor developer velocity, caused by our attempts to use CodeSandbox, which did not work for us.

We finally improved it by connecting the Oculus headset to my laptop through our internal network and running the application locally.

… and by the way, we won! 🙌

Want To Try It for Yourself?

If you want to put any of these principles to work, why not register for the free GoodData trial and try applying them yourself?
