Make Key Driver Analysis Smarter with Automation

What is Key Driver Analysis, and why should you care?

Key Driver Analysis (KDA) identifies the primary factors that influence changes in your data, enabling informed and timely decisions. Imagine managing an ice cream shop: if your suppliers' ice cream prices spike unexpectedly, you'd want to quickly pinpoint the reasons, be it rising milk costs, chocolate shortages, or external market factors.

Traditional KDA can tell you what drove the changes in your data. But while it provides valuable insights, the results often arrive too late, delaying critical decisions. Why? Because KDA traditionally involves extensive statistical analysis, which can be resource-intensive and slow.

Automation transforms this scenario by streamlining the process and bringing KDA into your decision-making much faster through modern analytical tools.

Why Bring KDA Closer to Your Decisions?

Consider the ice cream shop scenario: one morning, the price of your vanilla ice cream supply spikes by 63%. A manual KDA might reveal that milk and chocolate prices have surged, but only hours or even days later, depending on when it's run (or whether someone remembers to check the dashboard). In the meantime, you're left scrambling for solutions.

Automating this process with real-time alerts helps ensure you don't miss crucial events:

- A webhook fires when ingredient prices exceed defined thresholds.

- An automated KDA run starts immediately and identifies the critical drivers within moments.

- Instant alerts let you act quickly, for example by sourcing alternative suppliers or adjusting prices, keeping your business agile.

These systems can significantly reduce your response times, allowing you to mitigate risks and leverage opportunities immediately, rather than reacting post-mortem.
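The flow described above can be sketched as a small event handler. This is a minimal illustration under stated assumptions, not GoodData's implementation: the `PriceEvent` type, the `run_kda` and `notify` callables, and the threshold values are hypothetical placeholders for your own alerting, analysis, and messaging integrations.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PriceEvent:
    """Hypothetical event describing a new ingredient price observation."""
    ingredient: str
    price: float


def handle_price_event(
    event: PriceEvent,
    thresholds: dict[str, float],
    run_kda: Callable[[PriceEvent], list[str]],
    notify: Callable[[str], None],
) -> bool:
    """Run KDA and send an alert if the price exceeds its threshold.

    Returns True when the event triggered an analysis, False otherwise.
    """
    limit = thresholds.get(event.ingredient)
    # 1. Trigger: only react when a threshold exists and is exceeded.
    if limit is None or event.price <= limit:
        return False
    # 2. Analysis: run KDA immediately for the triggering event.
    drivers = run_kda(event)
    # 3. Alert: push the identified drivers to whoever needs to act.
    summary = ", ".join(drivers) if drivers else "no clear drivers found"
    notify(f"{event.ingredient} price {event.price:.2f} exceeded {limit:.2f}: {summary}")
    return True
```

In a real deployment, the trigger would arrive as a webhook call, and `run_kda` would call into whatever service performs the analysis.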

Automating KDA

Automation significantly streamlines the KDA process. Sometimes, you don’t need to react to alerts immediately, but you need your answers by the next morning. Let's explore how you can set this up using a practical example with Python for overnight jobs:

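```python
import requests

# Method of an API client class exposing `self.host` and `self.token`.
# Notification and ResponseEnvelope are assumed to be Pydantic v2 models
# defined elsewhere; ResponseEnvelope is generic and wraps the "data" field.
def get_notifications(self, workspace_id: str) -> list[Notification]:
    params = {
        "workspaceId": workspace_id,
        "size": 1000,  # Fetch up to 1000 notifications in a single page.
    }
    res = requests.get(
        f"{self.host}/api/v1/actions/notifications",
        headers={"Authorization": f"Bearer {self.token}"},
        params=params,
        timeout=30,  # Don't let the overnight job hang on a stuck request.
    )
    res.raise_for_status()  # Raise on HTTP errors such as 401 or 404.

    # Validate the JSON body against the typed envelope and return its payload.
    ResponseModel = ResponseEnvelope[list[Notification]]
    parsed = ResponseModel.model_validate(res.json())
    return parsed.data
```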

For this example, I deliberately chose 1000 as the page size when polling notifications. If a single workspace produces more than 1000 notifications a day, you might want to reconsider your alerting rules, or you may benefit greatly from features like Anomaly Detection, which I touch on later in this article.

This retrieves up to 1000 notifications for a given workspace, allowing you to run KDA selectively during the night. This saves computation resources and focuses the analysis on the events in your data that actually matter.
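To run KDA selectively, the overnight job can reduce the fetched notifications to one analysis per alerted metric. The sketch below is one assumption about how you might do that: the `AlertNotification` fields (`metric_id`, `triggered_at`, `value`) are hypothetical and do not mirror the actual GoodData notification schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AlertNotification:
    """Hypothetical, simplified view of one alert notification."""
    metric_id: str
    triggered_at: datetime
    value: float


def select_for_kda(
    notifications: list[AlertNotification],
    now: datetime,
    window: timedelta = timedelta(hours=24),
) -> dict[str, AlertNotification]:
    """Keep only the latest notification per metric within the time window."""
    cutoff = now - window
    latest: dict[str, AlertNotification] = {}
    for n in notifications:
        if n.triggered_at < cutoff:
            continue  # Too old for tonight's run.
        current = latest.get(n.metric_id)
        if current is None or n.triggered_at > current.triggered_at:
            latest[n.metric_id] = n
    return latest
```

Each remaining entry then becomes one KDA job, so a metric that fired ten alerts during the day is analyzed once, using its most recent value.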

Alternatively, you can automate the processing of notifications with webhooks or our PySDK, so you don’t have to poll for them. Your service then simply reacts to each notification and computes KDA as soon as an outlier in your data is detected.
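If you go the webhook route, your service only needs an HTTP endpoint that accepts the alert payload, extracts the metric, filters, and triggering value, and starts KDA. The sketch below uses Python's standard-library `http.server`; the payload shape (`metric`, `filters`, `value` keys) and the `start_kda` hook are hypothetical, so adapt the parsing to the webhook payload your alert actually sends.

```python
import json
from dataclasses import dataclass, field
from http.server import BaseHTTPRequestHandler, HTTPServer


@dataclass
class AlertContext:
    """Metric, filters, and triggering value extracted from an alert."""
    metric: str
    value: float
    filters: dict[str, str] = field(default_factory=dict)


def parse_alert_payload(body: bytes) -> AlertContext:
    """Parse a (hypothetical) JSON webhook body into an AlertContext."""
    data = json.loads(body)
    return AlertContext(
        metric=data["metric"],
        value=float(data["value"]),
        filters=dict(data.get("filters", {})),
    )


def start_kda(ctx: AlertContext) -> None:
    """Hypothetical hook: hand the context to your KDA workflow (e.g., a job queue)."""
    print(f"Starting KDA for {ctx.metric} = {ctx.value} with filters {ctx.filters}")


class AlertWebhookHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            ctx = parse_alert_payload(self.rfile.read(length))
        except (ValueError, KeyError, TypeError):
            self.send_response(400)  # Malformed payload.
            self.end_headers()
            return
        start_kda(ctx)
        # 202 Accepted: in practice start_kda would enqueue the job, not run it inline.
        self.send_response(202)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Block and serve webhook requests on the given port."""
    HTTPServer(("0.0.0.0", port), AlertWebhookHandler).serve_forever()
```

Call `serve()` from your service's entry point; the port and the absence of authentication checks are simplifications you would revisit before exposing the endpoint.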

Automated KDA in GoodData

While we are currently working on integrated Key Driver Analysis as an internal feature, we already have a working flow that elegantly automates this externally. Let’s have a look at the details. If you’d like to learn more or want to try to implement it yourself, feel free to reach out!

Every time a configured alert in GoodData is triggered, it initiates the KDA workflow (through a webhook). The workflow operates in multiple steps:

- Data Extraction

- Semantic Model Integration

- Work Separation

- Partial Summarization

- External Drivers

- Final Summarization

Data Extraction + Semantic Model Integration

First, it extracts information about the metric and filters involved in the alert, including the value that triggered the notification, and then it reads the related semantic models using the PySDK.

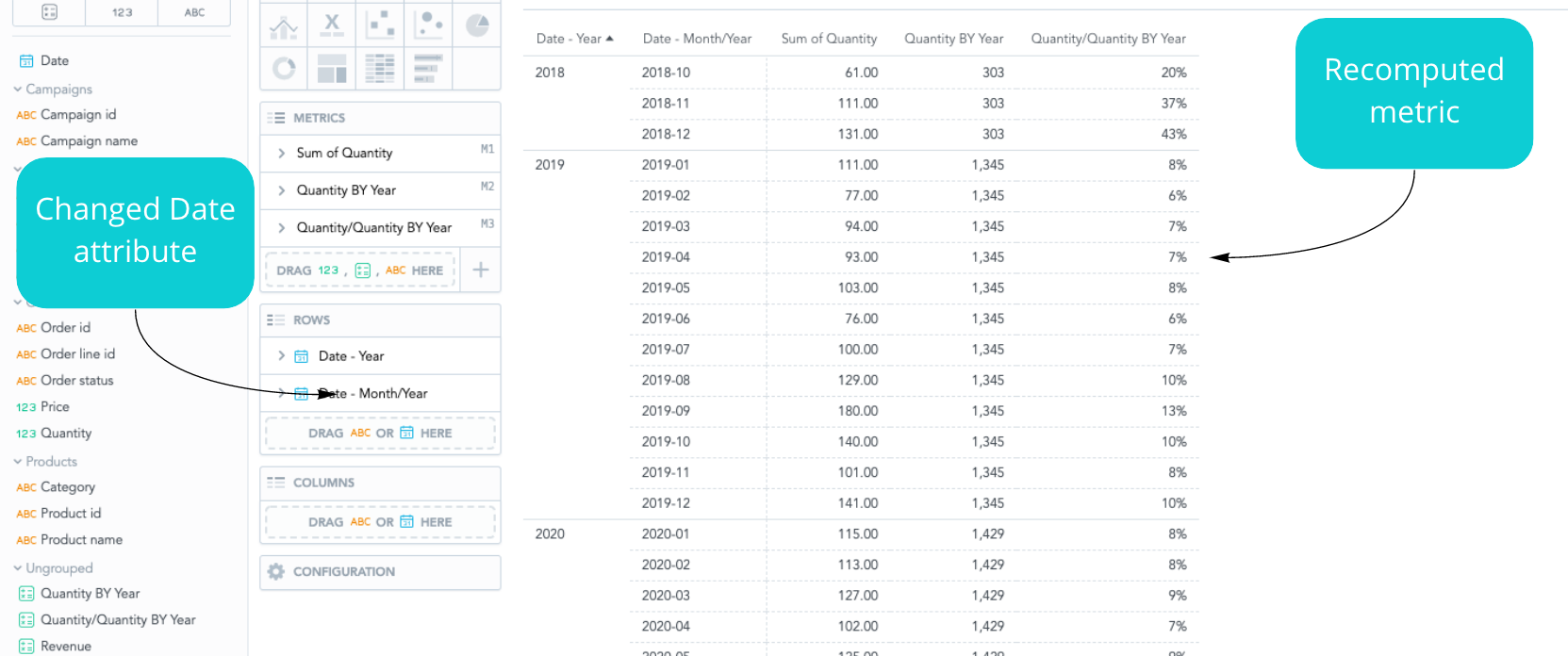

The analysis planner then prepares an analysis plan based on the priority of dimensions available in the semantic model. This plan defines which dimensions and combinations will be used to analyze the metric.
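One way to picture such a plan is as an ordered list of dimension combinations. The sketch below assumes each dimension has a numeric priority (higher means more important), analyzes single dimensions before pairs, and, within each size, ranks combinations by the sum of their priorities. Both the ordering rule and the `max_size` limit are illustrative assumptions, not the planner's actual logic.

```python
from itertools import combinations


def build_analysis_plan(
    priorities: dict[str, int], max_size: int = 2
) -> list[tuple[str, ...]]:
    """Return dimension combinations in the order they should be analyzed.

    Smaller combinations come first; within the same size, combinations
    with a higher total priority come first.
    """
    dims = sorted(priorities)  # Deterministic order before combining.
    plan: list[tuple[str, ...]] = []
    for size in range(1, max_size + 1):
        plan.extend(combinations(dims, size))
    return sorted(
        plan,
        key=lambda combo: (len(combo), -sum(priorities[d] for d in combo)),
    )
```

For the ice cream shop, priorities such as `{"supplier": 3, "region": 2, "flavor": 1}` yield `supplier`, `region`, and `flavor` first, followed by the pairs, starting with `("region", "supplier")`.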

Setting up the Work

The analysis planner then initiates analysis workers that execute the plan in parallel. Each worker uses the plan to query data and perform its assigned analyses. These analyses produce signals that the worker evaluates for potential drivers (what drives the change in the data).
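As an illustration of what a single worker might compute, the sketch below uses a simple contribution analysis: for one dimension, it compares each member's value between a baseline and the current period and flags members whose change explains a large share of the total change. Running the per-dimension tasks through `concurrent.futures.ThreadPoolExecutor` mimics the parallel workers. The data layout, the 30% `min_share` cutoff, and the choice of contribution analysis itself are assumptions, not a description of GoodData's internal analyses.

```python
from concurrent.futures import ThreadPoolExecutor


def find_drivers(
    dimension: str,
    baseline: dict[str, float],
    current: dict[str, float],
    min_share: float = 0.3,
) -> list[tuple[str, str, float]]:
    """Flag members whose change makes up at least `min_share` of the total change."""
    members = set(baseline) | set(current)
    deltas = {m: current.get(m, 0.0) - baseline.get(m, 0.0) for m in members}
    total = sum(deltas.values())
    if total == 0:
        return []  # No net change to explain.
    drivers = [
        (dimension, m, d) for m, d in deltas.items() if d / total >= min_share
    ]
    # Largest absolute contribution first.
    return sorted(drivers, key=lambda x: abs(x[2]), reverse=True)


def run_workers(
    tasks: dict[str, tuple[dict[str, float], dict[str, float]]],
    max_workers: int = 4,
) -> list[tuple[str, str, float]]:
    """Analyze each dimension in parallel and merge the resulting drivers."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(find_drivers, dim, base, cur)
            for dim, (base, cur) in tasks.items()
        ]
        results = [f.result() for f in futures]
    return [driver for result in results for driver in result]
```

Because the share is computed relative to the signed total, the same rule also works for declines: a negative member change divided by a negative total yields a positive share.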

Partial Summarization

If any drivers are found, they are passed to an LLM, which selects the most relevant ones based on past user feedback. It also generates a summary, provides recommendations, and checks for external events that could be related.
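The hand-off to the LLM can be as simple as a prompt that lists the candidate drivers together with what users previously said about them. The sketch below only assembles such a prompt; the feedback format (a mapping from a driver label to a short note such as `"useful"`) and the prompt wording are hypothetical, and the actual model call is left out because it depends on your LLM provider.

```python
def build_driver_prompt(
    metric: str,
    drivers: list[tuple[str, str, float]],
    feedback: dict[str, str],
    top_n: int = 3,
) -> str:
    """Assemble an LLM prompt asking for the most relevant drivers and a summary."""
    lines = [
        f"The metric '{metric}' changed unexpectedly.",
        "Candidate drivers (dimension = member: change):",
    ]
    for dimension, member, delta in drivers:
        label = f"{dimension} = {member}"
        note = feedback.get(label)
        suffix = f" [past user feedback: {note}]" if note else ""
        lines.append(f"- {label}: {delta:+.2f}{suffix}")
    lines.append(
        f"Select the {top_n} most relevant drivers, preferring ones users found useful, "
        "summarize the change, recommend next steps, and mention any external events "
        "that could be related."
    )
    return "\n".join(lines)
```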

External Drivers

The analysis workers process the plan starting from the most important dimension combinations and continue until all combinations are analyzed or the allocated analysis credits are used up. We implemented the credit system so that users can assign a specific number of credits to each KDA run and thereby control the duration and cost of the analysis and the LLM calls.
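The credit mechanism can be pictured as a budget that is spent while walking the plan in priority order. In this sketch, each plan item has a hypothetical credit cost (by default, the number of dimensions it combines), and processing stops once the next item no longer fits into the remaining budget. The cost model is an assumption for illustration only.

```python
from typing import Callable, Sequence


def run_with_credits(
    plan: Sequence[tuple[str, ...]],
    budget: int,
    cost: Callable[[tuple[str, ...]], int] = len,
) -> tuple[list[tuple[str, ...]], int]:
    """Process plan items in order until the plan ends or credits run out.

    Returns the processed items and the credits left over.
    """
    processed: list[tuple[str, ...]] = []
    remaining = budget
    for item in plan:
        price = cost(item)
        if price > remaining:
            break  # Budget exhausted: skip the remaining, lower-priority items.
        remaining -= price
        processed.append(item)  # In a real worker, the analysis would run here.
    return processed, remaining
```

Since the plan is already sorted by importance, stopping early means the credits are always spent on the most promising combinations first.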

Final Summarization

Once the analyses are completed, a post-processing step organizes the root causes into a hierarchical tree for easier exploration and understanding of nested drivers. The LLM then generates an executive summary that highlights the most important findings.
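A hierarchical view can be built by treating each root cause as a path of nested dimension members (for example, region, then ingredient) and merging the paths into a tree. The path representation and the impact values below are illustrative assumptions.

```python
from typing import Any


def build_driver_tree(drivers: list[tuple[list[str], float]]) -> dict[str, Any]:
    """Merge (path, impact) pairs into a nested tree.

    Each node stores the summed impact of everything beneath it under
    "impact" and its child nodes under "children".
    """
    root: dict[str, Any] = {"impact": 0.0, "children": {}}
    for path, impact in drivers:
        node = root
        node["impact"] += impact
        for step in path:
            node = node["children"].setdefault(step, {"impact": 0.0, "children": {}})
            node["impact"] += impact
    return root


def render(node: dict[str, Any], name: str = "total", depth: int = 0) -> list[str]:
    """Render the tree as indented lines, largest impact first."""
    lines = [f"{'  ' * depth}{name}: {node['impact']:+.1f}"]
    children = sorted(node["children"].items(), key=lambda kv: -abs(kv[1]["impact"]))
    for child_name, child in children:
        lines.extend(render(child, child_name, depth + 1))
    return lines
```

For example, drivers `(["EU", "milk"], 4.0)`, `(["EU", "chocolate"], 1.0)`, and `(["US", "milk"], 2.0)` render as a total of `+7.0`, with `EU` (`+5.0`) listed before `US` (`+2.0`).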

We are currently working on enhancing KDA using the semantic model of the metrics. This will help identify root causes based on combinations of underlying dimensions and related metrics. For example, a decline in ice cream margins may be caused by an increase in the price of milk.
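To make the margin example concrete: if a semantic model defines margin as price minus the sum of ingredient costs, the change in margin splits exactly into the changes of those inputs, with costs entering with a negative sign. The sketch below walks such a hypothetical metric definition recursively and reports the input metric with the largest contribution to the change. The metric names, the signed-children representation, and the figures are made up for illustration.

```python
# Each derived metric maps to its inputs and the sign with which they enter.
# This definition is hypothetical: margin = price - (milk + chocolate).
METRIC_TREE: dict[str, list[tuple[str, int]]] = {
    "margin": [("price", +1), ("cost", -1)],
    "cost": [("milk", +1), ("chocolate", +1)],
}


def leaf_contributions(
    metric: str,
    before: dict[str, float],
    after: dict[str, float],
    sign: int = 1,
) -> dict[str, float]:
    """Return each base metric's signed contribution to the change in `metric`."""
    if metric not in METRIC_TREE:
        return {metric: sign * (after[metric] - before[metric])}
    result: dict[str, float] = {}
    for child, child_sign in METRIC_TREE[metric]:
        result.update(leaf_contributions(child, before, after, sign * child_sign))
    return result


def main_root_cause(
    metric: str, before: dict[str, float], after: dict[str, float]
) -> tuple[str, float]:
    """Pick the base metric with the largest absolute contribution."""
    contributions = leaf_contributions(metric, before, after)
    return max(contributions.items(), key=lambda kv: abs(kv[1]))
```

With a milk cost rising from 1.0 to 1.5 while price and chocolate stay flat, the margin drops by 0.5 and `main_root_cause("margin", ...)` returns `("milk", -0.5)`. Because the decomposition is additive, the contributions always sum to the total change, which keeps the explanation easy to follow.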

A Sneak Peek Into the Future

Currently, there are three very promising technologies that we are experimenting with.

FlexConnect: Enhancing KDA with External APIs

Expanding automated KDA further, FlexConnect integrates external data through APIs, providing additional layers of context. Imagine an ice cream shop’s data extended with external market trends, consumer behavior analytics, or global commodity price indexes.

This integration provides insights beyond what your internal data alone can offer, which can make your decision-making more robust and future-proof. For instance, connecting to a weather API could help you anticipate ingredient price fluctuations based on the forecast impact on agriculture.
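Conceptually, this kind of enrichment joins internal records with an external series on a shared key, such as the date. The sketch below shows only that join in plain Python; it does not use the FlexConnect API, and the `dairy_index` series is a hypothetical stand-in for whatever an external provider would return.

```python
from datetime import date
from typing import Any


def enrich_with_external(
    internal: list[dict[str, Any]],
    external: dict[date, float],
    column: str,
) -> list[dict[str, Any]]:
    """Attach an external value to each internal row by matching its date.

    Rows without a matching external value get None, so gaps stay visible.
    The input rows are not modified; new dictionaries are returned.
    """
    return [{**row, column: external.get(row["date"])} for row in internal]
```

Once the external column sits next to internal metrics such as ingredient costs, KDA can treat it like any other candidate driver.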

Enhanced Anomaly Detection

We are integrating machine learning models that highlight significant outliers, improving the signal-to-noise ratio and accuracy of alerts. This means you can move beyond simple thresholds and change-based triggers: your alerts can take the seasonality of your data into account and adapt to it.
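The sketch below shows why seasonality matters, using a deliberately basic stand-in for the machine learning models mentioned above. Instead of one fixed threshold, it compares a new value with history from the same weekday and flags it only when it lies more than `k` sample standard deviations from that weekday's mean. This z-score rule, the weekday grouping, and the `k=3` default are assumptions, not the model GoodData uses.

```python
from collections import defaultdict
from datetime import date
from statistics import mean, stdev


def is_seasonal_anomaly(
    history: list[tuple[date, float]],
    day: date,
    value: float,
    k: float = 3.0,
) -> bool:
    """Flag `value` if it deviates from same-weekday history by more than k sigma."""
    by_weekday: dict[int, list[float]] = defaultdict(list)
    for d, v in history:
        by_weekday[d.weekday()].append(v)
    peers = by_weekday[day.weekday()]
    if len(peers) < 2:
        return False  # Not enough history for this weekday to judge.
    mu, sigma = mean(peers), stdev(peers)
    if sigma == 0:
        return value != mu
    return abs(value - mu) > k * sigma
```

If Saturday sales are routinely around 200 and Monday sales around 100, a value of 205 is normal on a Saturday but anomalous on a Monday, a distinction a single fixed threshold cannot make.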

Chatbot Integration

We are currently expanding the capabilities of our AI chatbot, which, of course, include Key Driver Analysis. Soon, the chatbot will be able to help you set up alerts that automatically detect outliers and notify you about them. In the future, it may also recommend next steps based on KDA.

The output could look something like this:

Practical Application: Ice Cream Shop Example

To illustrate, assume Anomaly Detection flags a price deviation. Immediately:

- An automated KDA process initiates, revealing milk shortages as the primary driver.

- Simultaneously, FlexConnect fetches external market data, showing a global dairy shortage due to weather conditions.

- An AI agent notifies you via instant messaging (or e-mail), offering alternative suppliers or recommending price adjustments based on historical data.

- You can then chat with this agent to uncover even more information about the anomaly (or ask it to use additional data). The agent has the full context, as it was briefed before you even knew about the anomaly.

And while this might sound like a distant future, we are already experimenting with each of these! As each feature nears deployment, we’ll share a proof of concept (PoC) with you.

Want to learn more?

If you’d like to dig deeper into automation in analytics, check out our article on how to effectively utilize Scheduled Exports & Data Exports. It explores how to use automation to set up alerts correctly, so that they are useful and not simply a distraction.

Stay tuned if you’re interested in learning more about KDA, as we’ll soon follow up with a more in-depth article while also exploring its practical application in analytics.

Have questions or want to implement automated KDA in your workflow? Reach out — we're here to help!