Hyper-personalized analytics is coming

Two years ago, I interviewed a candidate for a UX designer position for our web and marketing team. During the interview, he brought up hyper-personalization as the next big thing in digital marketing. It sounded interesting, but I didn’t give it much thought at the time. Now this trend is coming to analytics, and the current tools aren’t ready for it.

Most analytics solutions are still stuck in the pattern of one standardized set of dashboards to rule all consumers. To give an impression of personalisation, these standard dashboards are often accompanied by dozens of filters. This, however, only adds to their complexity, making them incomprehensible at first glance.

Put politely, the current personalisation experience in analytics is suboptimal. So how do we get out of this?

Moonshot: Dashboards are dead

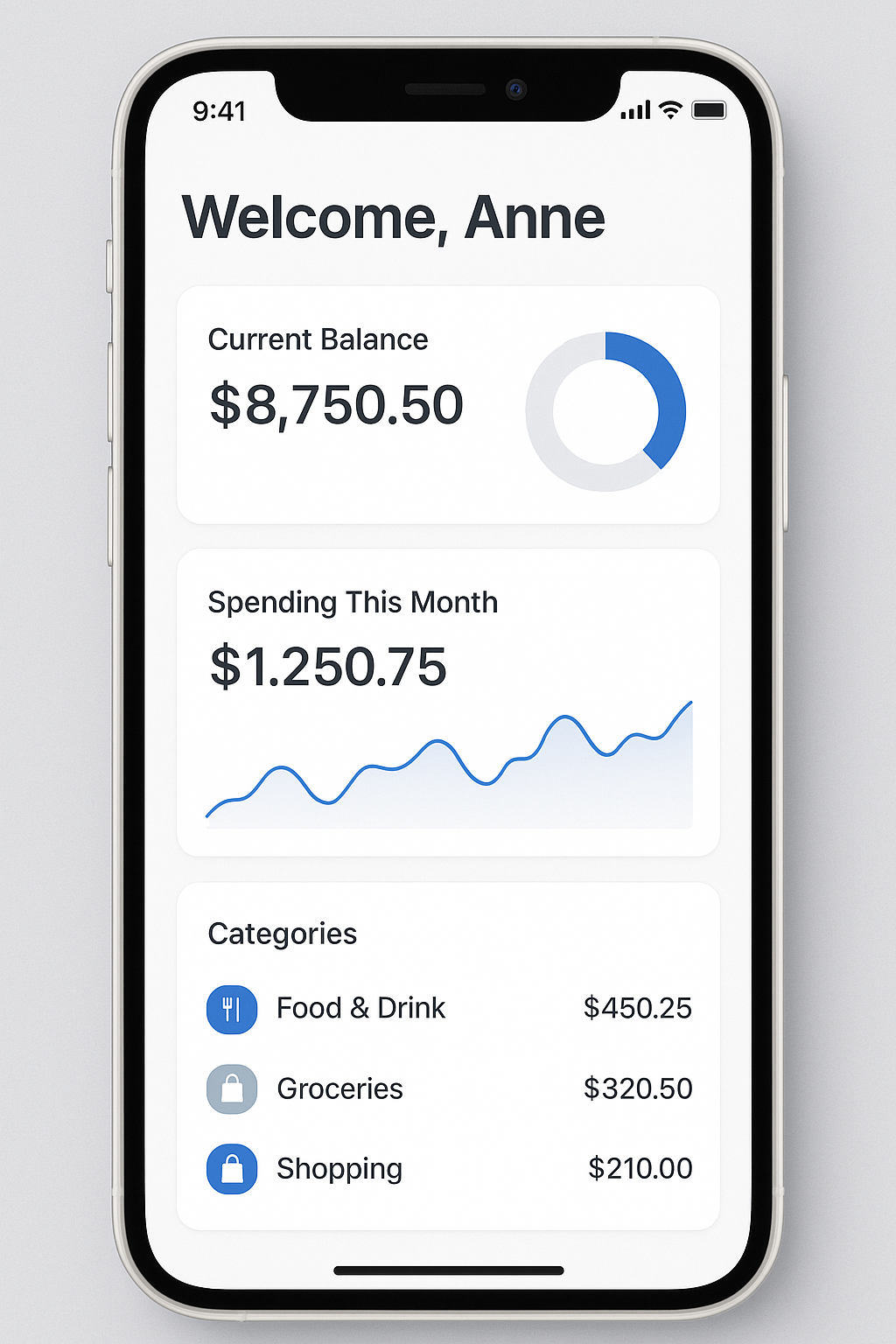

I know we have been hearing about the dashboards being dead at least since 2020, yet they are still here with us. However, if hyper-personalization is really coming, dashboards are not the ideal interface for it. They should evolve into personal spaces or homepages.

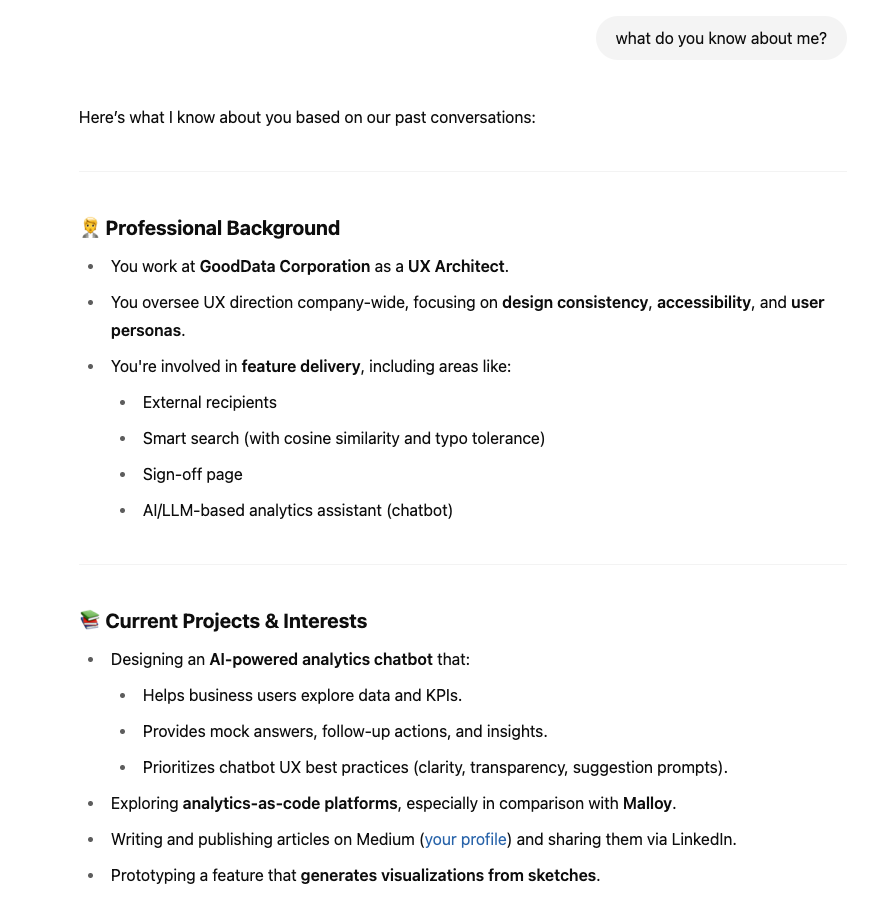

Another option that matches hyper-personalization nicely is AI chatbots and conversational patterns in general. Surprisingly, chatbots already know a lot about us; just ask one yourself.

Now imagine connecting the information a chatbot knows about you to your data needs.

However, I haven’t seen a single analytics platform that would be able to deliver such an experience. Even tech giants like Apple are still struggling to adopt and integrate new AI-driven features. And building this from scratch would require a tremendous amount of work.

Realistic goal: Personalized dashboards

As I said at the beginning, hyper-personalization is a tempting goal. However, most analytics solutions have close to zero personalized content. This is not because data analysts are villains who want their dashboard users to suffer; they just don’t have enough time and resources to manage personalized versions of their dashboards for everyone.

I believe AI can help us personalize analytics.

How exactly can AI help us here? In my article *Can your BI tool import sketched dashboards?*, I outlined how GoodData professional services work. They look at the data and cooperate with our customers to deliver the best possible analytics for their desired use cases. AI can help us bootstrap this process.

Let’s look at three steps where AI can help us build personalized dashboards.

1. Propose audience based on the data

While conventional wisdom says to start with the audience and their use cases before building data around them, reality often flips this approach: we start with the data and then look for the right audience. This is precisely where AI can help us.

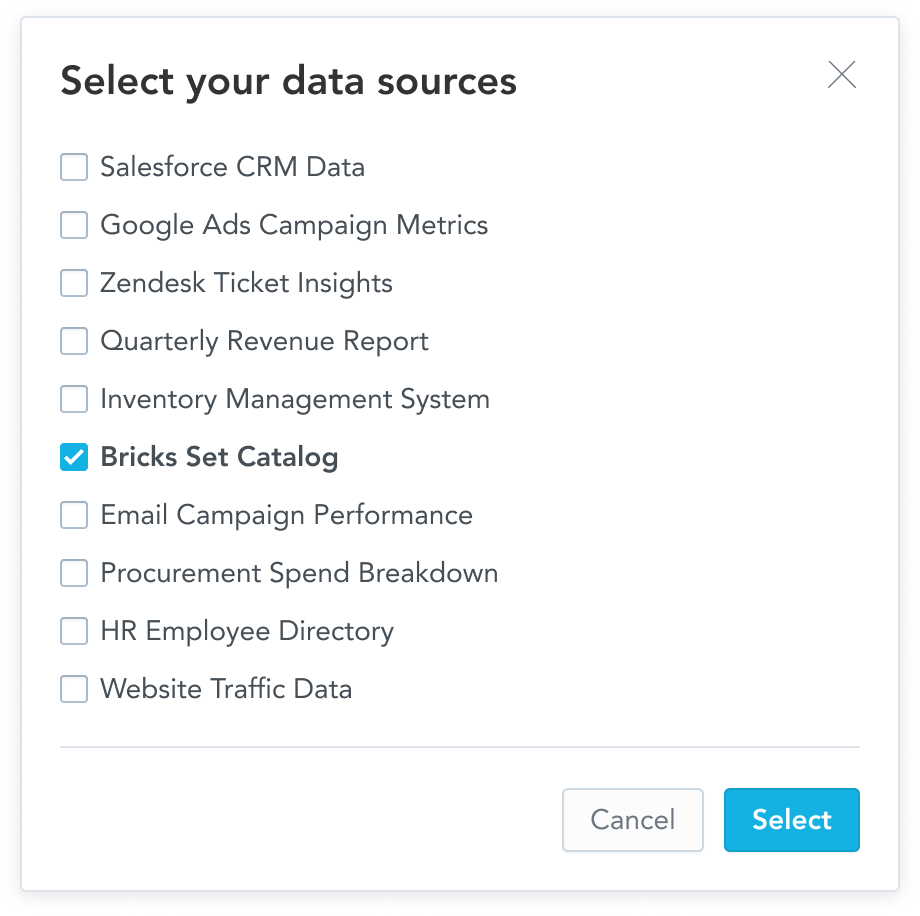

Here is a demo explaining the idea. First, I select the data sources, and the LLM analyzes their metadata to identify the best possible audience and use cases for them.

Would you dare connect your database to an LLM directly? How would your security and compliance department feel about it? Doing this through GoodData has one big advantage: you don’t connect your data to the LLM, just the table and column names.
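To make the "metadata only" idea concrete, here is a minimal sketch that uses an in-memory SQLite database as a stand-in for a real data source. It is not how GoodData does it internally; the helper names `extract_schema_metadata` and `build_audience_prompt`, as well as the prompt wording, are assumptions made for illustration. The point is that only table and column names end up in the prompt, never the rows.

```python
import sqlite3


def extract_schema_metadata(conn: sqlite3.Connection) -> dict[str, list[str]]:
    """Return {table_name: [column_name, ...]} without reading any rows."""
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    metadata = {}
    for table in tables:
        # PRAGMA table_info yields one row per column; index 1 is the column name.
        columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
        metadata[table] = [column[1] for column in columns]
    return metadata


def build_audience_prompt(metadata: dict[str, list[str]]) -> str:
    """Build a prompt (hypothetical wording) that lists only schema names."""
    lines = ["Propose personas and use cases for the following tables:"]
    for table, columns in metadata.items():
        lines.append(f"- {table}: {', '.join(columns)}")
    return "\n".join(lines)


# Demo data: the row values below must never show up in the prompt.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer_id INTEGER, amount REAL)")
conn.execute("INSERT INTO orders VALUES (1, 42, 99.5)")

metadata = extract_schema_metadata(conn)
prompt = build_audience_prompt(metadata)
print(prompt)
```

The resulting prompt text is what you would hand to the LLM; the `99.5` stored in the table stays in the database.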

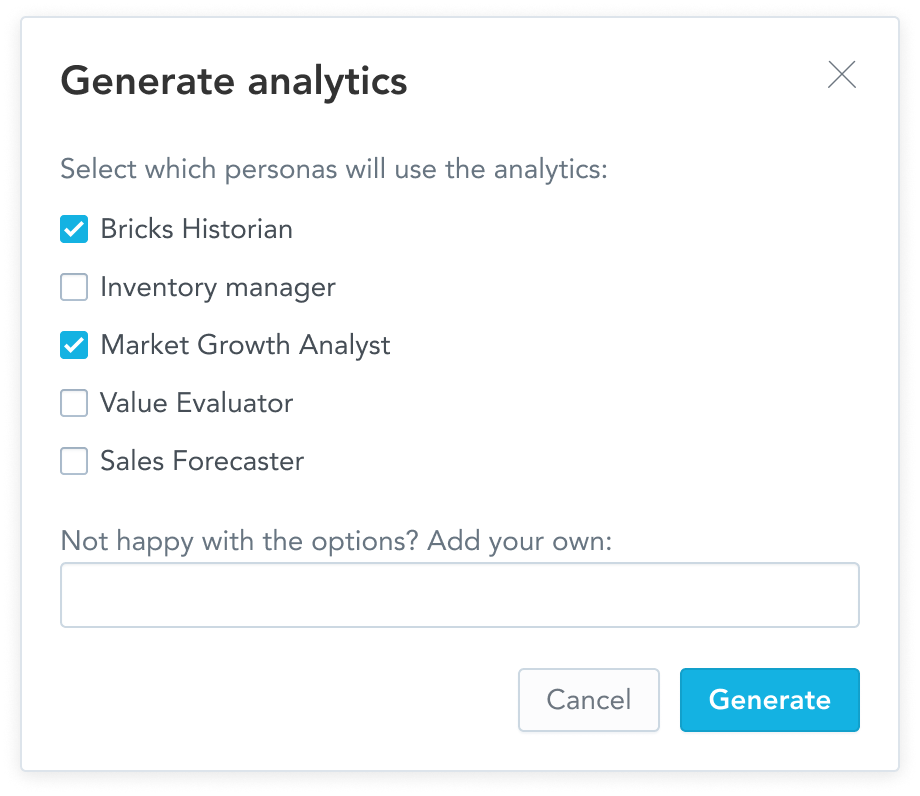

In this step, AI creates a list of personas and their use cases based on the data, which we will use in the next step, where we create the model.
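As a rough sketch of what the output of this step could look like in code, the snippet below parses a JSON reply into persona objects. The `title` key matches the `persona['title']` lookup used later in the article; the `use_cases` key, the `Persona` class, and the `parse_personas` helper are assumptions for illustration, not the format used in the repository.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Persona:
    title: str
    use_cases: list[str] = field(default_factory=list)


def parse_personas(reply: str) -> list[Persona]:
    """Turn a JSON array returned by the LLM into Persona objects."""
    personas = []
    for item in json.loads(reply):
        title = str(item.get("title", "")).strip()
        if not title:
            continue  # skip entries the model left untitled
        personas.append(Persona(title=title, use_cases=list(item.get("use_cases", []))))
    return personas


# A made-up reply; the second entry has no title and is dropped.
example_reply = (
    '[{"title": "Sales Manager", "use_cases": ["Track revenue by region"]},'
    ' {"title": ""}]'
)
personas = parse_personas(example_reply)
print(personas)
```

Validating the reply before passing it on keeps a malformed persona from propagating into the model and dashboard steps.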

2. Generate audience-based model

Now with the proposed audience, we are ready to generate a logical data model. A robust model is the very heart of a modern AI-ready analytics platform. And it is also the part where data analysts and analytics engineers spend most of their time. Some tools allow you to generate the model as a 1:1 copy from the database tables.

How well can such a copy of the physical data structures serve your analytics needs? Usually not very well. The only exception is if you already prepare your analytics model directly in the database. In that case, though, you spend most of your time creating the very same logical model, just in a different tool.

AI can bootstrap the model creation by taking the audience and their use cases into account. Any information you can provide to the AI can help your model become a better fit.

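```python
import os
import shutil

# Ask the AI for a logical data model tailored to the proposed personas
datasets = ask_ai_to_create_model(tables, personas)

# Start from a clean datasets directory on every run
datasets_directory = os.path.join('analytics', 'datasets')
if os.path.exists(datasets_directory):
    shutil.rmtree(datasets_directory)
os.makedirs(datasets_directory, exist_ok=True)

# Create each generated dataset
for dataset in datasets:
    create_dataset(str(dataset))
```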

3. Generate audience-oriented dashboards

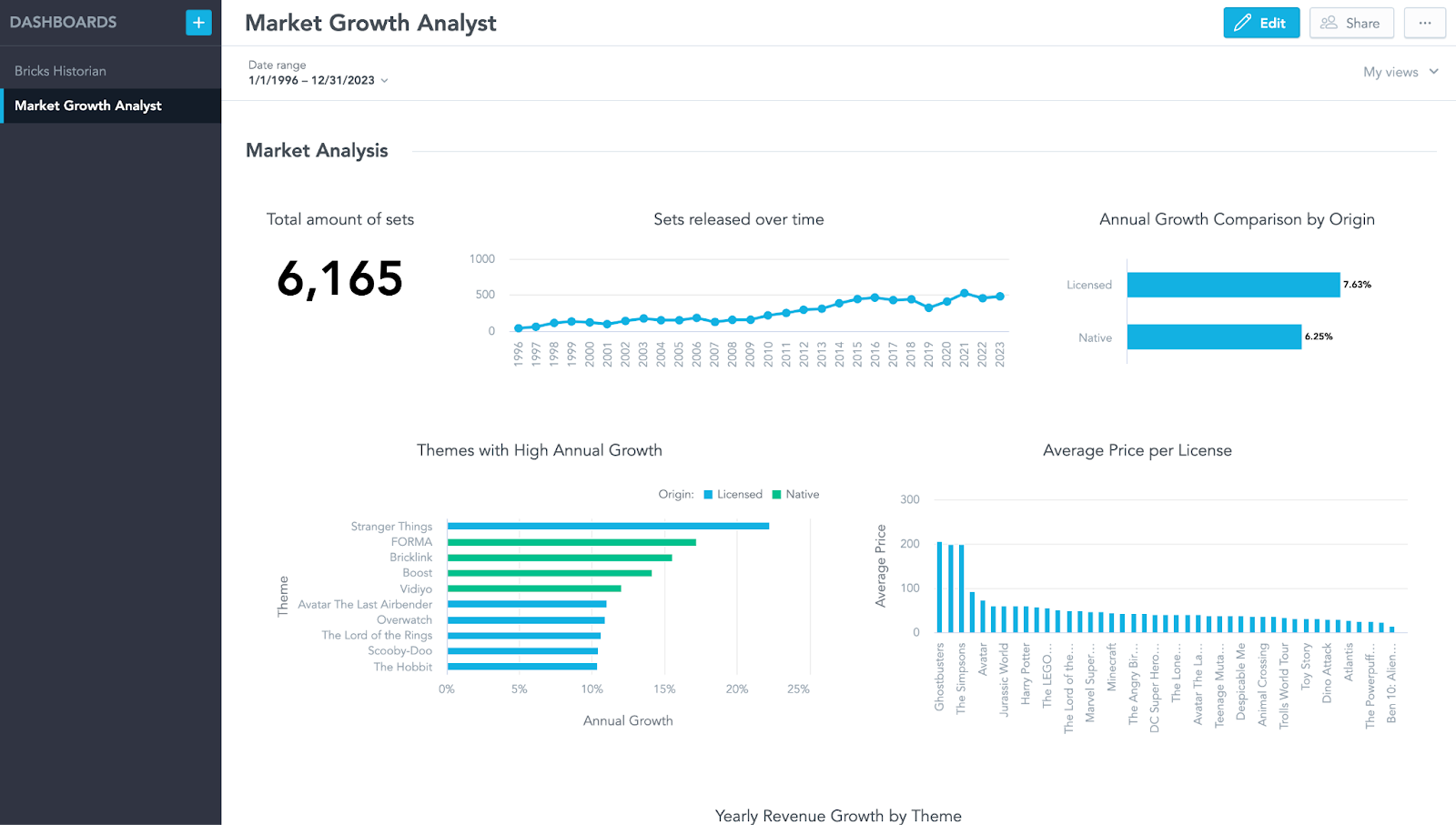

With the logical data model and audience in place, we can go a bit further and generate personalised dashboards and visualizations.

```python
personas_with_vizs = ask_ai_to_create_visualizations(list_of_personas)

for persona in personas_with_vizs:
    # Create a directory for each persona's title
    directory = os.path.join(visualisations_directory, persona['title'])
    os.makedirs(directory, exist_ok=True)

    # Iterate over the visualizations for each persona
    for viz in persona['visualisations']:
        create_visualization(str(viz), directory, False)

dashboards = ask_ai_to_create_dashboards(personas_with_vizs)

for dashboard in dashboards:
    create_dashboard(str(dashboard), False)

deploy_analytics()
```

Not super happy about the outcome? No problem, all the assets are clearly separated. Feel free to update or redo any of the steps manually or, even better, let the AI try again with more specific instructions and more information.
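Because each persona gets its own directory, a single persona can be regenerated without touching the others. The sketch below shows that idea with a temporary directory; `reset_persona_directory`, the persona titles, and the `old.yaml` file name are hypothetical and only stand in for whatever the real scripts write.

```python
import shutil
import tempfile
from pathlib import Path


def reset_persona_directory(visualisations_directory: Path, persona_title: str) -> Path:
    """Empty one persona's directory so its visualizations can be regenerated."""
    target = visualisations_directory / persona_title
    if target.exists():
        shutil.rmtree(target)
    target.mkdir(parents=True)
    return target


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp) / "visualisations"
    # Simulate a previous run that produced one file per persona.
    for title in ("Sales Manager", "CFO"):
        (root / title).mkdir(parents=True)
        (root / title / "old.yaml").write_text("placeholder")

    # Only the CFO assets are wiped; the Sales Manager ones stay untouched.
    reset_persona_directory(root, "CFO")
    remaining = sorted(p.relative_to(root).as_posix() for p in root.rglob("*"))

print(remaining)
```

After the reset, you would rerun only the visualization step for that persona, ideally with a more specific prompt.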

Try it yourself

In the repository, you can find the whole code, including large parts of the prompts. It is very simple, yet elegant, because the chatbot does most of the heavy lifting.

You might ask yourself why you would even want to use GoodData if even light prompting can lead to such results. The reason is exactly that: you only need light prompts because of our very AI-friendly analytics-as-code approach.

As you can see in the presented code, we use OpenAI’s GPT-4o for the first step, which is the only step where you want the AI to be creative. For the remaining steps we use o3-mini, because it is basically generating code, as in GoodData you can represent anything as code.
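One simple way to express this split is a lookup table from pipeline step to model. This is only a sketch: the step names and the `model_for` helper are hypothetical, and the model identifiers are written the way the article names them.

```python
# Creative step first, code-generating steps afterwards (step names are assumptions).
MODEL_BY_STEP = {
    "propose_audience": "gpt-4o",
    "generate_model": "o3-mini",
    "generate_visualizations": "o3-mini",
    "generate_dashboards": "o3-mini",
}


def model_for(step: str) -> str:
    """Return the model configured for a pipeline step, failing loudly on typos."""
    try:
        return MODEL_BY_STEP[step]
    except KeyError:
        raise ValueError(f"Unknown pipeline step: {step!r}") from None


print(model_for("propose_audience"))
print(model_for("generate_dashboards"))
```

Keeping the mapping in one place makes it easy to swap a model for a single step without touching the rest of the script.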

*Disclaimer: In the code, we omitted parts that mimic the chatbot of GoodData, so it will not work as seamlessly as in our testing. We also omitted parts of the prompts, and shortened them for demonstration purposes.*

Conclusion

Although the future is bright and hyper-personalized analytics is coming soon™ through conversational interfaces or even AI workflows, there is something we can do about personalisation even now. AI can help us generate personalized analytics, so data analysts don’t need to start from scratch.