Data Dream Team: Meltano, dbt, Python and GoodData for VS Code

Written by Andrii Chumak |

Recently I’ve created a dashboard about Pluto Day and in this article I would like to walk you through the technical details of it. The PoC was built on top of two of our Analytics as Code tools: GoodData for VS Code, Python SDK In this article you’ll read about my PoC outcomes and will have a glimpse on what lies ahead for GoodData’s Analytics as Code.

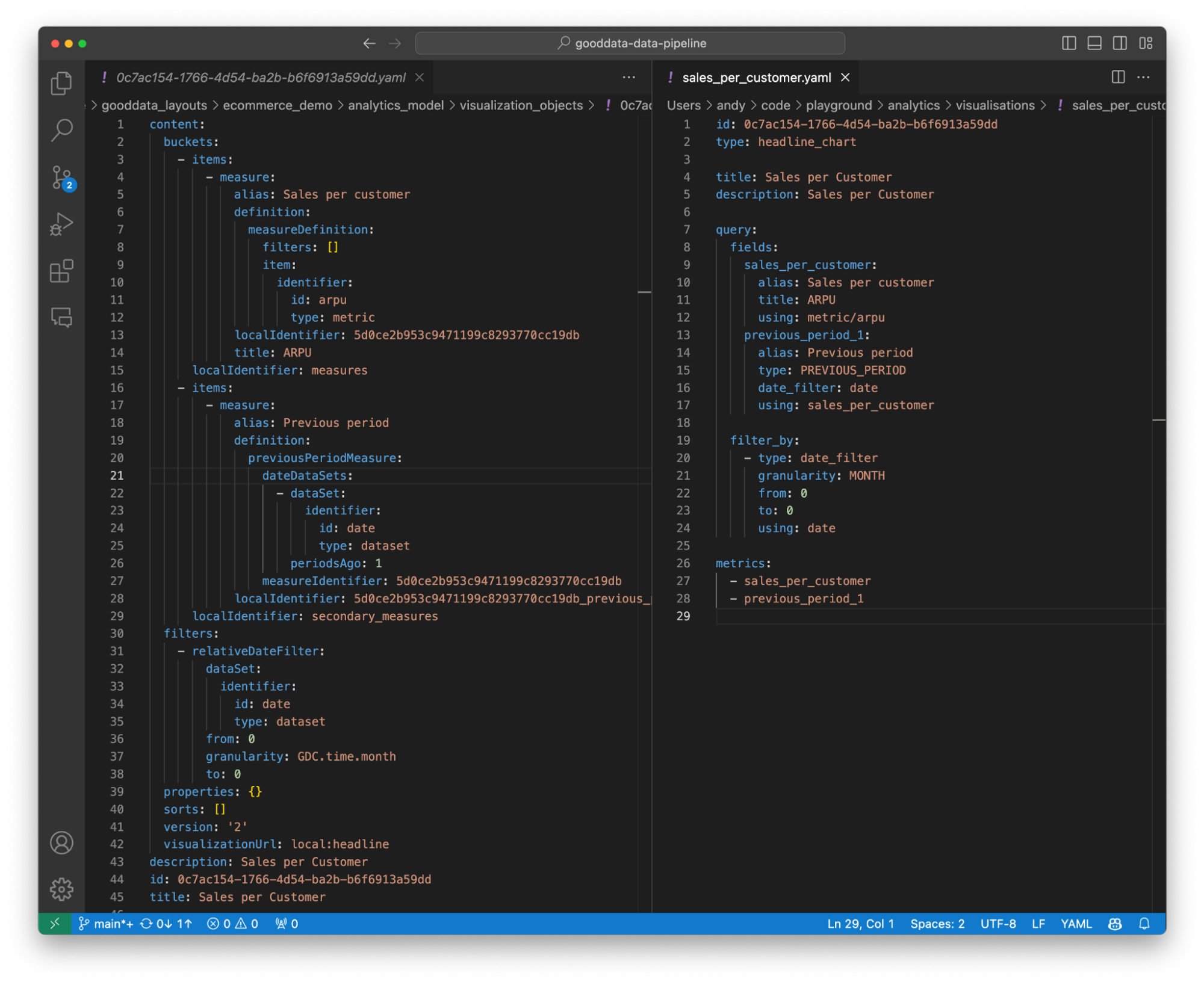

GoodData for VS Code has gone a long way since our first beta release last year. You can now define a complete analytical layer of your data product - from logical data model, to metrics, to visualizations, and even dashboards - all in code, with versioning, autocomplete, real-time static validation, and preview.

GoodData for VS Code is not without its limitations, though. It covers only the analytical layer of a data product , overlooking areas like user management, workspace hierarchy, data source definition, and data filters. And that’s where GoodData Python SDK comes in to save the day.

One might wonder, why not use only Python SDK, as it has Declarative (a.k.a. Layout) API, after all? That API makes it super easy to move analytics between workspaces, propagate users dynamically and generally handle your project lifecycle. However, it does not help readability, let alone writability of declarative YAML files.

On top of developer convenience, GoodData for VS Code runs static validation for the whole workspace before each deployment. This ensures that all analytical objects, such as your latest fancy chart, are checked for integrity, preventing issues like referencing a metric your colleague might have removed in another git branch. This process ensures your analytics are always accurate and dependable - ensuring your users can trust the data!

Project setup

First, let’s set the scene. I was looking for some juicy data I could use to test our end-to-end data pipeline with GoodData for VS Code. Then I remembered seeing the NASA JPL database on Solar System bodies. Luckily, Pluto Day was coming up on February 18th, so the topic choice was a no-brainer. You can read an article I’ve prepared about Pluto and our Solar System.

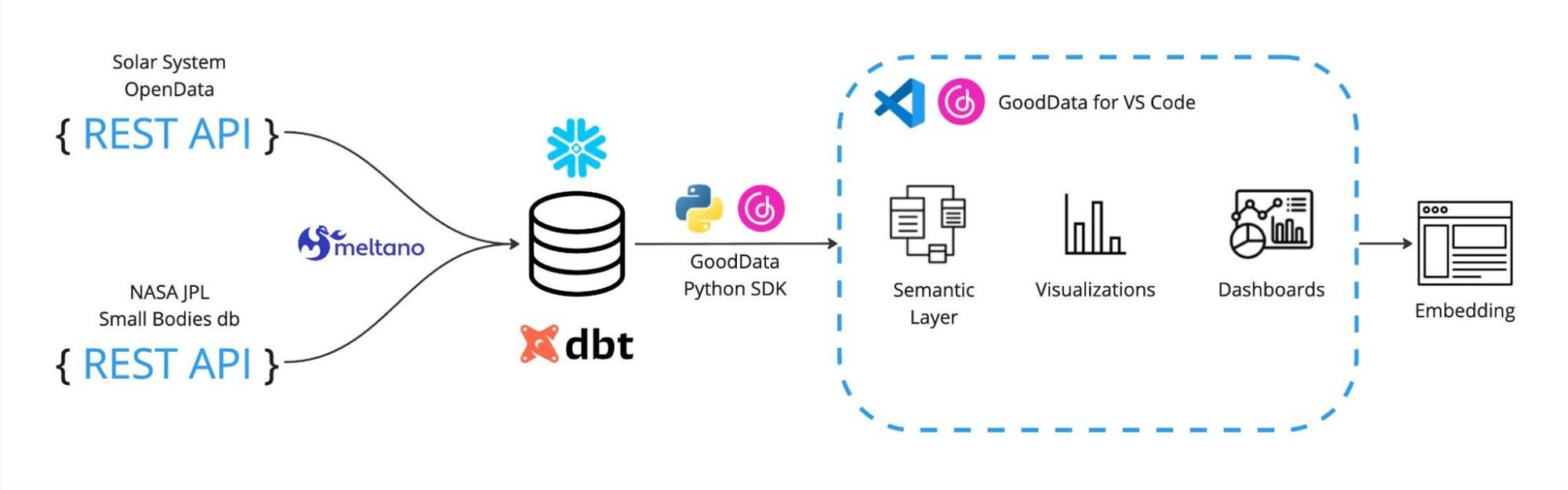

As a backbone for the project I’m using Meltano. It simplifies data fetching from REST API and executing subsequent transformations with dbt. GoodData for VS Code is used to define visualizations and dashboards, while Python SDK helps with data source provisioning. Finally, the public demo is a simple static HTML page, with dashboard embedded as GoodData’s WebComponents.

Data ingestion

I’ve used two publicly available REST APIs to fetch the data.

For large celestial bodies (the Sun, planets, dwarf planets and moons), I tapped into The Solar System OpenData website. Its REST API is well-structured and fetching the data was a matter of configuring `tap-rest-api-msdk` in `meltano.yaml` and providing a JSON Schema.

For smaller celestial bodies, I’ve used the aforementioned NASA JPL database. The setup was more complicated here; the data provided by the API is not Meltano-friendly. I had to write a custom tap to transform the received data into a more digestible format.

// Data from API

{

headers: ["id", "name", "mass", ...],

data: [

["1", "Sun", "1.9891e30", ...],

["2", "Earth", "5.972e24", ...],

...

]

}

// Data format I can put to database

[

{id: "1", name: "Sun", mass: "1.9891e30", ...},

{id: "2", name: "Earth", mass: "5.972e24", ...},

]

I’m also only loading a small subset of NASA's data, approximately 136k records out of 1.3M. The subset meets my needs and includes only the largest asteroids and comets.

Both data sources are saved into the same Snowflake schema, so the whole data ingestion boils down to a single command:

meltano run tap-rest-api-msdk tap-sbdb target-snowflake

Data transformation

Transformation is done with dbt.

Large bodies transformation consist of:

- Converting the data types, e.g. parsing the date field.

- Converting to the same units, e.g. mass in kg, semi-major orbital axes in astronomical units etc.

- Filtering the data to include only stars, planets, dwarf planets, and moons.

- Grouping the objects into planetary systems, i.e. grouping together planets and their moons by assigning the same attribute value to the

planetary_systemfield.

select

l1.ID,

l1.BODYTYPE as TYPE,

l1.ENGLISHNAME as NAME,

l1.GRAVITY,

l1.DENSITY,

l1.AVGTEMP as AVG_TEMP,

coalesce(

try_to_date(l1.DISCOVERYDATE, 'DD/MM/YYYY'),

try_to_date(l1.DISCOVERYDATE, 'YYYY'),

try_to_date(l1.DISCOVERYDATE, '??/MM/YYYY')

) as DISCOVERY_DATE,

l1.DISCOVEREDBY as DISCOVERED_BY,

l1.MEANRADIUS as MEAN_RADIUS,

l1.SEMIMAJORAXIS * 6.68459e-9 as SEMIMAJOR_ORBIT,

coalesce(l1.MASS_MASSVALUE, 0) * pow(10, coalesce(l1.MASS_MASSEXPONENT, 6) - 6) as MASS,

coalesce(l1.VOL_VOLVALUE, 0) * pow(10, coalesce(l1.VOL_VOLEXPONENT, 0)) as VOLUME,

coalesce(l2.ENGLISHNAME, l1.ENGLISHNAME) as PLANETARY_SYSTEM

FROM ANDY.PLUTO.BODIES AS l1

LEFT JOIN ANDY.PLUTO.BODIES AS l2 ON l1.AROUNDPLANET_PLANET = l2.id

WHERE l1.BODYTYPE IN ('Star', 'Planet', 'Dwarf Planet', 'Moon')

For small bodies the transformation is similar, but I also grouped the objects by their distance from the Sun, making it easier to plot on a chart.

select

ID,

trim(coalesce(NAME, FULL_NAME)) as NAME,

iff(NEO = 'Y', true, false) as NEO,

iff(PHA = 'Y', true, false) as PHA,

try_to_decimal(GM, 10, 5) / SQUARE(DIAMETER / 2) * 1000 as G,

try_to_decimal(DIAMETER, 10, 5) / 2 as MEAN_RADIUS,

try_to_decimal(A, 10, 5) as SEMIMAJOR_ORBIT,

iff(SUBSTR(KIND, 1, 1) = 'a', 'Asteroid', 'Comet') as TYPE,

CASE

WHEN SEMIMAJOR_ORBIT < 2.0 THEN '0.0-2.0'

WHEN SEMIMAJOR_ORBIT < 2.1 THEN '2.0-2.1'

WHEN SEMIMAJOR_ORBIT < 2.2 THEN '2.1-2.2'

WHEN SEMIMAJOR_ORBIT < 2.3 THEN '2.2-2.3'

WHEN SEMIMAJOR_ORBIT < 2.4 THEN '2.3-2.4'

WHEN SEMIMAJOR_ORBIT < 2.5 THEN '2.4-2.5'

WHEN SEMIMAJOR_ORBIT < 2.6 THEN '2.5-2.6'

WHEN SEMIMAJOR_ORBIT < 2.7 THEN '2.6-2.7'

WHEN SEMIMAJOR_ORBIT < 2.8 THEN '2.7-2.8'

WHEN SEMIMAJOR_ORBIT < 2.9 THEN '2.8-2.9'

WHEN SEMIMAJOR_ORBIT < 3.0 THEN '2.9-3.0'

WHEN SEMIMAJOR_ORBIT < 3.1 THEN '3.0-3.1'

WHEN SEMIMAJOR_ORBIT < 3.2 THEN '3.1-3.2'

WHEN SEMIMAJOR_ORBIT < 3.3 THEN '3.2-3.3'

WHEN SEMIMAJOR_ORBIT < 3.4 THEN '3.3-3.4'

WHEN SEMIMAJOR_ORBIT < 3.5 THEN '3.4-3.5'

ELSE '3.5+'

END AS DISTANCE_SPREAD

from ANDY.PLUTO.SBDB

Some of these transformations could easily be achieved with MAQL - GoodData’s metric defining language. But since we are going to materialize transformed data into a separate table in the database, we may as well do it with dbt.

Once again, running transformation takes a single command:

meltano --environment dev run dbt-snowflake:run

Analytical layer

For starters, the GoodData server needs to know how to connect to the database. This set up is straightforward with the Python SDK - simply read Meltano’s connection config and create a corresponding data source on the server. This task must be executed whenever there’s an update in the database setup.

# Parse args

parser = ArgumentParser()

parser.add_argument('-p', '--profile', help='Profile name', default='dev')

args = parser.parse_args()

# Load GD config and instantiate SDK

config = GDConfig.load(Path('gooddata.yaml'), args.profile)

sdk = GoodDataSdk.create(config.host, config.token)

# Load datasource details

snowflake_password = get_variable('.env', 'TARGET_SNOWFLAKE_PASSWORD')

with open('meltano.yml') as f:

meltano_config = yaml.safe_load(f.read())

snowflake_config = next(x for x in meltano_config['plugins']['loaders'] if x['name'] == 'target-snowflake')['config']

# Deploy the datasource

data_source = CatalogDataSourceSnowflake(

# Fill in properties from snowflake_config and config

)

sdk.catalog_data_source.create_or_update_data_source(data_source)

Next, we need to notify the GoodData server when there is new data, so it can clear cache and load new data for users. This can also be done with Python SDK.

# Parse args

parser = ArgumentParser()

parser.add_argument('-p', '--profile', help='Profile name', default='dev')

args = parser.parse_args()

# Load GD config and instantiate SDK

config = GDConfig.load(Path('gooddata.yaml'), args.profile)

sdk = GoodDataSdk.create(config.host, config.token)

# Clear the cache

sdk.catalog_data_source.register_upload_notification(config.data_source_id)

Finally, we need to define the analytical layer: logical data model, metrics, insights and dashboards - all done with GoodData for VS Code. For example, here is how a simple donut chart might look like in our syntax:

id: neo

type: donut_chart

title: NEO (Near Earth Objects)

query:

fields:

small_body_count:

alias: Small Body Count

title: Count of Small Body

aggregation: COUNT

using: attribute/small_body

neo: label/small_body_neo

metrics:

- field: small_body_count

format: "#,##0"

view_by:

- neo

In the real world, you might want to leverage the Python SDK for all the heavy lifting. Imagine you’d want to distribute analytics to several customers with data filters applied per tenant. Then you’d start with the GoodData for VS Code as a template project to develop the core of your analytics and personalize it per customer with Python SDK as part of your CI/CD pipeline.

We’ve recently released a new Python package - gooddata-dbt - and it can do even more for you. For example, it automatically generates logical data models based on dbt models. We’ll definitely look into interoperability between gooddata-dbt and GoodData for VS Code in the future, but for now feel free to check out an ultimate data pipeline blueprint from my colleague Jan Soubusta.

To deploy the analytics you’ll need to run a few commands:

# To create or update the data source

python ./scripts/deploy.py --profile dev

# To clear DB cache

python ./scripts/clear_cache.py --profile dev

# To deploy analytics

gd deploy -- profile dev

Public demo

The demo is available publicly, for everyone to play with. In general, GoodData does not support public dashboards without any authentication. As a workaround, I’m using a proxy that opens a subset of our REST API to the public and also does some caching and rate limiting along the way to avoid potential abuse.

I’m using GitHub pages, to host the web page. It’s by far the easiest way to host any static content on the web - just put what you need in the `/docs` folder of your repo and enable the hosting in the repo settings.

To embed my dashboards into a static HTML page I’m using GoodData’s WebComponents.

<!-- Load the script with components -->

<script type="module" src="https://public-examples.gooddata.com/components/pluto.js?auth=sso"></script>

<!-- Embed the dashboard -->

<gd-dashboard dashboard="pluto" readonly></gd-dashboard>

Just two lines of code. Pretty neat, right?

The pipeline

With my code on GitHub, it’s only natural to use GitHub Actions for CI/CD and data pipelines.

It’s generally not a great idea to combine data pipeline and CI/CD deployment into a single pipeline - it can be long and resource intensive.. Ideally, you want to run it as a response to the changes in the ETL (Extract, Transform, Load) configuration or data in the data source.

The CI/CD pipeline should run whenever there are changes in the analytics-related files. In the real world, it’s certainly possible to have a smart pipeline that runs different tasks depending on which folder was updated, but for sake of simplicity I’ve configured a set of tasks with manual triggers.

What’s next?

While it’s already possible to build a solid pipeline for an Analytics as Code project using GoodData tools, we can still do a lot more to simplify the data pipeline setup and maintenance.

Here are a few improvements we could potentially do:

- Use

python-dbtlibrary to automatically update logical data models whenever dbt models are updated. - Build a Meltano plugin, making it easier to invoke GoodData deployment as a part of your data pipeline.

- Build an integration between Python SDK and GoodData for VS Code, enabling Python SDK to load and manipulate the analytical project directly from YAML files.

What do you think would be the best next step?

Conclusion

As always, I’m eager to hear what you think about the direction we are taking with Analytics as Code. Feel free to reach out to me on GoodData community Slack channel.

Want to try it out for yourself? Here are a few useful links to get you started:

- GitHub repository with this project and a README to get you started.

- A live demo with Pluto and Solar System dashboards.

- An article about Pluto.

- You’ll need a GoodData account to run the project. Consider using our free trial.

Written by Andrii Chumak |

Subscribe to our newsletter

Get your dose of interesting facts on analytics in your inbox every month.

Subscribe