Integrate with CI/CD Pipeline

It is good practice to apply software engineering best practices to analytics and treat analytics as code. With the GoodData Python SDK and GoodData's API-first approach, this is simple.

In this article, you will learn how to build a simple CI/CD pipeline with GitHub Actions that provisions analytics from one workspace to another with just a few lines of code.

Are you interested in analytics automation with CI/CD? Check out the article on how to automate data analytics using CI/CD.

Steps:

To complete this tutorial, you need a GitHub repository in which your pipeline will run.

Prepare a Python script that will run in a GitHub Action.

The script creates a new workspace, `production`, and copies the analytics from the `demo` workspace into the `production` workspace.

```python
from gooddata_sdk import GoodDataSdk, CatalogWorkspace

# GoodData host in the form of a URI, e.g. "http://localhost:3000"
host = "http://localhost:3000"
# GoodData API token
token = "<API_TOKEN>"
demo_workspace_id = "demo"
production_workspace_id = "production"

sdk = GoodDataSdk.create(host, token)

# Create the production workspace (ID and name are both "production"),
# or update it if it already exists
sdk.catalog_workspace.create_or_update(
    CatalogWorkspace(production_workspace_id, production_workspace_id)
)

# Get the LDM (Logical Data Model) from the demo workspace
declarative_ldm = sdk.catalog_workspace_content.get_declarative_ldm(
    demo_workspace_id
)
# Get the analytics model (metrics, dashboards, etc.) from the demo workspace
declarative_analytics_model = (
    sdk.catalog_workspace_content.get_declarative_analytics_model(demo_workspace_id)
)

# Put the LDM into the production workspace first,
# because the analytics model refers to objects defined in the LDM
sdk.catalog_workspace_content.put_declarative_ldm(
    production_workspace_id, declarative_ldm
)
# Put the analytics model (metrics, dashboards, etc.) into the production workspace
sdk.catalog_workspace_content.put_declarative_analytics_model(
    production_workspace_id, declarative_analytics_model
)
```

Define a workflow in a YAML file.

The workflow sets up an environment for the Python script from the previous step and runs the script.

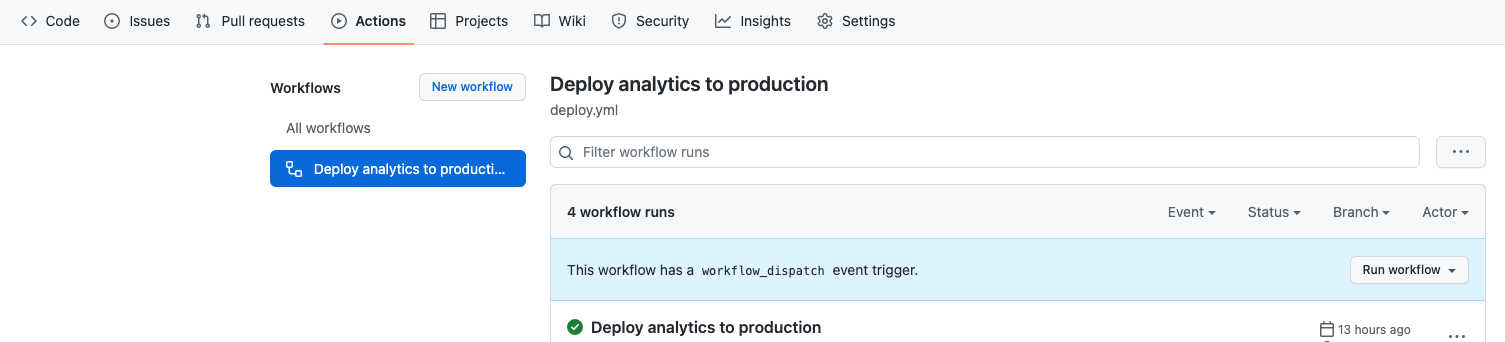

```yaml
name: Deploy analytics to production

on:
  workflow_dispatch:
    inputs:
      name:
        description: 'Deploy analytics to production'
        required: false

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - name: Set up Python 3.8
        uses: actions/setup-python@v2
        with:
          python-version: '3.8'
      - name: Install GoodData Python SDK
        run: |
          python -m pip install --upgrade pip
          pip install gooddata-sdk
      - name: Run deploy script
        run: python deploy.py
```

Push and test the GitHub Action.

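Push the script to the root of your repository as `deploy.py`, and push the YAML file to the `.github/workflows` folder as `deploy.yml`. If everything is set up correctly, you will see the `Deploy analytics to production` workflow under Actions in your GitHub repository. Because the workflow uses the `workflow_dispatch` trigger, it does not start on push; run it manually with the Run workflow button.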
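The deployment script hard-codes the host and API token, and `deploy.py` is committed to the repository. A common alternative is to store the token as a GitHub repository secret and pass it to the script as an environment variable, for example by adding `env:` with `GOODDATA_API_TOKEN: ${{ secrets.GOODDATA_API_TOKEN }}` to the `Run deploy script` step. The sketch below shows how the script could read its settings; the variable names `GOODDATA_HOST` and `GOODDATA_API_TOKEN` and the helper `read_settings` are hypothetical, chosen for illustration.

```python
import os
from typing import Mapping, Optional, Tuple

# Hypothetical variable names; match them to the env: section of your workflow.
HOST_VAR = "GOODDATA_HOST"
TOKEN_VAR = "GOODDATA_API_TOKEN"
DEFAULT_HOST = "http://localhost:3000"


def read_settings(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (host, token) read from environment variables.

    The host falls back to DEFAULT_HOST. The token is required, so the
    deployment fails early instead of calling the API without credentials.
    """
    if env is None:
        env = os.environ
    host = env.get(HOST_VAR, DEFAULT_HOST)
    token = env.get(TOKEN_VAR)
    if not token:
        raise RuntimeError(f"Environment variable {TOKEN_VAR} is not set")
    return host, token
```

In the script, `host, token = read_settings()` would then replace the two hard-coded assignments before `GoodDataSdk.create(host, token)` is called.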

The CI/CD example in this tutorial is simple, but you can automate much more of your analytics with the GoodData Python SDK or APIs.
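As one illustration, the copy steps of the deployment script can be wrapped in a reusable function, so a single pipeline run could provision several target workspaces from one source. This is a minimal sketch, assuming an `sdk` object created with `GoodDataSdk.create` and target workspaces that already exist (created, for example, with `create_or_update` as in the script). The function name `copy_workspace_content` is hypothetical; it uses only the four `catalog_workspace_content` calls shown earlier and keeps their order.

```python
from typing import Any, Iterable


def copy_workspace_content(sdk: Any, source_id: str, target_ids: Iterable[str]) -> None:
    """Copy the LDM and analytics model from source_id into each target.

    Hypothetical helper: `sdk` is expected to be a GoodDataSdk instance.
    The source content is read once and then put into every target.
    """
    content = sdk.catalog_workspace_content
    ldm = content.get_declarative_ldm(source_id)
    analytics_model = content.get_declarative_analytics_model(source_id)
    for target_id in target_ids:
        # LDM first: the analytics model refers to objects defined in the LDM.
        content.put_declarative_ldm(target_id, ldm)
        content.put_declarative_analytics_model(target_id, analytics_model)
```

For the tutorial's setup, the call would be `copy_workspace_content(sdk, "demo", ["production"])`.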

Want to see more CI/CD examples? Check out the CI/CD GitHub repository.